AI Factory Playbook: A Developer’s Guide to Building Secure, Accelerated Gen AI Applications

At NVIDIA AI Day, Mr. Pham Vu Hung, Solutions Architect & Senior Consultant at FPT Smart Cloud, FPT Corporation, delivered the keynote “AI Factory Playbook: A Developer's Guide to Building Secure, Accelerated Gen AI Applications.”

Mr. Hung gives insights on how to achieve end-to-end AI development, from building generative AI models to deploying AI agents for your enterprise, on the NVIDIA H100/H200 GPU Cloud Platform using the domestically deployed AI factory. Specifically, the presentation touches on the benefits of the homegrown AI factory through rapid development and an optimized inference environment, with specific use cases.

- End-to-end AI development on a domestic AI factory: complete development, from generative AI models to AI agents, in a secure data center environment.

- Acceleration with NVIDIA H100/H200 GPUs: Accelerate training and inference with the latest GPUs to significantly shorten development time.

- Generative AI construction and fine-tuning: Highly accurate models are realized through state-of-the-art model construction and fine-tuning with individual data.

Building Up the AI/ML Stack

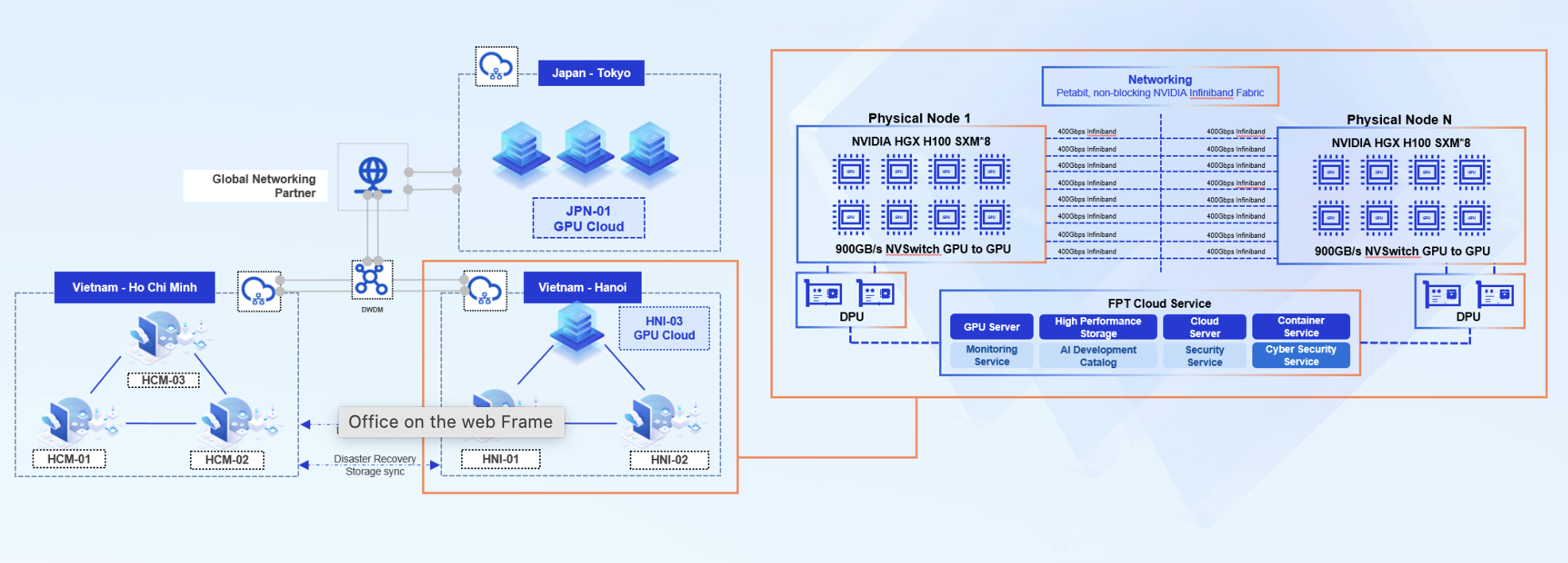

FPT AI Factory provides a comprehensive AI/ML infrastructure stack built on NVIDIA-certified Tier 3 & 4 data centers, ranked 36th and 38th in the TOP500 list (June 2025). Among its wide range of offerings, standout services include GPU Container, GPU Virtual Machine, and FPT AI Studio. Developers can also leverage Bare Metal, GPU Cluster, AI Notebook, and FPT AI Inference.

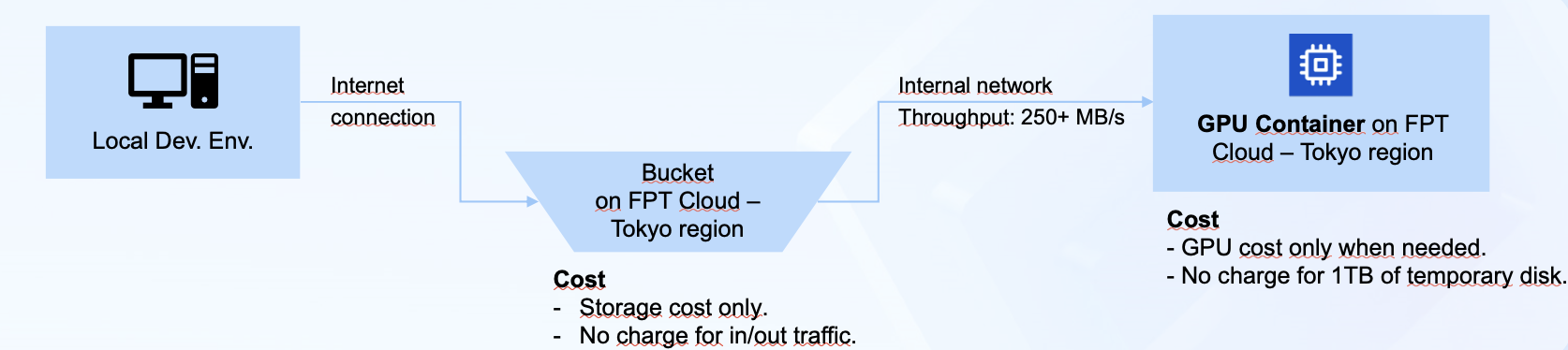

- GPU Container: Designed for experimentation workloads with built-in monitoring, logging, and collaborative notebooks. Developers can easily share data, write code, unit test, and execute in a highly flexible environment.

- GPU Virtual Machine: Multi-purpose VMs optimized for both training and inference, with flexible configuration options (from 1 to 8 GPUs per VM, up to 141GB VRAM per GPU).

- GPU Cluster: Scalable infrastructure for distributed training and large-scale inference. Equipped with NVLink, MIG/MPS/Time-slice GPU sharing, and advanced security add-ons like audit logs and CIS benchmarks.

- AI Notebook: A managed JupyterLab environment preloaded with essential AI/ML libraries. Developers can start coding instantly on enterprise-grade GPUs without setup overhead, achieving up to 70% cost savings compared to traditional notebook environments.

- FPT AI Studio: A no-code/low-code MLOps platform that integrates data pipelines, fine-tuning strategies (SFT, DPO, continual training), experiment tracking, and model registry. Its drag-and-drop GUI enables developers to fine-tune models quickly and store them in a centralized model hub.

- FPT AI Inference: Ready-to-use APIs with competitive token pricing, enabling developers to deploy fine-tuned models quickly and cost-effectively.
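To make the GPU Virtual Machine sizing above concrete, here is a rough sketch that estimates how many GPUs a model's weights would need. Only the 141 GB per-GPU figure and the 1 to 8 GPU range come from the text. The 2 bytes per parameter (16-bit weights) and the 1.2× overhead factor for activations and KV cache are illustrative assumptions, not FPT guidance.

```python
import math


def gpus_needed(params_billion, bytes_per_param=2, overhead=1.2,
                vram_gb_per_gpu=141, max_gpus_per_vm=8):
    """Return the smallest GPU count whose combined VRAM holds the model.

    Returns None when the estimate exceeds a single VM.
    The overhead factor is an assumed safety margin, not a measured value.
    """
    # 1e9 parameters * bytes per parameter / 1e9 bytes per GB
    weights_gb = params_billion * bytes_per_param
    required_gb = weights_gb * overhead
    count = max(1, math.ceil(required_gb / vram_gb_per_gpu))
    return count if count <= max_gpus_per_vm else None


for size in (8, 70, 405):
    print(f"{size}B parameters in 16-bit: {gpus_needed(size)} GPU(s)")
```

An estimate like this is only a starting point. Real memory use depends on batch size, sequence length, and the serving or training framework.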

During the keynote, Mr. Hung not only emphasized the broad capabilities of AI Factory but also illustrated them with a concrete customer case. FPT collaborated with a Japanese IT company to fine-tune the Donut (Document Understanding Transformer) model on a dataset exceeding 300GB. By combining GPU Container with FPT Object Storage, the customer was able to handle large-scale document data efficiently while optimizing costs, a practical example of how enterprises can use FPT AI Factory’s infrastructure for real-world workloads.

Accelerating the Deployment of Real-World AI Solutions

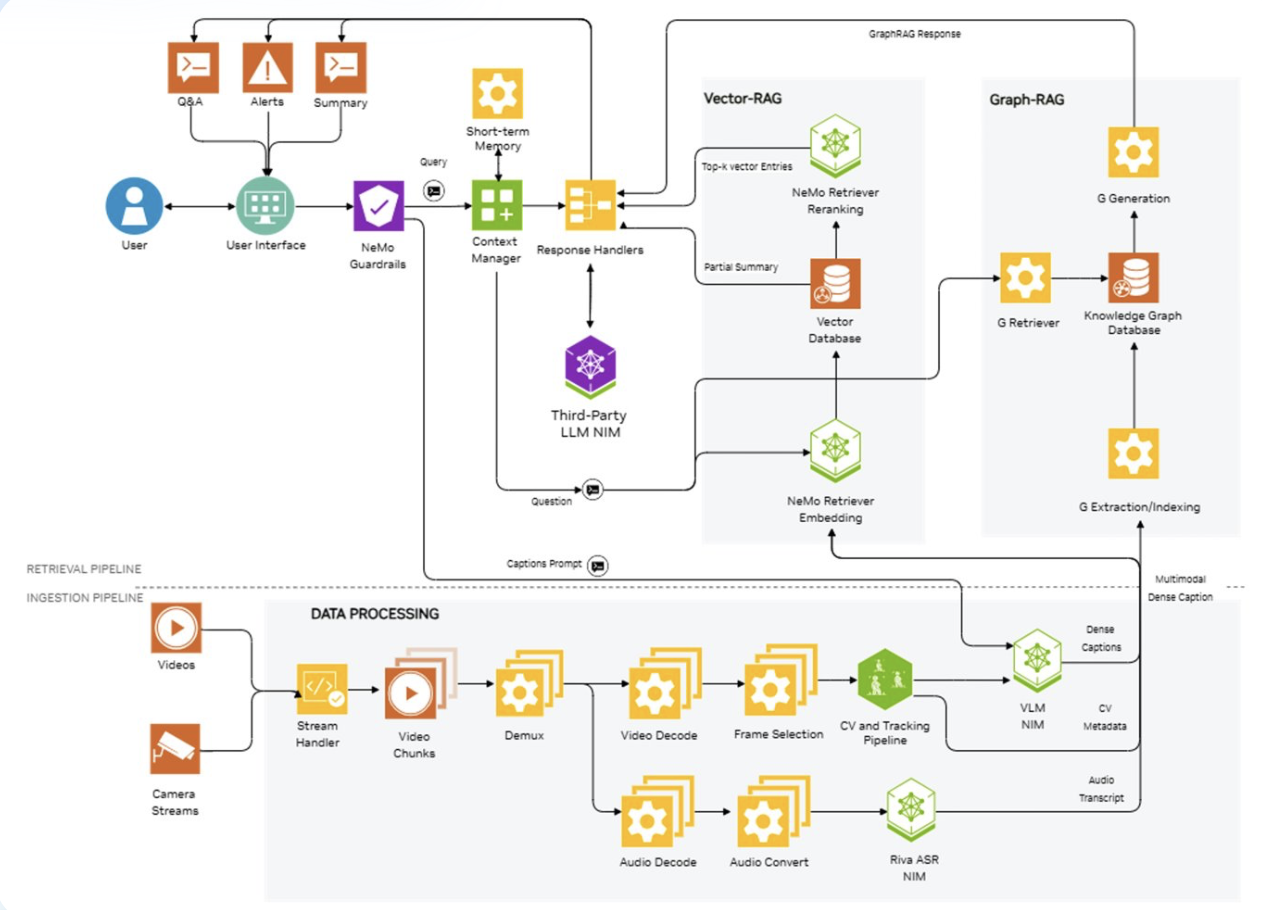

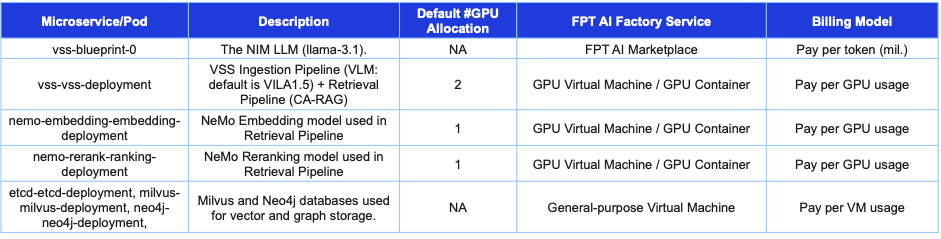

One of the highlights was a live demo of an AI Camera Agent designed for video search and summarization. The workflow is simple yet powerful: select a video, provide a brief description of what you want to find, and the agent automatically identifies relevant segments and generates concise summaries in real time.

What makes this possible is the integration of NVIDIA Blueprints, which provide pre-validated solution architectures and tools for rapid experimentation. Instead of spending months building a prototype from scratch, we were able to move from concept to a working demo in just a single day. This acceleration not only validates the feasibility of the solution but also gives enterprises a tangible way to envision how AI can be applied to their own video data challenges.

In particular, FPT AI Factory delivers a full-stack environment, from infrastructure components such as GPU, VM, and Kubernetes to the developer tools required, to deploy AI solutions quickly and efficiently. With a flexible architecture and ready-to-use models, developers can even stand up complete solutions powered by just a single NVIDIA H100 GPU, balancing performance, scalability, and cost-effectiveness.

For example, FPT AI Inference offers a library of ready-to-use models that developers can integrate instantly through simple API calls. With competitive per-token pricing, teams can run inference workloads faster while significantly reducing costs, enabling businesses to bring AI-powered applications to market more efficiently.
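As an illustration of what a "simple API call" might look like, the sketch below builds an HTTP request for a hosted chat model. Everything specific here is an assumption: the URL, the model ID, and the chat-completions-style JSON schema are placeholders, not the documented FPT AI Inference API. Check the provider's documentation for the real endpoint and payload format.

```python
import json
import urllib.request

# Hypothetical placeholders: replace with values from your provider's docs.
API_URL = "https://inference.example.com/v1/chat/completions"
MODEL_ID = "example-llm"


def build_chat_request(prompt, api_key, max_tokens=256):
    """Build (but do not send) a POST request with an assumed chat-style payload."""
    payload = {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


request = build_chat_request(
    "Summarize this incident report in two sentences.",
    api_key="YOUR_API_KEY",
)

# Sending is left out on purpose. With a real endpoint it would look like:
# with urllib.request.urlopen(request, timeout=30) as response:
#     body = json.load(response)
```

Because billing is per token, capping `max_tokens` is one simple lever for keeping inference costs predictable.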

Taking AI Model Fine-Tuning to the Next Level

Developers today can fine-tune models directly on GPU Container, which is great for experimentation and quick iteration. However, moving from one-off experiments to a production-ready solution requires more than just compute power. It needs automation, reproducibility, and integration into a full MLOps pipeline.

FPT AI Studio provides the right environment to streamline fine-tuning and deployment. The platform is designed to be accessible, with a drag-and-drop GUI for building workflows quickly, while still allowing deep customization for advanced users. It comes with common MLOps components:

- AI Notebook for code-driven experimentation.

- Data Processing pipelines to handle preprocessing and feature engineering.

- Fine-tuning strategies including continual training, domain adaptation, and transfer learning.

Once a model is fine-tuned in AI Studio, it can be stored in the Model Hub - a central repository for versioning, sharing, and reuse. From there, models can be seamlessly transferred to FPT AI Inference for scalable, low latency serving in production environments.

For illustration, Mr. Hung walked through a case study of how FPT AI Studio can be applied to adapt a large language model for the Vietnamese healthcare domain. The base model chosen is Llama-3.1-8B, which provides a strong balance between capacity and efficiency. The task is to develop a model optimized for healthcare question answering, requiring domain-specific adaptation while retaining the general reasoning ability of the base model. The dataset consists of Vietnamese healthcare documents, and the goal is to enhance factual recall, domain precision, and response quality in clinical Q&A scenarios.

The first approach relies on continual pre-training. Using 24 NVIDIA H100 GPUs across three nodes, the model is exposed to the healthcare dataset for three epochs. The entire pipeline takes approximately 31 hours to complete.

The second approach applies supervised fine-tuning with LoRA adapters, which represents a more resource-efficient alternative. In this setting, only four NVIDIA H100 GPUs are used on a single node, and training is performed for five epochs. The total runtime of the pipeline is roughly 3 hours. While less computationally demanding, this strategy still delivers significant improvements for downstream Q&A tasks.
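The resource gap between the two approaches is easier to see in GPU-hours. The short calculation below uses only the GPU counts and approximate runtimes quoted above. It compares compute cost only and says nothing about model quality, since the two runs use different methods and epoch counts.

```python
def gpu_hours(num_gpus, wall_clock_hours):
    """Total GPU time consumed by a run: GPUs multiplied by elapsed hours."""
    return num_gpus * wall_clock_hours


# Figures from the case study (runtimes are approximate).
continual_pretraining = gpu_hours(num_gpus=24, wall_clock_hours=31)
lora_sft = gpu_hours(num_gpus=4, wall_clock_hours=3)
ratio = continual_pretraining / lora_sft

print(f"Continual pre-training: {continual_pretraining} GPU-hours")
print(f"SFT with LoRA:          {lora_sft} GPU-hours")
print(f"Ratio:                  {ratio:.0f}x")
```

With these inputs, continual pre-training uses about 744 GPU-hours against about 12 for LoRA-based fine-tuning, roughly a 62× difference in compute.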

Best Practices

First, select the right tool for each workload to maximize both performance and cost-efficiency. FPT AI Factory provides tools for every type of AI/ML workload, supporting faster, more efficient AI innovation. For early experimentation, GPU Container or AI Notebook gives developers a flexible environment for testing ideas and running quick prototypes. For deployment, the right choice depends on the workload: GPU Container is ideal for lightweight inference, whereas GPU Virtual Machine delivers the performance needed for real-time or batch inference. High-performance computing (HPC) workloads run best on Metal Cloud, which provides bare-metal performance for intensive tasks. Finally, organizations looking for ready-to-use models can turn to the AI Marketplace, which offers pre-trained LLMs and services to accelerate adoption without additional fine-tuning.

Second, developers should optimize training workloads. For large generative AI models, this requires a combination of hardware-aware techniques and workflow engineering. One key practice is mixed-precision training, which uses formats such as FP16 or BF16 to accelerate computation on NVIDIA GPUs while reducing memory usage by up to half. This shortens training time, and automatic loss scaling preserves accuracy by keeping small gradients representable in reduced precision. Distributed training is equally important: strategies like PyTorch DDP or pipeline parallelism allow workloads to scale across multiple GPUs or nodes, improving throughput and accelerating development cycles. In multi-node environments, optimizing cluster interconnects with NVLink or InfiniBand can further boost training speed by up to three times, ensuring efficient synchronization for large-scale AI tasks. Data pipelines and storage must also be optimized, employing NVIDIA DALI and scalable I/O to avoid bottlenecks. Finally, benchmarking tools such as FPT AI Factory’s GPU performance tests and NVIDIA’s MLPerf results help validate configurations, ensuring cost-effective scaling for fine-tuning.
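To show why loss scaling matters in FP16 mixed-precision training, the sketch below uses only the Python standard library to round values to half precision. The gradient value and the static scale of 2^16 are illustrative assumptions. In practice, frameworks such as PyTorch automate this with dynamic scaling, and BF16 often needs no scaling because of its wider exponent range.

```python
import struct


def to_fp16(value):
    """Round a Python float to IEEE 754 half precision and back."""
    return struct.unpack("<e", struct.pack("<e", value))[0]


LOSS_SCALE = 65536.0  # 2**16, an illustrative static scale factor

# A small gradient, as might appear deep in a large network (assumed value).
true_gradient = 1e-8

# Without scaling, the gradient is below FP16's smallest subnormal and underflows.
unscaled = to_fp16(true_gradient)

# With scaling, the value lands in FP16's representable range. Dividing by the
# scale afterwards (done in full precision) recovers the gradient.
scaled = to_fp16(true_gradient * LOSS_SCALE)
recovered = scaled / LOSS_SCALE

print(f"FP16 without scaling: {unscaled}")
print(f"FP16 with scaling, then unscaled: {recovered:.3e}")
```

Scaling the loss before the backward pass scales every gradient by the same factor, which is why a single multiplication is enough to protect all of them from underflow.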

Third, it is crucial to optimize inference workloads to deliver scalable, low-latency generative AI services. One effective approach is quantization with NVIDIA TensorRT, converting models to lower-precision formats such as FP8 or INT8 for up to 1.4× higher throughput with minimal accuracy trade-offs. For large language models, managing the KV cache efficiently is equally important; techniques such as PagedAttention and chunked prefill can cut memory fragmentation and reduce time-to-first-token by as much as 2–5× in multi-user scenarios. Speculative decoding further boosts performance by pairing a smaller draft model with the main LLM: the draft model proposes several tokens at once and the main model verifies them, yielding 1.9–3.6× throughput gains while minimizing latency, which is especially valuable in real-time applications like video summarization. Scaling with multi-GPU parallelism also plays a key role, enabling up to 1.5× gains on distributed inference tasks in high-volume clusters. Finally, model distillation and pruning help shrink models, cutting costs and latency by 20–30% without sacrificing output quality.
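The draft-and-verify idea behind speculative decoding can be sketched with toy models. This is a simplified greedy variant under stated assumptions: `target_next` and `draft_next` are hypothetical stand-ins for real models, tokens are small integers, and the verification loop stands in for what a real system would do in one batched forward pass of the main model.

```python
def target_next(context):
    """Toy 'main model': deterministic next token from the last token."""
    return (context[-1] * 3 + 1) % 10


def draft_next(context):
    """Toy 'draft model': agrees with the target except after token 3."""
    if context[-1] == 3:
        return 9  # deliberate disagreement to exercise the rejection path
    return target_next(context)


def greedy_decode(prompt, num_new_tokens):
    """Baseline: one target-model call per generated token."""
    tokens = list(prompt)
    for _ in range(num_new_tokens):
        tokens.append(target_next(tokens))
    return tokens


def speculative_decode(prompt, num_new_tokens, k=4):
    """Greedy speculative decoding; returns (tokens, verification_rounds)."""
    tokens = list(prompt)
    rounds = 0
    while len(tokens) - len(prompt) < num_new_tokens:
        # 1. The draft model cheaply proposes k tokens.
        context = list(tokens)
        proposal = []
        for _ in range(k):
            token = draft_next(context)
            proposal.append(token)
            context.append(token)

        # 2. The target model checks the proposal (one round of verification).
        rounds += 1
        context = list(tokens)
        accepted = []
        for token in proposal:
            expected = target_next(context)
            if token != expected:
                accepted.append(expected)  # replace the first wrong token
                break
            accepted.append(token)
            context.append(token)
        else:
            accepted.append(target_next(context))  # all accepted: bonus token

        tokens.extend(accepted)
    return tokens[: len(prompt) + num_new_tokens], rounds


baseline = greedy_decode([1], 8)
speculative, verification_rounds = speculative_decode([1], 8, k=4)
print(baseline == speculative, verification_rounds)
```

The output matches plain greedy decoding token for token, but the main model is consulted in fewer rounds than there are generated tokens. The actual speedup depends on how often the draft model agrees with the main model.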

Key Takeaways

- How to Architect a Secure, End-to-End AI Workflow: The session deconstructs the architecture of a production "AI factory," focusing on the design principles for creating a secure development lifecycle within a local data center. It covers the technical steps for ensuring data isolation, managing secure model hosting, and creating a reliable pathway from model fine-tuning to the deployment of enterprise-grade AI agents.

- Practical Techniques for GPU-Accelerated LLM Operations: The session goes beyond the specs to show how to practically leverage high-performance GPUs (like the NVIDIA H100/H200). It covers specific, actionable best practices for optimizing both training and inference workloads to maximize throughput, minimize latency, and significantly reduce development cycles for demanding generative AI applications.