Enhancing the Power of Generative AI with Retrieval-Augmented Generation

Artificial Intelligence (AI) is advancing rapidly, transforming industries and reshaping how organizations interact with technology. At the center of this evolution are Large Language Models (LLMs) such as OpenAI’s ChatGPT and Google Gemini. These models deliver impressive capabilities in understanding and generating natural language, making them valuable across multiple business domains.

However, LLMs also have inherent limitations. Their knowledge is limited to their pre-training data, which is fixed at training time and can become outdated or incomplete. As a result, they may produce inaccurate or misleading outputs and struggle with specialized or real-time queries.

Retrieval-Augmented Generation (RAG) has emerged to address these challenges. It combines the generative strengths of LLMs with the precision of external knowledge retrieval, enabling more accurate, reliable, and business-ready AI solutions.

What Is Retrieval-Augmented Generation?

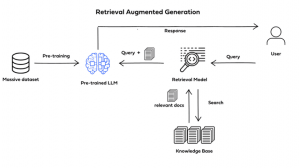

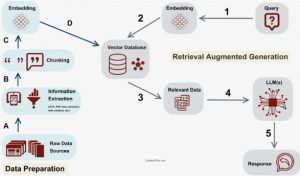

Retrieval-Augmented Generation (RAG) is an AI approach built to improve how large language models (LLMs) generate responses. Instead of relying solely on the model’s pre-trained knowledge, RAG integrates a retriever component that sources information from external knowledge bases such as APIs, online content, databases, or document repositories.

RAG was developed to improve the quality of LLM responses

The retriever can be tailored to achieve different levels of semantic precision and depth, commonly using:

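- Vector Databases: Documents and user queries are transformed into dense vector embeddings (via transformer-based models like BERT) so that relevant content can be found through similarity search. Alternatively, sparse vectors such as TF-IDF can be used; these weight each term by how often it appears in a document and how rare it is across the collection.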

- Graph Databases: Knowledge is structured through relationships among entities extracted from text. This can deliver high accuracy, but it requires very precise initial queries.

- SQL Databases: Useful for storing structured information, though less flexible for semantic-driven search tasks.
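At its core, the vector-database approach turns text into vectors and ranks documents by their similarity to the query. The sketch below is a minimal, dependency-free illustration of the sparse TF-IDF variant scored with cosine similarity. The helper names (`tokenize`, `build_tfidf_index`, `search`) and the sample manual snippets are hypothetical, and the smoothed IDF formula is one common choice rather than a fixed standard. A dense setup would replace the TF-IDF vectors with embeddings from a transformer model and keep them in a vector database instead of Python dicts.

```python
import math
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercase and keep alphanumeric runs only (a deliberately simple tokenizer).
    return "".join(c.lower() if c.isalnum() else " " for c in text).split()


def build_tfidf_index(docs: list[str]) -> tuple[list[dict[str, float]], dict[str, float]]:
    """Return one sparse TF-IDF vector (term -> weight) per document, plus the IDF table."""
    tokenized = [tokenize(d) for d in docs]
    n = len(docs)
    # Document frequency: in how many documents each term appears.
    df = Counter(term for toks in tokenized for term in set(toks))
    # Smoothed IDF: terms that are rare across the collection get larger weights.
    idf = {t: math.log((1 + n) / (1 + c)) + 1 for t, c in df.items()}
    vectors = []
    for toks in tokenized:
        tf = Counter(toks)
        total = len(toks) or 1  # avoid dividing by zero for empty documents
        vectors.append({t: (cnt / total) * idf[t] for t, cnt in tf.items()})
    return vectors, idf


def cosine(a: dict[str, float], b: dict[str, float]) -> float:
    # Cosine similarity between two sparse vectors stored as dicts.
    dot = sum(w * b.get(t, 0.0) for t, w in a.items())
    na = math.sqrt(sum(w * w for w in a.values()))
    nb = math.sqrt(sum(w * w for w in b.values()))
    return dot / (na * nb) if na and nb else 0.0


def search(query: str, docs: list[str], vectors: list[dict[str, float]],
           idf: dict[str, float], k: int = 2) -> list[tuple[str, float]]:
    tf = Counter(tokenize(query))
    total = sum(tf.values()) or 1
    # Query terms that never occur in the collection have no IDF entry and are ignored.
    qvec = {t: (c / total) * idf[t] for t, c in tf.items() if t in idf}
    scores = [cosine(qvec, v) for v in vectors]
    ranked = sorted(range(len(docs)), key=lambda i: scores[i], reverse=True)
    return [(docs[i], scores[i]) for i in ranked[:k]]


# Hypothetical knowledge base: snippets from a product manual.
docs = [
    "To reset the remote, hold the power and home buttons for five seconds.",
    "Replace the batteries by sliding the back cover down.",
    "The warranty covers manufacturing defects for one year.",
]
vectors, idf = build_tfidf_index(docs)
top = search("How do I reset the remote?", docs, vectors, idf, k=1)
print(top[0][0])
```

Because "reset" and "remote" appear in only one snippet, they receive higher IDF weights than a common word like "the", which is what pushes the reset instructions to the top.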

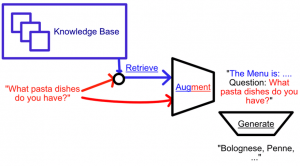

RAG is especially effective for handling vast amounts of unstructured data, such as the information scattered across the internet. While this data is abundant, it is rarely organized in a way that directly answers user queries.

That is why RAG has become widely adopted in virtual assistants and chatbots (e.g., Siri, Alexa). When a user asks a question, the system retrieves relevant details from available sources and generates a clear, concise, and contextually accurate answer. For instance, if asked, “How do I reset the ABC remote?”, RAG can pull instructions from product manuals and deliver a straightforward response.

By blending external knowledge retrieval with LLM capabilities, RAG significantly enhances user experiences, enabling precise and reliable answers even in specialized or complex scenarios.

The RAG model is often applied in virtual assistants and chatbots

Why is RAG important?

Large Language Models (LLMs) like OpenAI’s ChatGPT and Google Gemini have set new standards in natural language processing, with capabilities ranging from comprehension and summarization to content generation and prediction. Yet, despite their impressive performance, they are not without limitations. When tasks demand domain-specific expertise or up-to-date knowledge beyond the scope of their training data, LLMs may produce outputs that appear fluent but are factually incorrect. This issue is commonly referred to as AI hallucination.

The challenge becomes even more apparent in enterprise contexts. Organizations often manage massive repositories of proprietary information (technical manuals, product documentation, or knowledge bases) that are difficult for general-purpose models to navigate. Even advanced models like GPT-4, designed to process lengthy inputs, can still encounter problems such as the “lost in the middle” effect, where the model makes less reliable use of details placed in the middle of a long input than of those near its beginning or end.
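One common way RAG pipelines sidestep this problem is to avoid passing whole documents to the model: long documents are split into smaller, overlapping chunks, the chunks are indexed, and only the few most relevant ones are retrieved for a given query. The sketch below is a minimal illustration under simplifying assumptions. It measures chunk size in words, whereas production systems often count model tokens and prefer sentence or section boundaries; `chunk_words` and its default sizes are hypothetical.

```python
def chunk_words(text: str, chunk_size: int = 50, overlap: int = 10) -> list[str]:
    """Split text into windows of `chunk_size` words, each sharing `overlap` words with the previous one."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("require chunk_size > 0 and 0 <= overlap < chunk_size")
    words = text.split()
    step = chunk_size - overlap  # how far each window advances
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        # Stop once a window reaches the end, so no chunk is just a copy of the overlap.
        if start + chunk_size >= len(words):
            break
    return chunks


sample = " ".join(f"w{i}" for i in range(12))
chunks = chunk_words(sample, chunk_size=5, overlap=2)
print(chunks)
```

The overlap keeps a sentence that straddles a boundary intact in at least one chunk, at the cost of storing some text twice.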

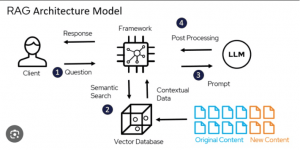

Retrieval-Augmented Generation (RAG) emerged as a solution to these challenges. By integrating a retrieval mechanism, RAG allows LLMs to pull information directly from external sources, including both public data and private enterprise repositories. This approach not only bridges gaps in the model’s knowledge but also reduces the risk of hallucination, ensuring responses are grounded in verifiable information.
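In practice, "grounding" usually means inserting the retrieved passages into the prompt and instructing the model to answer from them, with labels that let the answer cite its sources. The sketch below shows one way to build such a prompt; the instruction wording, the `build_grounded_prompt` helper, and the manual file name are illustrative assumptions, and no particular LLM API is implied.

```python
def build_grounded_prompt(question: str, passages: list[tuple[str, str]]) -> str:
    """Build a prompt from (source_id, text) pairs returned by a retriever."""
    # Number each passage so the model can cite it as [1], [2], ...
    context = "\n".join(
        f"[{i}] ({source}) {text}" for i, (source, text) in enumerate(passages, start=1)
    )
    return (
        "Answer the question using only the sources below. "
        "Cite sources by number, and say you don't know if they do not contain the answer.\n\n"
        f"Sources:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


prompt = build_grounded_prompt(
    "How do I reset the ABC remote?",
    [("abc-remote-manual.pdf, p. 4",  # hypothetical source label
      "Hold the power and home buttons for five seconds to reset the remote.")],
)
print(prompt)
```

Keeping the source label next to each passage is what makes the answer verifiable: a reader can trace a cited "[1]" back to the exact document it came from.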

For applications like chatbots, virtual assistants, and question-answering systems, the combination of retrieval and generation marks a significant step forward—enabling accurate, up-to-date, and context-aware interactions that enterprises can trust.

RAG enables LLMs to retrieve information from external sources, limiting AI hallucination

Retrieval-Augmented Generation Pipeline
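The pipeline can be summarized in three steps: retrieve relevant content, augment the prompt with it, and generate an answer. The toy sketch below wires those steps together. Retrieval is scored by shared words as a stand-in for embedding similarity, and `generate` is any function from prompt to text; the `MinimalRAG` class and the `echo_generator` stub are hypothetical, and a real system would call an LLM at that point.

```python
import re
from typing import Callable


def _tokens(text: str) -> set[str]:
    # Lowercase alphanumeric words; punctuation is dropped.
    return set(re.findall(r"[a-z0-9]+", text.lower()))


class MinimalRAG:
    """Toy retrieve -> augment -> generate loop."""

    def __init__(self, generate: Callable[[str], str]) -> None:
        self.generate = generate
        self.docs: list[str] = []

    def add(self, text: str) -> None:
        # New knowledge is usable immediately; the model itself is not retrained.
        self.docs.append(text)

    def retrieve(self, query: str, k: int = 1) -> list[str]:
        # Rank documents by how many words they share with the query.
        q = _tokens(query)
        ranked = sorted(self.docs, key=lambda d: len(q & _tokens(d)), reverse=True)
        return ranked[:k]

    def answer(self, query: str, k: int = 1) -> str:
        # Augment: place the retrieved text ahead of the question in the prompt.
        context = "\n".join(self.retrieve(query, k))
        prompt = f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"
        return self.generate(prompt)


def echo_generator(prompt: str) -> str:
    # Hypothetical stand-in for an LLM call: returns the first context line.
    return prompt.split("\n")[1]


rag = MinimalRAG(echo_generator)
rag.add("Warranty: defects are covered for one year.")
rag.add("Reset: hold the power and home buttons on the ABC remote for five seconds.")
result = rag.answer("How do I reset the ABC remote?")
print(result)
```

Because `generate` is injected, the same loop can be pointed at any model without changing the retrieval or prompt-building code.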

Benefits of RAG

RAG offers several significant advantages over standalone LLMs:

- Up-to-Date Knowledge: Dynamically retrieves the latest information without retraining the model.

- Reduced Hallucination: Grounded answers minimize the risk of fabricated content.

- Transparency: Can cite the retrieved sources, enabling users to verify claims.

- Cost Efficiency: Reduces the need for frequent retraining cycles, lowering computational and financial overhead.

- Scalability: Works across domains, from healthcare and finance to enterprise IT.

- Versatility: Powers applications such as chatbots, search systems, and intelligent summarization tools.

Practical Use Cases Across Industries

RAG is emerging as the key to helping Generative AI overcome the limitations of models like ChatGPT or Gemini, which rely solely on pre-trained data that can quickly become outdated or inaccurate.

By combining the generative capabilities of language models with external data retrieval, RAG delivers clear, real-time answers, minimizes AI hallucination, and helps businesses optimize costs.

In practice, RAG is already shaping the future of AI across multiple domains:

- Chatbots and Customer Service: Provide instant, accurate responses by retrieving answers directly from product manuals, FAQs, or knowledge bases.

- Healthcare: Deliver reliable medical insights by sourcing information from verified clinical guidelines and research databases.

- Finance: Equip analysts with real-time market updates and contextual insights drawn from live data feeds.

- Knowledge Management: Help employees interact with technical documentation and compliance materials in a natural, conversational way.

These practical use cases illustrate how RAG makes AI more reliable, transparent, and truly valuable across industries.

Future Outlook

RAG represents a pivotal step toward trustworthy, authoritative AI. By bridging parametric knowledge (learned during training) with retrieved knowledge (dynamic, external data), RAG addresses one of the greatest limitations of LLMs.

Advancements in agentic AI, where models orchestrate retrieval, reasoning, and generation autonomously, will push RAG even further. Combined with hardware acceleration (e.g., NVIDIA’s Grace Hopper Superchip), open-source frameworks like LangChain, and enterprise-ready infrastructure such as FPT AI Factory, which delivers high-performance GPUs for training and deploying complex RAG models, RAG will continue to evolve into the backbone of enterprise-grade generative AI.

Ultimately, Retrieval-Augmented Generation is not just a solution to hallucinations and knowledge gaps; it is the foundation enabling intelligent assistants, advanced chatbots, and enterprise-ready AI systems across industries.