DeepSeek-V3.2-Speciale: A New Reasoning Rival for GPT-5 & Gemini-3.0?

Less than a year after a knockout blow that stunned the AI industry, China’s DeepSeek is back with a new open-source model and an ambitious set of claims that are turning heads across the tech world. The newly released DeepSeek-V3.2-Speciale, fully open-source, is touted by the company as capable of competing with, and in some cases even surpassing, the biggest names today, including OpenAI’s GPT-5 and Google’s Gemini 3 Pro.

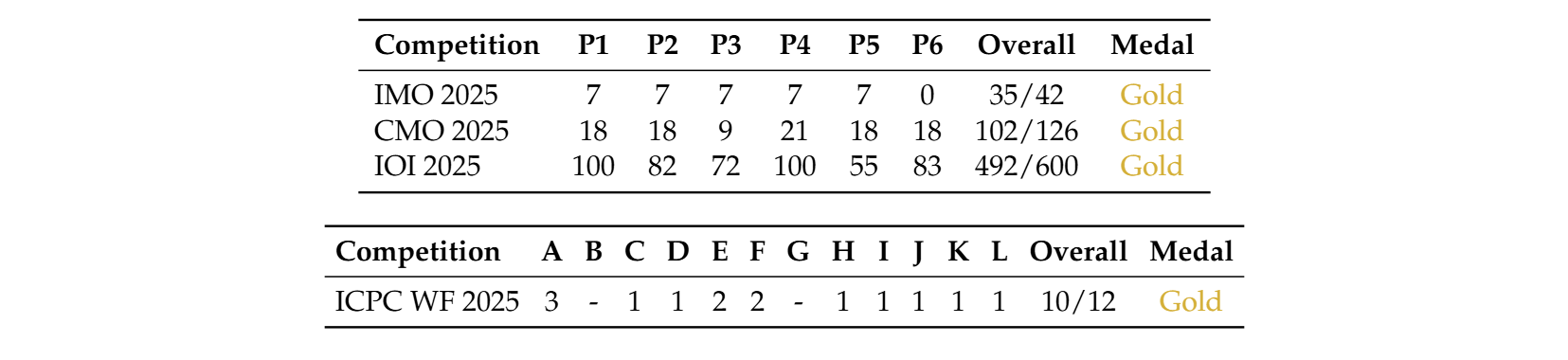

DeepSeek-V3.2-Speciale is a large language model that demonstrates exceptionally strong reasoning capabilities across mathematical, algorithmic, and logic-intensive evaluations. According to DeepSeek’s report, DeepSeek-V3.2-Speciale achieved gold-medal-level performance in both the 2025 International Mathematical Olympiad (IMO) and the 2025 International Olympiad in Informatics (IOI), showcasing its capacity to tackle highly structured mathematical proofs and algorithmic problems with a precision rarely seen in AI systems. Furthermore, its submissions to the ICPC World Finals 2025 reached top-tier placements, rivalling expert human competitors across timed programming challenges.

Table 1: Performance of DeepSeek-V3.2-Speciale in top-tier mathematics and coding competitions

Key technical highlights

In comparisons with frontier models such as GPT-5, DeepSeek-V3.2-Speciale shows greater consistency in multi-step reasoning, clearer intermediate logic, and lower variance in problem-solving outputs. These characteristics make the model particularly effective on tasks where correctness, logical depth, and reasoning stability are critical, highlighting an important direction for progress in large language model reasoning performance.

What sets DeepSeek-V3.2-Speciale apart is not sheer scale alone, but a design that focuses on efficient reasoning and problem decomposition. DeepSeek-V3.2-Speciale has been optimized through a combination of sparse attention mechanisms and a scalable reinforcement learning framework to deliver higher consistency and deeper multi-step reasoning in domains that require exact logical rigor.

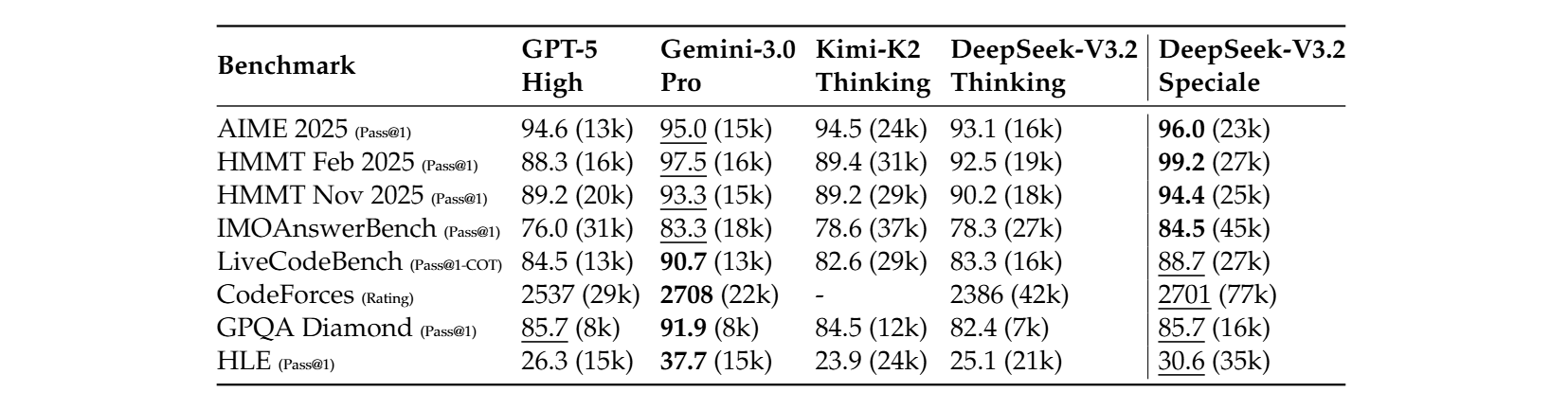

Table 2: Benchmark performance and efficiency of reasoning models

Across a range of reasoning-heavy benchmarks, DeepSeek-V3.2-Speciale consistently matches or outperforms GPT-5 High and Gemini 3.0 Pro, particularly on tasks that emphasize mathematical rigor and multi-step logical reasoning such as AIME 2025, HMMT Feb 2025, HMMT Nov 2025, and IMOAnswerBench.

Taken as a whole, the benchmark results suggest that DeepSeek-V3.2-Speciale has closed the reasoning gap with frontier models and, in several dimensions, moved ahead of them. Compared to GPT-5 and Gemini 3.0 Pro, DeepSeek-V3.2-Speciale shows stronger consistency on reasoning-intensive tasks, with fewer performance drop-offs across different problem distributions. Rather than excelling in isolated benchmarks, it delivers high, stable scores across mathematics, algorithmic reasoning, and competitive programming, indicating robustness rather than specialization in a single test format.

Relative to GPT-5, the results point to a clear trade-off: while GPT-5 remains broadly capable, its reasoning performance exhibits greater variance, whereas DeepSeek-V3.2-Speciale maintains more reliable accuracy on structured, multi-step problems. Against Gemini 3.0 Pro, which performs strongly on select benchmarks, DeepSeek-V3.2-Speciale distinguishes itself by sustaining top-tier performance across a wider range of reasoning evaluations, suggesting stronger generalization within the reasoning domain itself.

When to use DeepSeek-V3.2-Speciale

For practitioners, the implications are fairly clear once the nature of the task is defined. When the problem is pure reasoning on a bounded input, such as proving a functional inequality, solving a difficult combinatorics problem, or designing a non-trivial algorithm from scratch, DeepSeek-V3.2-Speciale stands out as one of the strongest engines available.

However, real-world workflows often extend beyond this narrow but demanding class of problems. When a task begins to blend reasoning with broader context, such as drawing on up-to-date world knowledge, working across large multi-file codebases, running shell commands, browsing, or handling multimodal inputs, raw contest performance becomes less decisive. In these mixed workflows, the broader ecosystem and tool integration matter more, and systems like GPT-5.1-High, Gemini-3-Pro, Claude-Opus-4.5, or even standard V3.2-Thinking often deliver better end-to-end results.
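
The rule of thumb in this section can be sketched as a small routing function. This is only an illustration under stated assumptions: the `Task` fields, the `choose_model` helper, and the two label strings are hypothetical names introduced for this sketch, not part of any DeepSeek or marketplace API.

```python
from dataclasses import dataclass

# Hypothetical labels for this sketch; they are not official API model identifiers.
REASONING_SPECIALIST = "DeepSeek-V3.2-Speciale"
GENERAL_PURPOSE = "general-purpose model"


@dataclass
class Task:
    """Flags describing what a task needs beyond pure reasoning (all assumed)."""
    needs_fresh_knowledge: bool = False   # up-to-date world knowledge
    touches_many_files: bool = False      # large multi-file codebases
    needs_tools: bool = False             # shell commands, browsing
    has_multimodal_input: bool = False    # images, audio, and similar inputs


def choose_model(task: Task) -> str:
    """Pick the reasoning specialist only for bounded, pure-reasoning tasks."""
    is_mixed_workflow = (
        task.needs_fresh_knowledge
        or task.touches_many_files
        or task.needs_tools
        or task.has_multimodal_input
    )
    return GENERAL_PURPOSE if is_mixed_workflow else REASONING_SPECIALIST
```

For example, a self-contained combinatorics problem maps to `Task()` and is routed to the reasoning specialist, while a task that must run shell commands maps to `Task(needs_tools=True)` and is routed to a general-purpose model.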

Conclusion

DeepSeek-V3.2-Speciale demonstrates that frontier-level reasoning can be achieved through focused design rather than sheer scale. Its strong and consistent performance on mathematical and algorithmic benchmarks places it among the most capable reasoning models available today. While it is not a universal solution for every workflow, in bounded, reasoning-heavy tasks it sets a new bar for reliability and logical depth, pointing toward a more specialized and purposeful direction for future language models.

To experience DeepSeek-V3.2-Speciale, visit our website at AI Marketplace. New users will receive up to 100 million tokens to explore and evaluate this robust model on real-world reasoning tasks.

Get started with DeepSeek-V3.2-Speciale here: https://marketplace.fptcloud.com/en