Dive into Claude Haiku 4.5: Faster, Smarter, and More Affordable

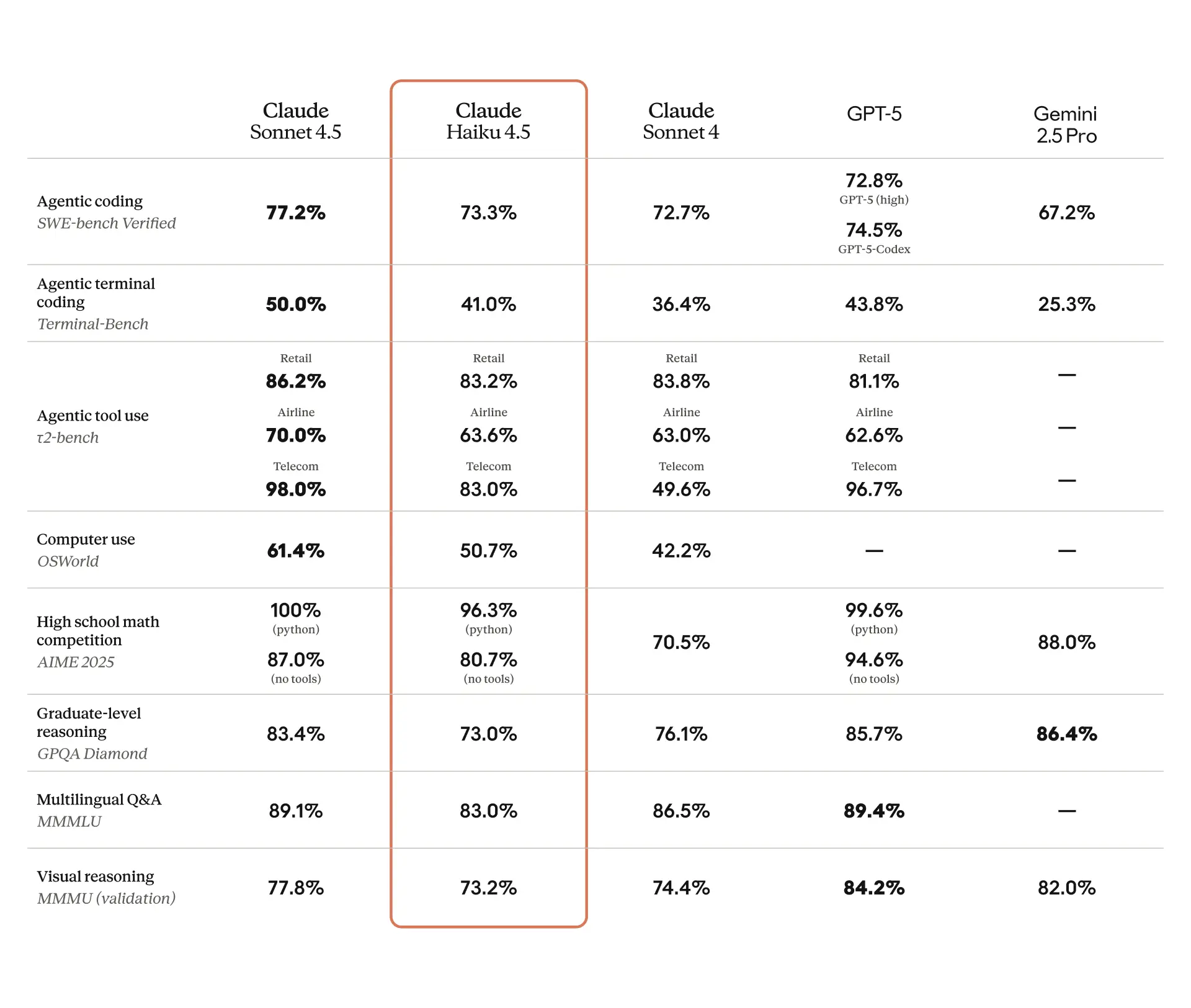

Following the release of Claude Sonnet 4.5, positioned as a leading model for coding and agentic use, Anthropic has introduced its newest small model: Claude Haiku 4.5. According to Anthropic, it delivers coding performance similar to Claude Sonnet 4 at one-third the cost and more than twice the speed.

Claude Haiku 4.5 is engineered for high-volume, low-latency, cost-sensitive deployments. If your workload involves long-running task sequences, many LLM calls, or multiple agents running in parallel, the lower price and latency change what is practical to build.

Key technical highlights

Claude Haiku 4.5 is described as a “small, fast model” in Anthropic’s classification. It sits below the “frontier” models but delivers near-frontier coding and reasoning performance at a much lower cost.

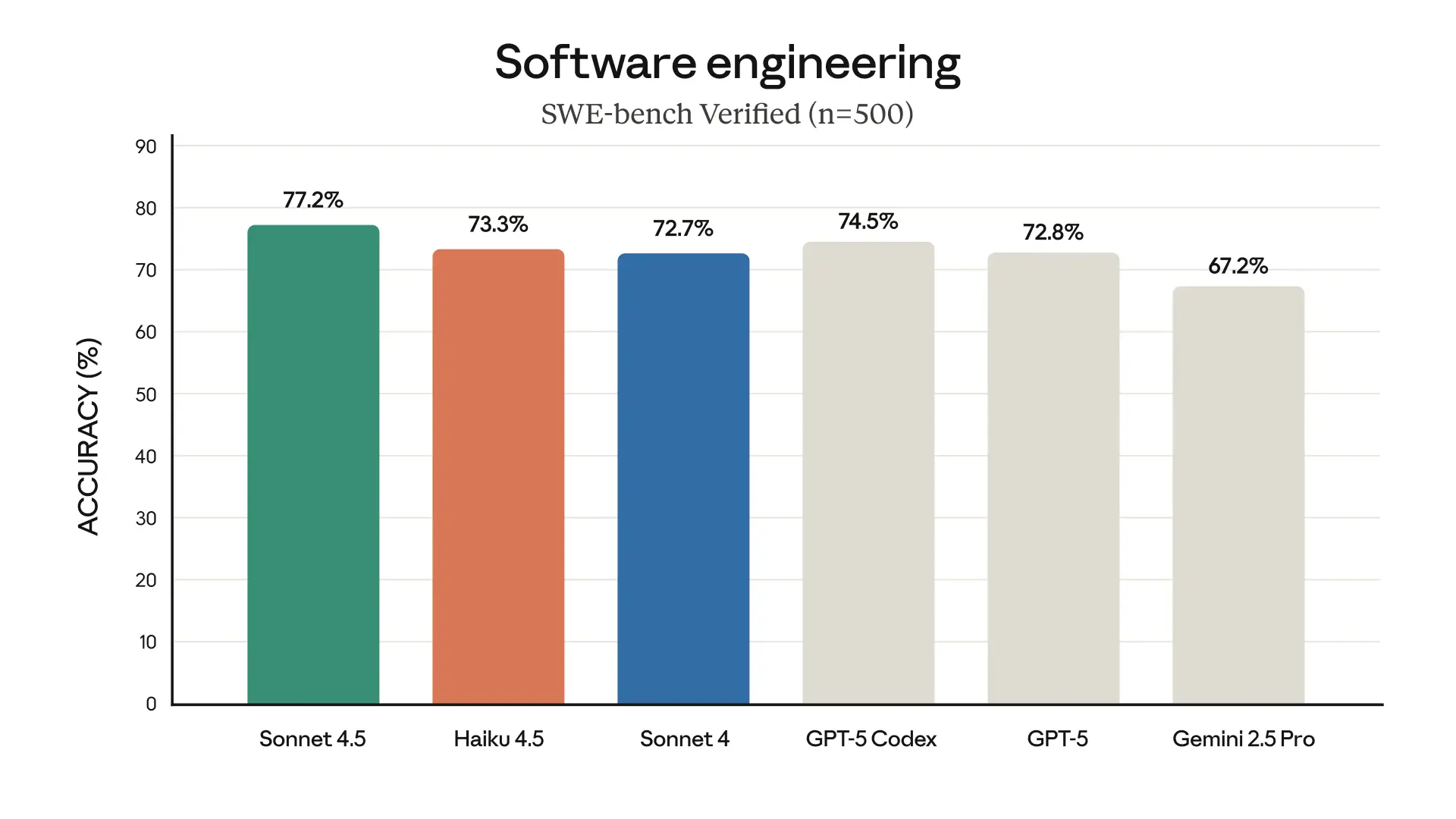

On SWE-bench Verified (a real-world software engineering test using GitHub issues), Claude Haiku 4.5 scored ~73.3%. By comparison, Claude Sonnet 4.5 scored ~77.2%.

Claude Haiku 4.5 accepts both text and image inputs and supports extended reasoning, computer use, and tool-assisted workflows.

The model is available via the Claude API at $1 per million input tokens and $5 per million output tokens (USD), well below the pricing of Anthropic's higher-tier models.
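To make the per-token pricing concrete, here is a minimal Python sketch that estimates the bill for a batch of calls. The Haiku 4.5 rates come from the figures above. The $3 / $15 comparison tier is an assumption based on Anthropic's published Sonnet-class pricing rather than anything stated in this article, and the workload numbers (10,000 calls, 2,000 input and 500 output tokens each) are purely illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    input_per_mtok: float   # USD per 1 million input tokens
    output_per_mtok: float  # USD per 1 million output tokens


# Rates quoted in this article for Claude Haiku 4.5.
HAIKU_4_5 = Pricing(input_per_mtok=1.0, output_per_mtok=5.0)

# ASSUMPTION: Sonnet-class list pricing, used only for comparison.
SONNET_CLASS = Pricing(input_per_mtok=3.0, output_per_mtok=15.0)


def estimate_cost(pricing: Pricing, calls: int,
                  input_tokens_per_call: int,
                  output_tokens_per_call: int) -> float:
    """Return the estimated USD cost for `calls` identical requests."""
    total_input = calls * input_tokens_per_call
    total_output = calls * output_tokens_per_call
    return (total_input * pricing.input_per_mtok
            + total_output * pricing.output_per_mtok) / 1_000_000


if __name__ == "__main__":
    # Illustrative workload: 10,000 calls, 2,000 tokens in, 500 tokens out.
    haiku = estimate_cost(HAIKU_4_5, 10_000, 2_000, 500)
    sonnet = estimate_cost(SONNET_CLASS, 10_000, 2_000, 500)
    print(f"Haiku 4.5:    ${haiku:,.2f}")   # $45.00
    print(f"Sonnet-class: ${sonnet:,.2f}")  # $135.00
```

Under these assumptions the same workload costs one-third as much on Haiku 4.5, which matches the ratio Anthropic quotes. Real bills also depend on factors this sketch ignores, such as prompt caching or batch discounts.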

On safety and alignment, Anthropic has released Haiku 4.5 under its AI Safety Level 2 (ASL-2) standard, a less restrictive classification than the ASL-3 applied to its larger models, and reports improved behavior on its alignment evaluations.

What this means for applications & users

For developers, product teams, and businesses, Claude Haiku 4.5 opens up new possibilities:

- Cost-sensitive workflows: When you are running thousands or tens of thousands of model calls (e.g., customer service assistants, chatbots, embedded agents), the lower cost per token matters.

- Speed/latency-critical use cases: Claude Haiku 4.5 is faster, so it is well-suited for real-time interaction, multi-agent orchestration, or workflows where response speed is key.

- Scaling agents: If you architect a system with a top-tier model as the “brain” and multiple sub-agents handling sub-tasks, Claude Haiku 4.5 offers a faster, cheaper sub-agent tier without sacrificing too much in capability.

- Maintain high capability: Claude Haiku 4.5 performs close to what was considered cutting-edge only months ago, at a more affordable price, across many real-world coding, tool-use, and reasoning tasks.

- Flexibility in deployment: Claude Haiku 4.5 is available on Claude Code and Anthropic’s apps. Developers can access the model via API and on major cloud platforms (e.g., Amazon Bedrock, Google Cloud’s Vertex AI), making model adoption smoother.
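The "brain plus sub-agents" pattern from the list above can be sketched as a simple fan-out with bounded concurrency. This is only an illustration under stated assumptions: `run_subagent` is a hypothetical stand-in that simulates a model call instead of contacting any API, the model identifier string is an assumption to be checked against the provider's documentation, and the subtask list would normally come from a larger planning model.

```python
import asyncio

# ASSUMPTION: identifier for the sub-agent tier; verify against provider docs.
SUBAGENT_MODEL = "claude-haiku-4-5"


async def run_subagent(task: str) -> str:
    """Hypothetical stand-in for one sub-agent model call (no network I/O)."""
    await asyncio.sleep(0)  # placeholder where a real API request would go
    return f"[{SUBAGENT_MODEL}] done: {task}"


async def orchestrate(subtasks: list[str], max_parallel: int = 4) -> list[str]:
    """Run subtasks concurrently, with at most `max_parallel` in flight."""
    semaphore = asyncio.Semaphore(max_parallel)

    async def bounded(task: str) -> str:
        async with semaphore:
            return await run_subagent(task)

    # gather() returns results in the same order as the input subtasks.
    return await asyncio.gather(*(bounded(t) for t in subtasks))


if __name__ == "__main__":
    # Illustrative subtasks a planner model might have produced.
    plan = ["summarize ticket", "search docs", "draft reply"]
    for line in asyncio.run(orchestrate(plan, max_parallel=2)):
        print(line)
```

The semaphore is the main design choice here: a cheap, fast sub-agent tier makes wide fan-out affordable, but a concurrency cap still helps keep the system within API rate limits.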

Conclusions

The era when only the most expensive models could deliver top performance is ending. With Claude Haiku 4.5, Anthropic offers a compelling value proposition: near-frontier performance, high speed, and significantly lower cost. For organizations looking to embed AI agents, deploy at scale, or experiment with generative AI workflows, this model opens doors that were previously closed by budget or latency constraints.

If you are working on AI-powered systems (chatbots, cloud agents, generative workflows), Claude Haiku 4.5 may well allow you to iterate faster, deploy more broadly, and keep your TCO (total cost of ownership) in check.

Source: https://www.anthropic.com/news/claude-haiku-4-5