FPT AI Factory: A Powerful AI Solution Suite with NVIDIA H100 and H200 Superchips

In the booming era of artificial intelligence (AI), Vietnam is making a strong mark on the global technology map through a strategic collaboration between FPT Corporation and NVIDIA, the world's leading provider of high-performance computing solutions. Together they are developing FPT AI Factory, a comprehensive end-to-end AI solution suite built on NVIDIA's most advanced AI hardware, the NVIDIA H100 and NVIDIA H200 superchips.

Video: Mr. Truong Gia Binh (Chairman of FPT Corporation) discusses the strategic cooperation with NVIDIA in developing comprehensive AI applications for businesses

According to Government News (2024), Mr. Truong Gia Binh, Chairman of the Board and Founder of FPT Corporation, emphasized that FPT aims to strengthen its technology research and development capabilities while building a comprehensive ecosystem of advanced AI- and cloud-based products and services. This ecosystem spans everything from cutting-edge technology infrastructure and top-tier experts to deep domain knowledge in various specialized fields. As he put it: "We are committed to making Vietnam a global hub for AI development."

1. Overview of the Two Superchips NVIDIA H100 & H200: A New Leap in AI Computing

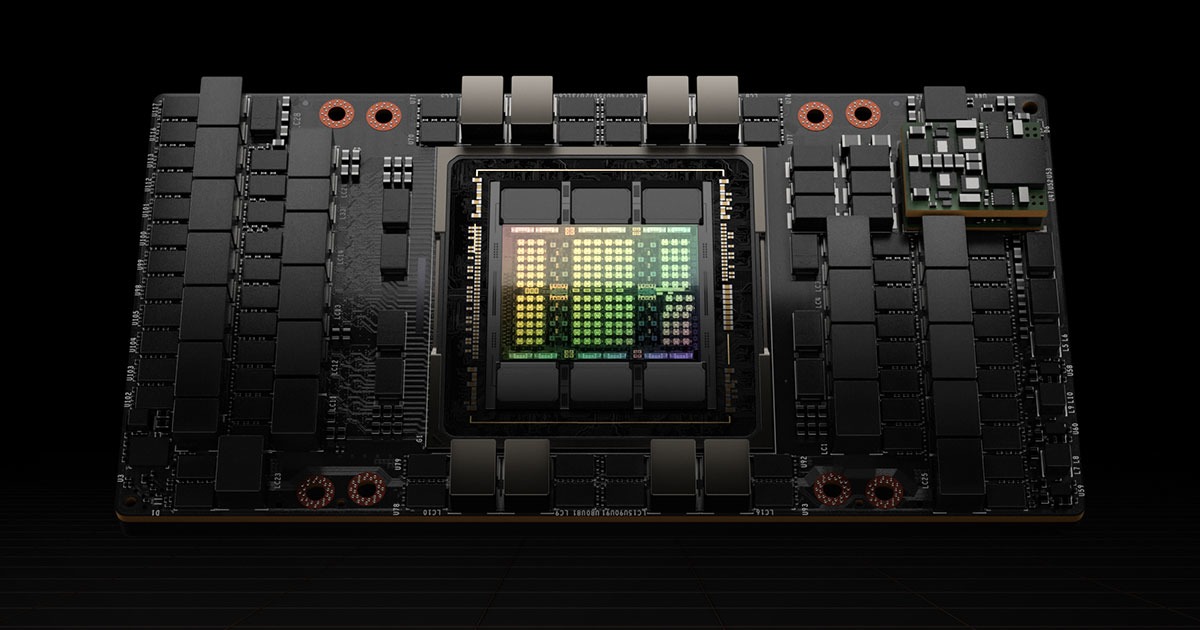

1.1 Information about the NVIDIA H100 Chip (NVIDIA H100 Tensor Core GPU)

The NVIDIA H100 Tensor Core GPU is a groundbreaking processor built on the NVIDIA Hopper™ architecture (NVIDIA's next-generation GPU design). It is not just an ordinary graphics processing chip but a processor specifically optimized for deep learning and artificial intelligence (AI) applications.

The NVIDIA H100 superchip is manufactured on TSMC's advanced N4 process and integrates 80 billion transistors. The full Hopper die contains up to 144 Streaming Multiprocessors (SMs), purpose-built for complex AI workloads; shipping products enable a subset of them (132 SMs on the H100 SXM5). The H100 delivers its best performance in the SXM5 form factor: thanks to the higher memory bandwidth and power budget that SXM5 provides, it significantly outperforms PCIe-card implementations, a critical advantage for enterprise applications that require large-scale data handling and high-speed AI processing.

NVIDIA offers the H100 in two form factors, the H100 SXM and the H100 NVL, designed to meet the diverse needs of today's enterprise market. Their typical use cases are as follows:

- H100 SXM version: Designed for specialized systems, supercomputers, or large-scale AI data centers aiming to fully harness the GPU’s potential with maximum NVLink scalability. This version is ideal for tasks such as training large AI models (LLMs, Transformers), AI-integrated High Performance Computing (HPC) applications, or exascale-level scientific, biomedical, and financial simulations.

- H100 NVL version: Optimized for standard servers, this version is easily integrated into existing infrastructure with lower cost and complexity compared to dedicated SXM systems. It is well-suited for enterprises deploying real-time AI inference, big data processing, Natural Language Processing (NLP), computer vision, or AI applications in hybrid cloud environments.

| Product Specifications | H100 SXM | H100 NVL |
| --- | --- | --- |
| FP64 | 34 teraFLOPS | 30 teraFLOPS |
| FP64 Tensor Core | 67 teraFLOPS | 60 teraFLOPS |
| FP32 | 67 teraFLOPS | 60 teraFLOPS |
| TF32 Tensor Core* | 989 teraFLOPS | 835 teraFLOPS |
| BFLOAT16 Tensor Core* | 1,979 teraFLOPS | 1,671 teraFLOPS |
| FP16 Tensor Core* | 1,979 teraFLOPS | 1,671 teraFLOPS |
| FP8 Tensor Core* | 3,958 teraFLOPS | 3,341 teraFLOPS |
| INT8 Tensor Core* | 3,958 TOPS | 3,341 TOPS |
| GPU Memory | 80GB | 94GB |
| GPU Memory Bandwidth | 3.35TB/s | 3.9TB/s |
| Decoders | 7 NVDEC, 7 JPEG | 7 NVDEC, 7 JPEG |
| Max Thermal Design Power (TDP) | Up to 700W (configurable) | 350–400W (configurable) |
| Multi-Instance GPUs (MIG) | Up to 7 MIGs @ 10GB each | Up to 7 MIGs @ 12GB each |
| Form Factor | SXM | PCIe, dual-slot air-cooled |
| Interconnect | NVIDIA NVLink™: 900GB/s; PCIe Gen5: 128GB/s | NVIDIA NVLink: 600GB/s; PCIe Gen5: 128GB/s |
| Server Options | NVIDIA HGX H100 Partner and NVIDIA-Certified Systems™ with 4 or 8 GPUs; NVIDIA DGX H100 with 8 GPUs | Partner and NVIDIA-Certified Systems with 1–8 GPUs |
| NVIDIA AI Enterprise | Optional Add-on | Included |

Figures marked * are with sparsity.

Table 1.1: Specification Table of the Two H100 Chip Form Factors – H100 SXM and H100 NVL

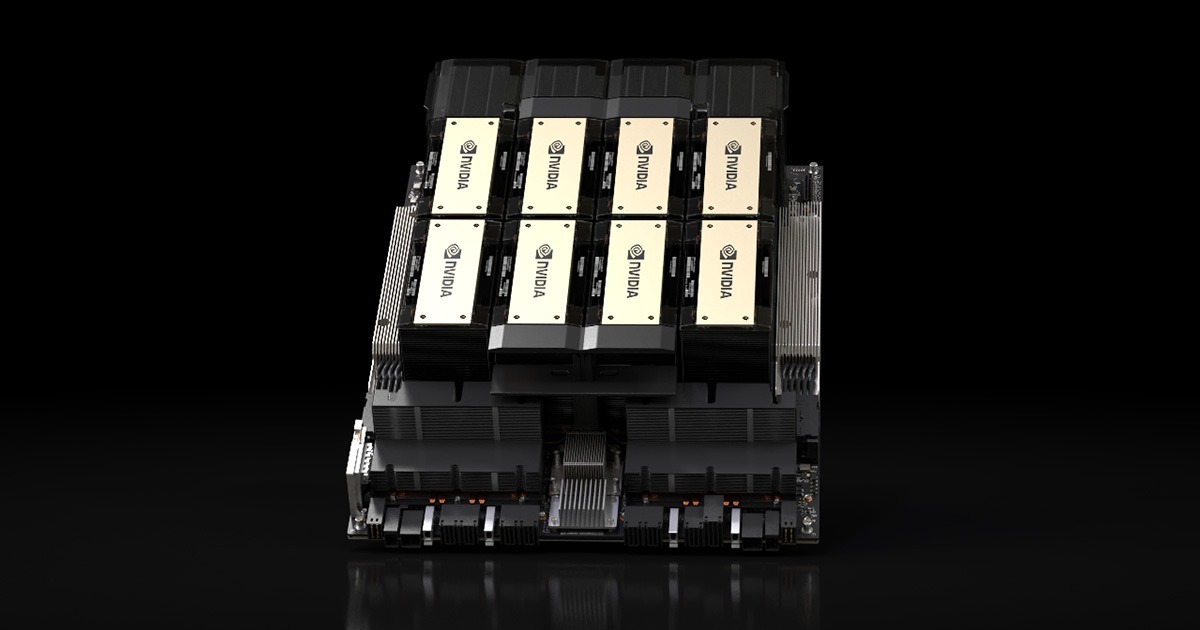

1.2 Information about the NVIDIA H200 Chip (NVIDIA H200 Tensor Core GPU)

Building on the Hopper™ architecture, the NVIDIA H200 Tensor Core GPU is a powerful upgrade to the H100. At its launch in November 2023, NVIDIA introduced it as the world's most powerful AI chip, claiming up to twice the H100's performance on large language model inference. The H200 is designed to handle even larger and more complex AI models, especially generative AI models and large language models (LLMs).

Similar to the H100 superchip, NVIDIA also offers two different form factors for its H200 Tensor Core product, both designed for enterprise use: the H200 SXM and H200 NVL versions.

- NVIDIA H200 SXM: Designed to accelerate generative AI tasks and high-performance computing (HPC), especially with the capability to process massive amounts of data. This is the ideal choice for dedicated systems, supercomputers, and large AI data centers aiming to fully leverage the GPU’s potential with maximum NVLink scalability. Enterprises should use the H200 SXM for scenarios such as training extremely large AI models, HPC applications requiring large memory, and enterprise-level generative AI deployment.

- NVIDIA H200 NVL: Optimized to bring AI acceleration capabilities to standard enterprise servers, easily integrating into existing infrastructure. This version is particularly suitable for enterprises with space constraints needing air-cooled rack designs with flexible configurations, delivering acceleration for all AI and HPC workloads regardless of scale. Use cases for H200 NVL in enterprises include real-time AI inference, AI deployment in hybrid cloud environments, big data processing, and natural language processing (NLP).

| Product Specifications | H200 SXM | H200 NVL |
| --- | --- | --- |
| FP64 | 34 TFLOPS | 30 TFLOPS |
| FP64 Tensor Core | 67 TFLOPS | 60 TFLOPS |
| FP32 | 67 TFLOPS | 60 TFLOPS |
| TF32 Tensor Core² | 989 TFLOPS | 835 TFLOPS |
| BFLOAT16 Tensor Core² | 1,979 TFLOPS | 1,671 TFLOPS |
| FP16 Tensor Core² | 1,979 TFLOPS | 1,671 TFLOPS |
| FP8 Tensor Core² | 3,958 TFLOPS | 3,341 TFLOPS |
| INT8 Tensor Core² | 3,958 TOPS | 3,341 TOPS |
| GPU Memory | 141GB | 141GB |
| GPU Memory Bandwidth | 4.8TB/s | 4.8TB/s |
| Decoders | 7 NVDEC, 7 JPEG | 7 NVDEC, 7 JPEG |
| Confidential Computing | Supported | Supported |
| Max Thermal Design Power (TDP) | Up to 700W (configurable) | Up to 600W (configurable) |
| Multi-Instance GPUs (MIG) | Up to 7 MIGs @ 18GB each | Up to 7 MIGs @ 16.5GB each |
| Form Factor | SXM | PCIe, dual-slot air-cooled |
| Interconnect | NVIDIA NVLink™: 900GB/s; PCIe Gen5: 128GB/s | 2- or 4-way NVIDIA NVLink bridge: 900GB/s per GPU; PCIe Gen5: 128GB/s |
| Server Options | NVIDIA HGX™ H200 Partner and NVIDIA-Certified Systems™ with 4 or 8 GPUs | NVIDIA MGX™ H200 NVL Partner and NVIDIA-Certified Systems with up to 8 GPUs |
| NVIDIA AI Enterprise | Add-on | Included |

Figures marked ² are with sparsity.

Table 1.2: Technical specifications of the two form factors, H200 SXM and H200 NVL
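To make the memory figures in Tables 1.1 and 1.2 concrete, the Python sketch below estimates whether a model's weights alone fit on a single GPU. It is a rough rule of thumb rather than a sizing tool: it assumes decimal gigabytes, counts only the weights (parameter count times bytes per parameter), and ignores the KV cache, activations, optimizer state, and framework overhead, all of which add to the real footprint. The 70-billion-parameter model and the helper names `weights_gb` and `fits` are hypothetical, chosen only for illustration.

```python
# Rough, weights-only estimate of whether a model fits in one GPU's memory.
# Assumptions: 1 GB = 1e9 bytes; KV cache, activations, optimizer state and
# framework overhead are ignored, so real requirements are higher.
BYTES_PER_PARAM = {"fp32": 4, "bf16": 2, "fp16": 2, "fp8": 1}

# GPU memory capacities (GB) as listed in Tables 1.1 and 1.2.
GPU_MEMORY_GB = {"H100 SXM": 80, "H100 NVL": 94, "H200 SXM": 141, "H200 NVL": 141}


def weights_gb(num_params: float, dtype: str) -> float:
    """Memory needed just to hold the model weights, in GB."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def fits(num_params: float, dtype: str, gpu: str) -> bool:
    """True if the weights alone fit on a single GPU of the given type."""
    return weights_gb(num_params, dtype) <= GPU_MEMORY_GB[gpu]


params = 70e9  # hypothetical 70-billion-parameter model
for gpu in GPU_MEMORY_GB:
    need = weights_gb(params, "bf16")
    print(f"{gpu}: {need:.0f}GB of BF16 weights, fits on one GPU: {fits(params, 'bf16', gpu)}")
```

Under these assumptions, a 70B-parameter model in BF16 needs about 140GB for its weights, which exceeds the 80GB and 94GB of the H100 variants but just fits in the 141GB of an H200. That margin is too thin for real serving workloads, so such a model would likely still be quantized (for example to FP8) or split across several GPUs.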

1.3 Detailed Comparison Between NVIDIA H100 and NVIDIA H200 Superchips

Based on the specifications of the two NVIDIA products, the H100 (SXM and NVL) and the H200 (SXM and NVL), compiled by FPT Cloud, here is a detailed side-by-side comparison for your reference:

| Features | NVIDIA H100 (SXM) | NVIDIA H100 (NVL) | NVIDIA H200 (SXM) | NVIDIA H200 (NVL) |
| --- | --- | --- | --- | --- |
| Architecture | Hopper™ | Hopper™ | Inherited and evolved from Hopper™ | Inherited and evolved from Hopper™ |
| Manufacturing Process | TSMC N4 (80 billion transistors) | TSMC N4 (80 billion transistors) | An upgraded version of H100 | An upgraded version of H100 |
| FP64 | 34 TFLOPS | 30 TFLOPS | 34 TFLOPS | 30 TFLOPS |
| FP64 Tensor Core | 67 TFLOPS | 60 TFLOPS | 67 TFLOPS | 60 TFLOPS |
| FP32 | 67 TFLOPS | 60 TFLOPS | 67 TFLOPS | 60 TFLOPS |
| TF32 Tensor Core | 989 TFLOPS | 835 TFLOPS | 989 TFLOPS | 835 TFLOPS |
| BFLOAT16 Tensor Core | 1,979 TFLOPS | 1,671 TFLOPS | 1,979 TFLOPS | 1,671 TFLOPS |
| FP16 Tensor Core | 1,979 TFLOPS | 1,671 TFLOPS | 1,979 TFLOPS | 1,671 TFLOPS |
| FP8 Tensor Core | 3,958 TFLOPS | 3,341 TFLOPS | 3,958 TFLOPS | 3,341 TFLOPS |
| INT8 Tensor Core | 3,958 TOPS | 3,341 TOPS | 3,958 TOPS | 3,341 TOPS |
| GPU Memory | 80GB | 94GB | 141GB | 141GB |
| GPU Memory Bandwidth | 3.35TB/s | 3.9TB/s | 4.8TB/s | 4.8TB/s |
| Decoders | 7 NVDEC, 7 JPEG | 7 NVDEC, 7 JPEG | 7 NVDEC, 7 JPEG | 7 NVDEC, 7 JPEG |
| Confidential Computing | Not listed in Table 1.1 | Not listed in Table 1.1 | Supported | Supported |
| Max Thermal Design Power (TDP) | Up to 700W (configurable) | 350–400W (configurable) | Up to 700W (configurable) | Up to 600W (configurable) |
| Multi-Instance GPUs (MIG) | Up to 7 MIG partitions @ 10GB each | Up to 7 MIG partitions @ 12GB each | Up to 7 MIG partitions @ 18GB each | Up to 7 MIG partitions @ 16.5GB each |
| Form Factor | SXM | PCIe, dual-slot air-cooled | SXM | PCIe, dual-slot air-cooled |
| Interconnect | NVIDIA NVLink™: 900GB/s; PCIe Gen5: 128GB/s | NVIDIA NVLink: 600GB/s; PCIe Gen5: 128GB/s | NVIDIA NVLink™: 900GB/s; PCIe Gen5: 128GB/s | 2- or 4-way NVIDIA NVLink bridge: 900GB/s per GPU; PCIe Gen5: 128GB/s |
| Server Options | NVIDIA HGX H100 Partner and NVIDIA-Certified Systems™ with 4 or 8 GPUs; NVIDIA DGX H100 with 8 GPUs | Partner and NVIDIA-Certified Systems with 1–8 GPUs | NVIDIA HGX™ H200 Partner and NVIDIA-Certified Systems™ with 4 or 8 GPUs | NVIDIA MGX™ H200 NVL Partner and NVIDIA-Certified Systems with up to 8 GPUs |
| NVIDIA AI Enterprise | Add-on | Included | Add-on | Included |

TF32, BFLOAT16, FP16, FP8, and INT8 Tensor Core figures are with sparsity.

Table 1.3: Detailed comparison between NVIDIA H100 (SXM and NVL) and NVIDIA H200 (SXM and NVL)
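One pattern stands out in the comparison table: the compute rows (FP64 through INT8) are identical for each H100/H200 pair, so the H200's gains come from memory capacity and bandwidth. The short Python sketch below quantifies this using the values listed in the table. The `specs` dictionary and the `ratio` helper are illustrative names, not part of any NVIDIA or FPT tool.

```python
# Memory and MIG figures copied from the comparison table in this article.
specs = {
    "H100 SXM": {"memory_gb": 80, "bandwidth_tb_s": 3.35, "mig_slices": 7, "mig_gb": 10},
    "H100 NVL": {"memory_gb": 94, "bandwidth_tb_s": 3.9, "mig_slices": 7, "mig_gb": 12},
    "H200 SXM": {"memory_gb": 141, "bandwidth_tb_s": 4.8, "mig_slices": 7, "mig_gb": 18},
    "H200 NVL": {"memory_gb": 141, "bandwidth_tb_s": 4.8, "mig_slices": 7, "mig_gb": 16.5},
}


def ratio(new: str, old: str, key: str) -> float:
    """How many times larger spec `key` is on GPU `new` than on GPU `old`."""
    return specs[new][key] / specs[old][key]


# Generation-over-generation gains for the SXM parts.
for key in ("memory_gb", "bandwidth_tb_s"):
    print(f"H200 SXM vs H100 SXM, {key}: {ratio('H200 SXM', 'H100 SXM', key):.2f}x")

# Total memory allocated when all seven MIG slices are created.
for name, s in specs.items():
    total = s["mig_slices"] * s["mig_gb"]
    print(f"{name}: {s['mig_slices']} x {s['mig_gb']}GB MIG slices = {total}GB of {s['memory_gb']}GB")
```

With these figures, the H200 SXM offers about 1.76x the memory and about 1.43x the memory bandwidth of the H100 SXM, even though its listed peak FLOPS are unchanged. The MIG lines show how much of each GPU's memory the seven listed slices account for, for example 70GB of 80GB on the H100 SXM and 126GB of 141GB on the H200 SXM.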

2. FPT strategically partners with NVIDIA to develop the first AI Factory in Vietnam

The strategic synergy between NVIDIA's technology leadership and FPT's extensive experience in deploying enterprise solutions has created a powerful alliance for developing pioneering AI products for the Vietnamese market. NVIDIA not only supplies its cutting-edge H100 and H200 GPU superchips but also shares deep expertise in AI architecture. Within FPT Corporation, FPT Smart Cloud will be the pioneering unit providing cloud computing and AI services built on this AI factory, enabling Vietnamese enterprises, businesses, and startups to easily access and leverage the power of AI.

Notably, FPT will concentrate on developing generative AI models, offering capabilities for content creation, process automation, and solving complex problems that were previously difficult to address.

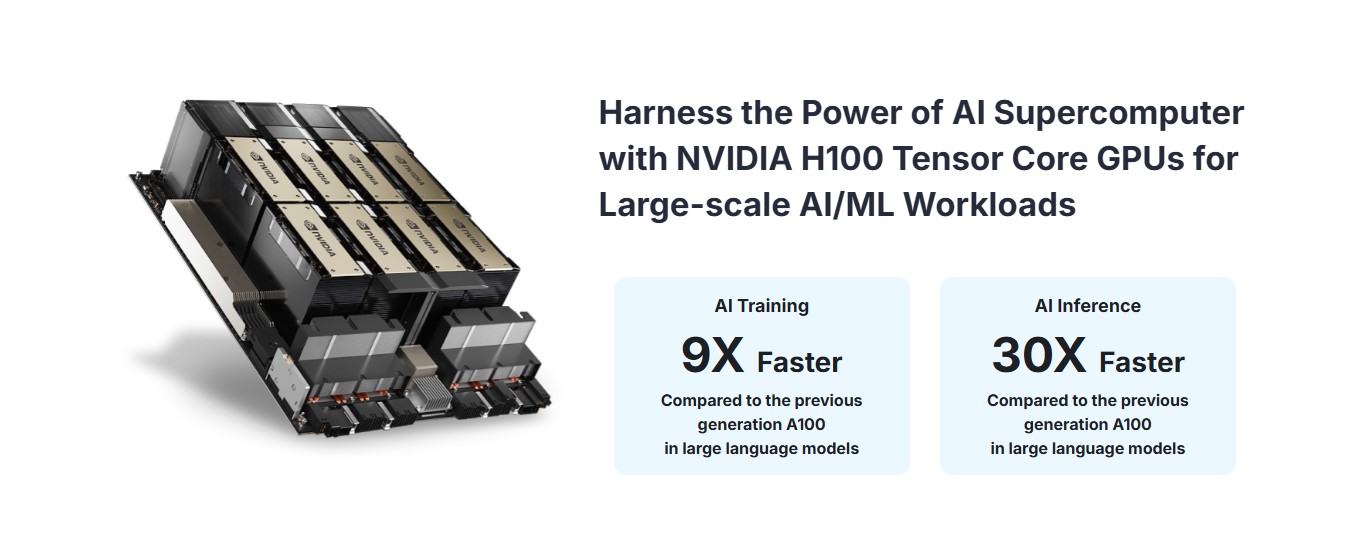

In an era of rapidly advancing AI technologies, B2B enterprises across all sectors, from finance, securities, and insurance to manufacturing and education, face a pressing need for a reliable partner to achieve breakthroughs in digital transformation. FPT AI Factory from FPT Cloud is the optimal solution, offering your business the following outstanding advantages:

- Leading AI Infrastructure: By directly utilizing the NVIDIA H100 and H200 superchips, FPT AI Factory delivers a powerful AI computing platform, ensuring superior performance and speed for all AI tasks.

- Diverse Service Ecosystem: FPT AI Factory is not just hardware but a comprehensive ecosystem designed to support businesses throughout the entire AI solution lifecycle—from development and training to deployment.

- Cost Optimization: Instead of investing millions of dollars in complex AI infrastructure, businesses can leverage FPT AI Factory as a cloud service, optimizing both initial investment and operational costs.

- Security, Compliance, and Integration: FPT is committed to providing a secure AI environment that meets international security standards while also enabling seamless integration with existing enterprise systems.

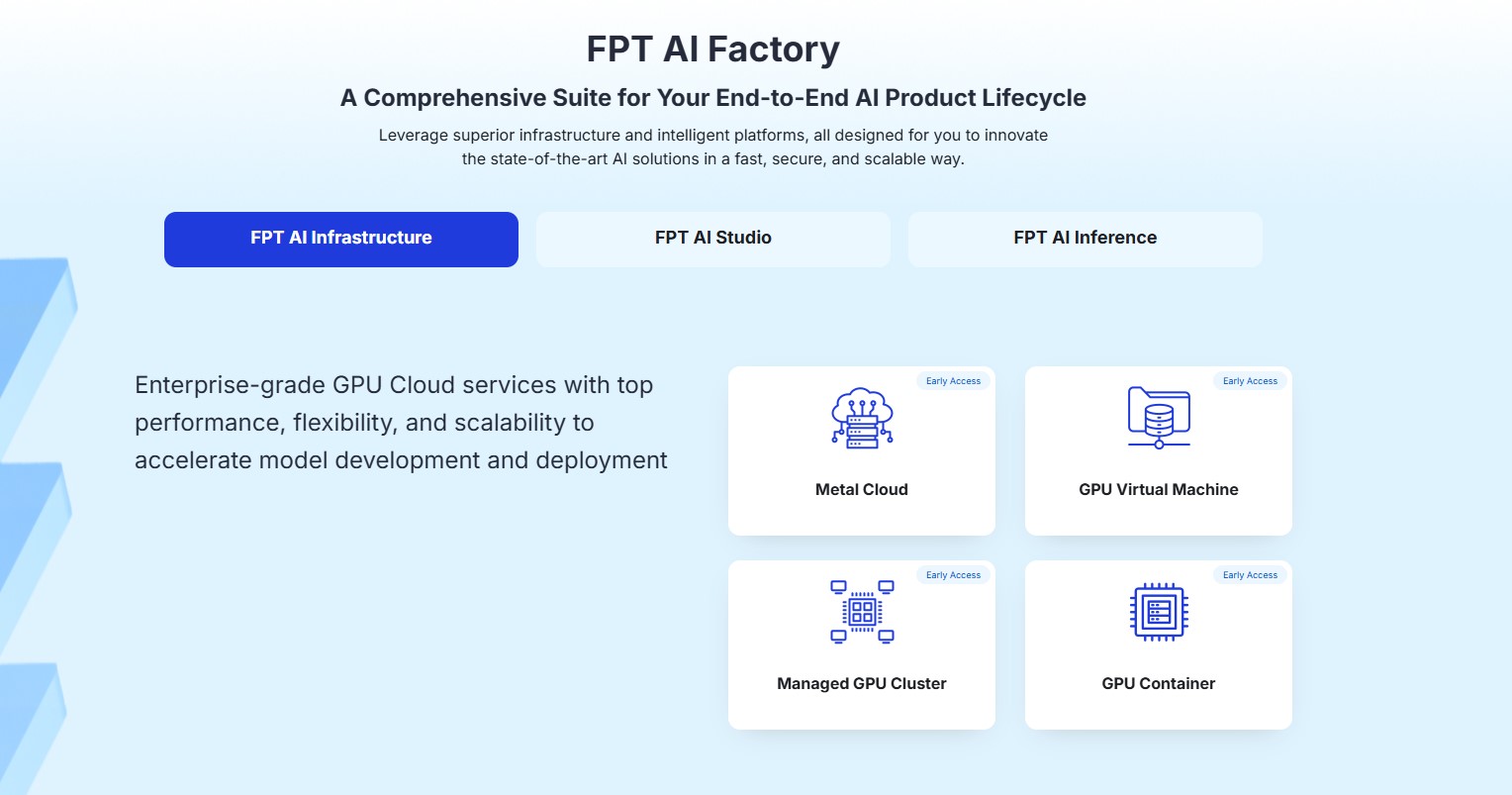

3. Building a Comprehensive FPT AI Factory Ecosystem (FPT AI Infrastructure, FPT AI Studio, and FPT AI Inference) Powered by NVIDIA H100 & H200 Superchips

FPT AI Factory currently offers enterprises a trio of AI solutions built on NVIDIA H100 and NVIDIA H200 superchips:

- FPT AI Infrastructure: the infrastructure product group, providing GPU computing resources for enterprises.

- FPT AI Studio: the platform product group, providing tools and services for building and training AI models.

- FPT AI Inference: the product group for deploying and serving enterprises' AI (Artificial Intelligence) and ML (Machine Learning) models.

Video: FPT’s trio of AI solutions — FPT AI Infrastructure, FPT AI Studio, and FPT AI Inference — enables businesses to build, train, and operate AI solutions simply, easily, and effectively.

3.1 FPT AI Infrastructure Solution

FPT AI Infrastructure is a robust cloud computing platform optimized specifically for AI workloads. It provides the computing power of NVIDIA H100 and H200 GPUs, enabling enterprises to build supercomputing infrastructure, access resources easily to train AI models rapidly, and scale flexibly as needed, using offerings such as Metal Cloud, GPU Virtual Machine, Managed GPU Cluster, and GPU Container.

3.2 The FPT AI Studio Product

Once a business has set up infrastructure with advanced GPUs, the next step is to build and develop its own AI and machine learning models tailored to its specific operational and application needs. FPT AI Studio is the optimal solution for this. It is a comprehensive AI development environment that provides a full suite of tools and services covering the entire process, from data processing and model development through training and evaluation to the deployment of real-world AI/ML models, using technologies such as Data Hub, AI Notebook, Model Pre-training, Model Fine-tuning, and Model Hub.

Register now to start building and deploying AI and Machine Learning models for your business today!

3.3 The FPT AI Inference Service

Once an enterprise's AI or machine learning model has been trained on internal and other key data, deploying and operating it in a real-world environment demands an efficient solution, and FPT AI Inference is the intelligent choice for your business. Optimized for high inference speed and low latency, it uses technologies such as Model Serving and Model-as-a-Service to ensure your AI models run quickly and accurately in real-world applications such as virtual assistants, customer consultation services, recommendation systems, image recognition, and natural language processing. As the final piece of the FPT AI Factory solution suite, it helps enterprises put AI into practice and deliver immediate business value.

4. Exclusive offer for customers registering to experience FPT AI Factory on FPT Cloud

Exclusive incentives from FPT Cloud just for you when you register early to experience the comprehensive AI Factory solution trio: FPT AI Infrastructure, FPT AI Studio, and FPT AI Inference today:

- Priority access to FPT AI Infrastructure services at preferential pricing: Significantly reduce costs while accessing world-class AI infrastructure, tools, and applications—right here in Vietnam.

- Early access to premium features of FPT AI Factory: Ensure your business stays ahead by being among the first to adopt the latest AI technologies and tools in the digital transformation era.

- Receive Cloud credits to explore a diverse AI & Cloud ecosystem: Experience other powerful FPT Cloud solutions that enhance operational efficiency, such as FPT Backup Services, FPT Disaster Recovery, and FPT Object Storage.

- Gain expert consultation from seasoned AI & Cloud professionals: FPT’s AI and Cloud specialists will support your business in applying and operating the FPT AI Factory solution suite effectively, driving immediate business impact.