Practical Guide to Distributed Training Large Language Models (LLMs) with Slurm and LLaMA-Factory on Metal Cloud

1. Overview

This guide provides a comprehensive walkthrough for setting up and running distributed training using LLaMA-Factory on Metal Cloud (Bare Metal Server). We cover environment setup, Slurm-based job scheduling, and performance optimizations.

Additionally, we include a complete training task: full fine-tuning of a Llama-3.1-8B model on the Open Instruct Uncensored Alpaca dataset, a collection of instruction-tuning samples, on 4 nodes with 8 x NVIDIA H100 GPUs per node. It provides hands-on instructions for replicating a real-world training scenario.

The guide sets up a distributed training environment on Metal Cloud using Slurm, an open-source workload manager optimized for high-performance computing. It walks through:

- Preparing the infrastructure with Slurm, CUDA, and NCCL for efficient multi-GPU communication.

- Installing LLaMA-Factory and configuring the system to enable seamless model training.

- Running a fine-tuning task for the LLaMA-3.1-8B model using the Open Instruct Uncensored Alpaca dataset.

- Leveraging Slurm’s job scheduling capabilities to allocate resources and monitor performance efficiently.

Key highlights that readers should focus on:

- Scalability & Efficiency: The guide demonstrates how Slurm distributes a single training job across multiple GPUs and nodes to reduce training time.

- Cost Optimization: Proper job scheduling minimizes idle GPU time, leading to better resource utilization and lower costs.

- Reliability: Automated job resumption, error handling, and real-time system monitoring ensure stable training execution.

- Hands-on Training Example: A real-world fine-tuning scenario is provided, including dataset preparation, YAML-based configuration, and Slurm batch scripting for execution.

By following this guide, readers can replicate the training pipeline and optimize their own LLM training workflows on Metal Cloud.

2. Why Slurm for Distributed Training?

Slurm is a widely used open-source workload manager designed for high-performance computing (HPC) environments. It provides efficient job scheduling, resource allocation, and scalability, making it an excellent choice for AI training on Metal Cloud. Key advantages include:

- Resource Efficiency: Slurm optimally distributes workloads across GPUs and nodes, minimizing idle resources.

- Scalability: Seamlessly scales from a few GPUs to thousands, accommodating diverse AI workloads.

- Job Scheduling: Prioritizes and queues jobs based on defined policies, ensuring fair usage of resources.

3. Use Case

Use case: Training Large Language Models with LLaMA-Factory

One practical application of Slurm on Metal Cloud is training large language models with the LLaMA-Factory framework. By distributing training across multiple GPUs and nodes, Slurm helps reduce training time while keeping execution stable and efficient.

Key Benefits:

- Scalability: Supports large-scale models with efficient GPU utilization.

- Cost Optimization: Reduces cloud computing costs by minimizing idle time.

- Reliability: Automated job resumption and error handling enhance workflow robustness.

4. Prerequisites

Before proceeding, ensure you have the following:

4.1. System Requirements

- Metal Cloud access with multiple GPU-equipped nodes

- Slurm job scheduler installed and configured

- NVIDIA CUDA (11.8+ recommended) installed on all nodes

- NCCL (NVIDIA Collective Communication Library) for multi-GPU communication

- Python 3.8+ installed on all nodes

- PyTorch with distributed training support

- High-performance storage
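A quick pre-flight check can catch a missing tool before a multi-node job fails partway through. The sketch below uses two hypothetical helpers (the names are illustrative, not part of Slurm or LLaMA-Factory): one reports which required commands are missing from PATH, the other checks that the local python3 meets the 3.8 minimum listed above. Run it on every node, for example through srun.

```bash
#!/usr/bin/env bash
# Hypothetical pre-flight helpers; names are illustrative, not part of any tool.

# Print each command from the argument list that is not on PATH.
# Returns 0 only when every command is found.
missing_cmds() {
  local missing=0
  for cmd in "$@"; do
    if ! command -v "$cmd" >/dev/null 2>&1; then
      echo "missing: $cmd"
      missing=1
    fi
  done
  return "$missing"
}

# Succeed if python3 is at least MAJOR.MINOR (e.g. python_at_least 3 8).
python_at_least() {
  python3 -c 'import sys; sys.exit(0 if sys.version_info[:2] >= (int(sys.argv[1]), int(sys.argv[2])) else 1)' "$1" "$2"
}

# Example on a compute node:
#   missing_cmds python3 git nvidia-smi sinfo srun && python_at_least 3 8 && echo "node ready"
```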

4.2. Network & SSH Configuration

To enable seamless multi-node training, ensure:

- Each node can SSH into other nodes without a password using an SSH key.

- Network interfaces allow high-speed inter-node communication (e.g., InfiniBand).

- NCCL and the PyTorch distributed backend can communicate over TCP/IP.

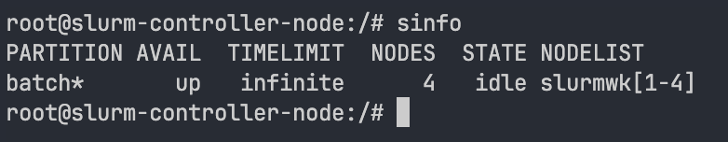

You can verify that all nodes are reachable by Slurm and available using scontrol show nodes or sinfo.
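Since sinfo and scontrol report Slurm's view of the nodes rather than SSH access, it can also help to test passwordless SSH directly. A minimal sketch, assuming OpenSSH on every node; the function name is hypothetical. The BatchMode=yes option makes ssh fail instead of prompting, so any host that still asks for a password is reported as FAIL.

```bash
#!/usr/bin/env bash
# Hypothetical helper: verify key-based (passwordless) SSH to each host given as an argument.
# BatchMode=yes disables password prompts, so hosts that need a password fail fast.
check_passwordless_ssh() {
  local failed=0
  for host in "$@"; do
    if ssh -o BatchMode=yes -o ConnectTimeout=5 "$host" true >/dev/null 2>&1; then
      echo "OK   $host"
    else
      echo "FAIL $host"
      failed=1
    fi
  done
  return "$failed"
}

# Example: check every node Slurm knows about (node-oriented sinfo, deduplicated)
#   check_passwordless_ssh $(sinfo -h -N -o "%N" | sort -u)
```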

5. Environment Setup

Assuming your nodes meet the system requirements above, run the following on each compute node to install LLaMA-Factory and the packages it needs. The deepspeed extra is included because the training configuration in Section 7 uses DeepSpeed:

```bash
python3 -m venv venv
source venv/bin/activate
git clone https://github.com/hiyouga/LLaMA-Factory.git
cd LLaMA-Factory
pip install -e ".[torch,metrics,deepspeed]"
```

6. Sample Training Task: Fine-Tuning LLaMA on Open Instruct Uncensored Alpaca Dataset

To demonstrate a real-world scenario, we will run full fine-tuning of a Llama-3.1-8B model on the Open Instruct Uncensored Alpaca dataset for instruction-following tasks.

6.1. Dataset: Open Instruct Uncensored Alpaca

Open Instruct Uncensored Alpaca is a dataset of instruction-response pairs. It is one of the most common datasets for fine-tuning models for instruction following, and it is publicly available on Hugging Face.

With LLaMA-Factory, you can reference a dataset hosted on a remote repository such as Hugging Face directly in the YAML training configuration, and LLaMA-Factory will download it automatically. For this to work, the dataset must first be defined in LLaMA-Factory/data/dataset_info.json. Add the following entry to that file:

"uncensored_alpaca": {"hf_hub_url": "xzuyn/open-instruct-uncensored-alpaca"}

If you have already downloaded the dataset to the machine, register the local file instead:

"your_dataset_name": {"file_name": "path/to/your/dataset.json"}

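Either entry has to sit inside the top-level JSON object of dataset_info.json, separated from its neighbours by commas; a misplaced comma or brace makes the whole file invalid JSON. A minimal sketch that merges an entry through Python's json module instead of hand-editing, assuming python3 is available (it is a prerequisite above); the function name is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: add or replace one entry in LLaMA-Factory's dataset_info.json.
# Usage: register_dataset <json_file> <dataset_name> <json_object>
register_dataset() {
  python3 - "$1" "$2" "$3" <<'PY'
import json
import sys

path, name, entry = sys.argv[1], sys.argv[2], sys.argv[3]
with open(path, encoding="utf-8") as f:
    info = json.load(f)                     # fails loudly if the file is already invalid
info[name] = json.loads(entry)              # insert or overwrite the dataset entry
with open(path, "w", encoding="utf-8") as f:
    json.dump(info, f, indent=2, ensure_ascii=False)
    f.write("\n")
PY
}

# Example (run from the LLaMA-Factory directory):
#   register_dataset data/dataset_info.json uncensored_alpaca \
#     '{"hf_hub_url": "xzuyn/open-instruct-uncensored-alpaca"}'
```

Existing entries are preserved; only the named key is added or replaced.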
6.2. Model: LLaMA 3.1 8B

The LLaMA 3.1 8B model is one of the latest releases in Meta’s third-generation LLaMA series. It is a lightweight yet powerful large language model designed for both research and enterprise applications.

LLaMA 3.1 8B can be trained efficiently on multi-GPU multi-node Metal Cloud servers using LLaMA-Factory and DeepSpeed. The next sections of this guide will walk you through setting up distributed training for LLaMA 3.1 8B using LLaMA-Factory.
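A rough estimate shows why full fine-tuning at this size needs sharding such as DeepSpeed ZeRO. Using the model-state accounting from the ZeRO paper for mixed-precision Adam (2 bytes per parameter each for bf16 weights and gradients, 12 bytes for fp32 master weights plus the two Adam moments), and taking Ψ ≈ 8 × 10^9 parameters and N = 32 GPUs (4 nodes × 8), the per-GPU memory for model states only, ignoring activations and temporary buffers, is approximately:

```latex
\begin{aligned}
M_{\text{no sharding}} &= (2 + 2 + 12)\,\Psi = 16 \times 8\times10^{9}\ \text{B} = 128\ \text{GB per GPU} \\
M_{\text{ZeRO-2}} &= 2\Psi + \frac{(2 + 12)\,\Psi}{N} = 16\ \text{GB} + \frac{112\ \text{GB}}{32} = 19.5\ \text{GB per GPU}
\end{aligned}
```

Assuming 80 GB H100s, the unsharded figure does not fit on one GPU, while ZeRO stage 2 (selected in the Section 7 configuration through examples/deepspeed/ds_z2_config.json) shards gradients and optimizer states across the 32 GPUs and leaves most of each GPU's memory for activations. Treat these as order-of-magnitude figures, not measurements.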

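To download the model from Hugging Face ahead of time, use the command below. Meta's Llama repositories are gated, so you first need to accept the model license on its Hugging Face page and authenticate with huggingface-cli login:

```bash
huggingface-cli download meta-llama/Llama-3.1-8B --local-dir=Llama-3.1-8B
```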
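An interrupted download can leave a directory that looks complete but is missing weight shards. A minimal sketch of a sanity check, assuming the usual Hugging Face layout (config.json plus either model.safetensors or a model.safetensors.index.json whose weight_map names every shard); the function name is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical check: does a local directory contain a complete safetensors checkpoint?
check_model_dir() {
  local dir=$1
  [ -f "$dir/config.json" ] || { echo "missing: config.json"; return 1; }
  if [ -f "$dir/model.safetensors.index.json" ]; then
    # Sharded checkpoint: every shard named in the index must be present
    python3 - "$dir" <<'PY'
import json, os, sys
d = sys.argv[1]
with open(os.path.join(d, "model.safetensors.index.json")) as f:
    shards = sorted(set(json.load(f)["weight_map"].values()))
missing = [s for s in shards if not os.path.isfile(os.path.join(d, s))]
for s in missing:
    print("missing:", s)
sys.exit(1 if missing else 0)
PY
  else
    [ -f "$dir/model.safetensors" ] || { echo "missing: model weights"; return 1; }
  fi
}

# Example after the download above:
#   check_model_dir Llama-3.1-8B && echo "model files complete"
```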

7. Preparing training configuration

LLaMA-Factory uses YAML configuration files to manage training parameters efficiently. A YAML-based configuration simplifies hyperparameter tuning and ensures reproducibility.

This section explains how to prepare a YAML configuration file for fine-tuning the LLaMA 3.1 8B model using LLaMA-Factory.

7.1. Sample YAML Configuration for Fine-Tuning LLaMA 3.1 8B

LLaMA-Factory provides various predefined YAML training configuration files in LLaMA-Factory/examples. Here is a YAML file for full fine-tuning of LLaMA 3.1 8B on the Open Instruct Uncensored Alpaca dataset:

```yaml
# model
model_name_or_path: meta-llama/Llama-3.1-8B
trust_remote_code: true

# method
stage: sft
do_train: true
finetuning_type: full
deepspeed: examples/deepspeed/ds_z2_config.json

# dataset
dataset: uncensored_alpaca
template: llama3
cutoff_len: 2048
max_samples: 500000
overwrite_cache: true
preprocessing_num_workers: 16

# output
output_dir: saves/llama3.1-8b/full/sft
logging_steps: 10
save_steps: 10000
plot_loss: true
overwrite_output_dir: true

# train
per_device_train_batch_size: 4
gradient_accumulation_steps: 2
learning_rate: 1.0e-5
num_train_epochs: 2.5
lr_scheduler_type: cosine
warmup_ratio: 0.1
bf16: true
ddp_timeout: 180000000

# eval
val_size: 0.001
per_device_eval_batch_size: 1
eval_strategy: steps
eval_steps: 10000
```

You can reference the model by its Hugging Face repository ID:

model_name_or_path: meta-llama/Llama-3.1-8B

and LLaMA-Factory will download the model automatically before training. If the model is already on the machine, specify its local path instead:

model_name_or_path: path/to/your/model

To train on the Open Instruct Uncensored Alpaca dataset, refer to it by the name registered in dataset_info.json:

dataset: uncensored_alpaca

We cap the number of training samples at 500,000 with:

max_samples: 500000

You can adjust the remaining options as needed.
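Before launching, it can be worth checking what these settings imply for a 4-node, 8-GPU-per-node run. The sketch below is plain shell arithmetic with values copied from the YAML; the step count is an approximation that assumes the dataset has at least max_samples rows, treats val_size as removing 0.1% of them, and ignores dataloader rounding, so LLaMA-Factory's own count may differ slightly.

```bash
#!/usr/bin/env bash
# Rough arithmetic for the YAML configuration above (approximation, not LLaMA-Factory's exact logic).
NNODES=4
NPROC_PER_NODE=8
PER_DEVICE_BS=4          # per_device_train_batch_size
GRAD_ACCUM=2             # gradient_accumulation_steps
MAX_SAMPLES=500000       # max_samples (assumes the dataset is at least this large)
VAL_PERMILLE=1           # val_size: 0.001 expressed in thousandths

# Sequences consumed per optimizer step across the whole cluster
global_batch=$(( NNODES * NPROC_PER_NODE * PER_DEVICE_BS * GRAD_ACCUM ))

# Training rows left after the validation split
train_rows=$(( MAX_SAMPLES - MAX_SAMPLES * VAL_PERMILLE / 1000 ))

# Optimizer steps per epoch (ceiling division), then scaled by num_train_epochs: 2.5
steps_per_epoch=$(( (train_rows + global_batch - 1) / global_batch ))
total_steps=$(( steps_per_epoch * 5 / 2 ))

echo "global batch size:   $global_batch"
echo "steps per epoch:     $steps_per_epoch"
echo "approx. total steps: $total_steps"
```

This gives a global batch size of 256 and roughly 4,880 optimizer steps. By this estimate, save_steps: 10000 and eval_steps: 10000 are larger than the whole run, so no intermediate checkpoint or step-based evaluation would be triggered; lower them if you want mid-run checkpoints.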

8. Configuring Slurm for Multi-Node Training

Assuming your YAML training configuration is saved as llama31_training.yaml, create a Slurm batch script named train_llama.sbatch to train on 4 nodes with 8 GPUs per node:

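```bash
#!/bin/bash
#SBATCH --job-name=multinode-training
#SBATCH --nodes=4
#SBATCH --time=2-00:00:00
#SBATCH --gres=gpu:8
#SBATCH -o training.out
#SBATCH -e training.err
#SBATCH --ntasks=4                    # 4 tasks on 4 nodes: one launcher per node

# Expand the allocated node list; the first node hosts the rendezvous endpoint
nodes=($(scontrol show hostnames "$SLURM_JOB_NODELIST"))
head_node=${nodes[0]}
head_node_ip=$(srun --nodes=1 --ntasks=1 -w "$head_node" hostname --ip-address | cut -d" " -f2)
echo "Master Node IP: $head_node_ip"

export LOGLEVEL=INFO

# Variables that llamafactory-cli reads when it builds its torchrun command
export FORCE_TORCHRUN=1
export NNODES=4
export NPROC_PER_NODE=8
export MASTER_ADDR=$head_node_ip
export MASTER_PORT=29401

export NCCL_IB_DISABLE=0                  # keep InfiniBand enabled
export NCCL_SOCKET_IFNAME=^lo,docker0     # skip loopback and Docker bridge interfaces
export NCCL_TIMEOUT=180000000
export NCCL_DEBUG=INFO
# Newer PyTorch versions name the next two TORCH_NCCL_BLOCKING_WAIT / TORCH_NCCL_ASYNC_ERROR_HANDLING
export NCCL_BLOCKING_WAIT=1               # Ensure NCCL waits for operations to finish
export NCCL_ASYNC_ERROR_HANDLING=1        # Allow handling of NCCL errors asynchronously

# Relative paths resolve against the submission directory; adjust to your layout
source venv/bin/activate

# This script runs only on the first node, so SLURM_NODEID is read inside each srun
# task (single quotes delay expansion) to give every node its own NODE_RANK
srun bash -c 'NODE_RANK=$SLURM_NODEID llamafactory-cli train llama31_training.yaml'
```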

Use sbatch to submit the training job:

```bash
sbatch train_llama.sbatch
```


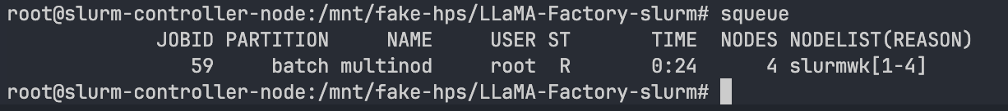
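When submitting from a script, sbatch --parsable prints only the job ID (optionally followed by ";cluster"), which makes the ID easy to reuse with squeue or scancel. A small sketch with a hypothetical wrapper name:

```bash
#!/usr/bin/env bash
# Hypothetical wrapper: submit the batch script and print only the numeric job ID.
submit_training() {
  local script=${1:-train_llama.sbatch}
  local job_id
  job_id=$(sbatch --parsable "$script") || return 1
  echo "${job_id%%;*}"     # drop an optional ";cluster" suffix
}

# Example:
#   JOB_ID=$(submit_training train_llama.sbatch)
#   squeue -j "$JOB_ID"      # queue state of this job only
#   scancel "$JOB_ID"        # cancel it if something looks wrong
```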

Check the job's state in the queue with squeue:

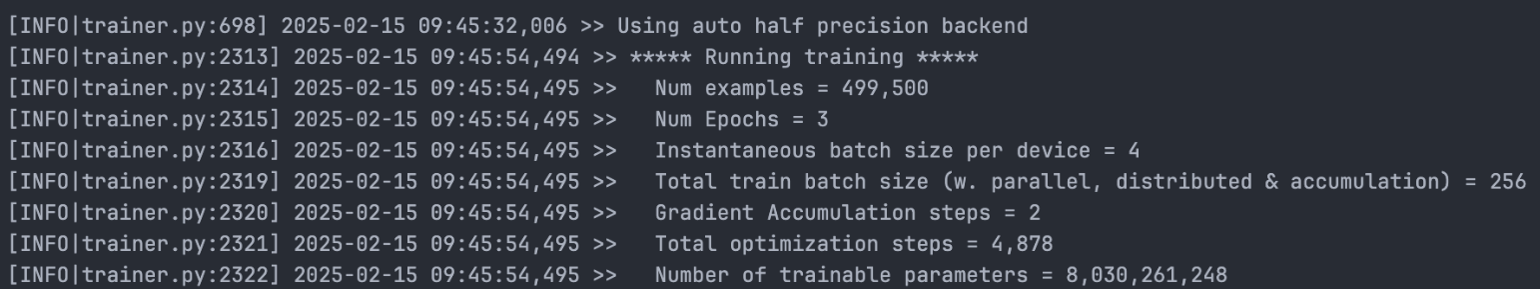

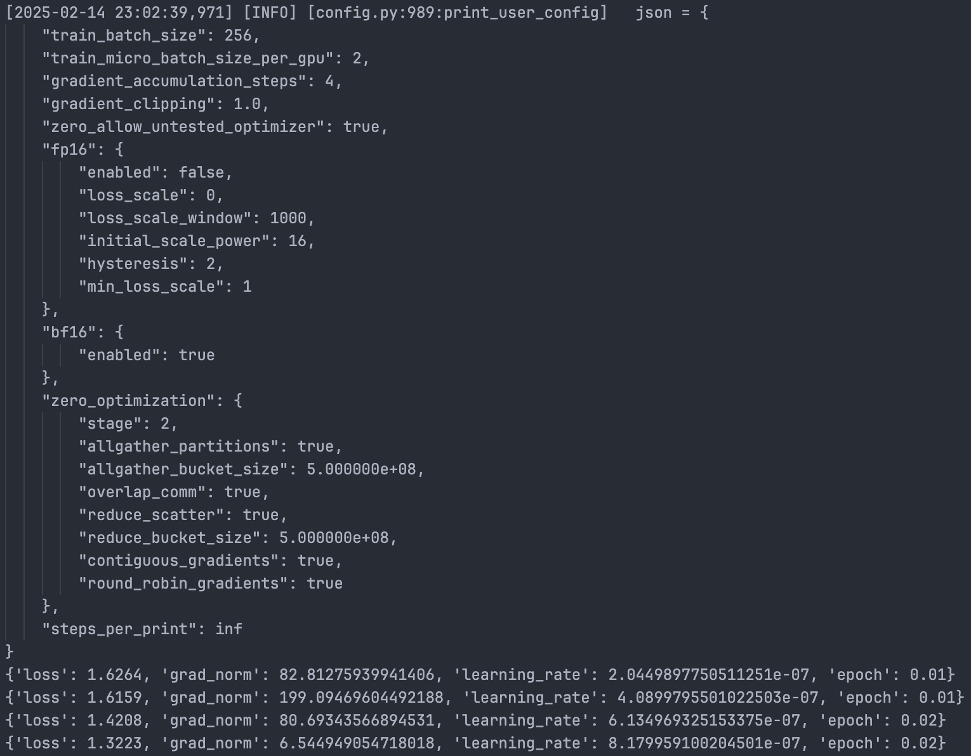

Inspect the training.out and training.err files to follow the training progress:

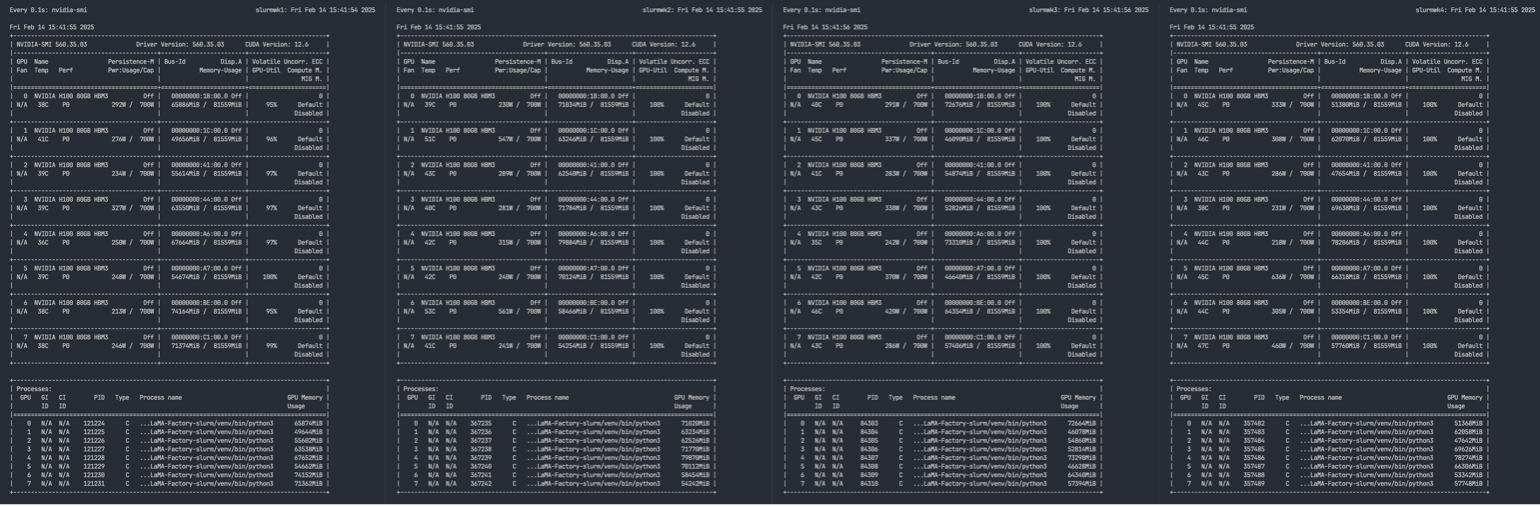
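To follow the loss without scrolling through the full logs, you can extract the periodic metric lines. A minimal sketch, assuming the logs contain Hugging Face Trainer-style dictionaries such as {'loss': ..., 'learning_rate': ..., 'epoch': ...}; which of training.out or training.err holds them may depend on your setup, and the function name is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print "epoch loss" pairs from Trainer-style log lines such as
#   {'loss': 1.2345, 'grad_norm': 0.5, 'learning_rate': 1e-05, 'epoch': 0.01}
# Lines with 'eval_loss' or 'train_loss' are skipped because they lack a bare 'loss' key.
loss_curve() {
  grep -h "'loss':" "$@" \
    | sed -n "s/.*'loss': \([0-9.eE+-]*\).*'epoch': \([0-9.eE+-]*\).*/\2 \1/p"
}

# Example: the ten most recent logged points
#   loss_curve training.out training.err | tail -n 10
```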

All 4 nodes are fully utilized.

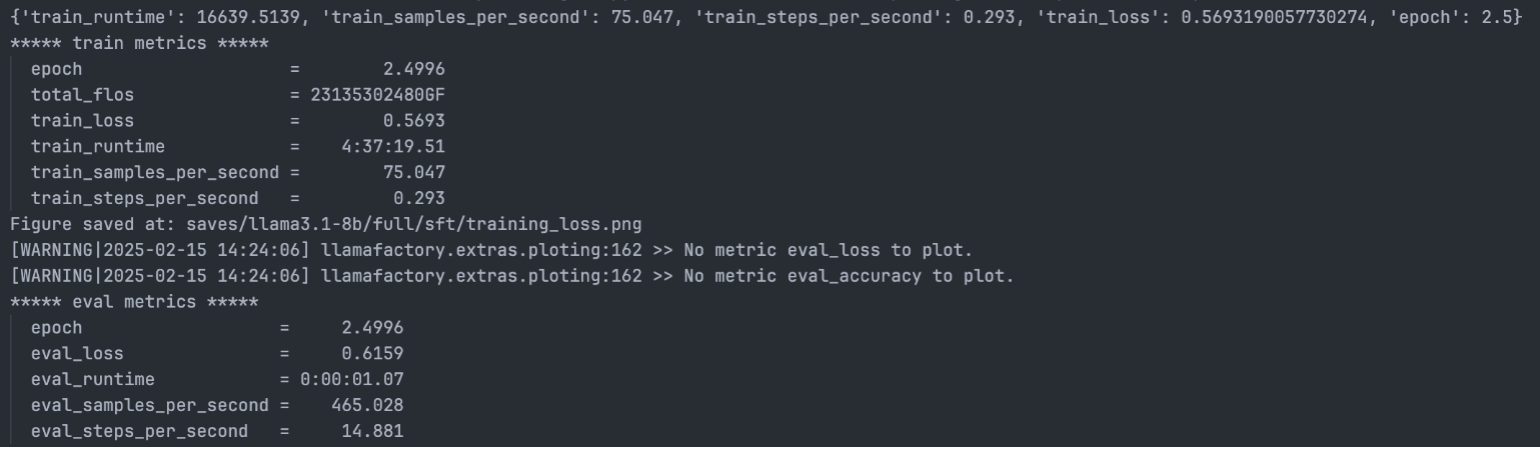

The final result is shown below:

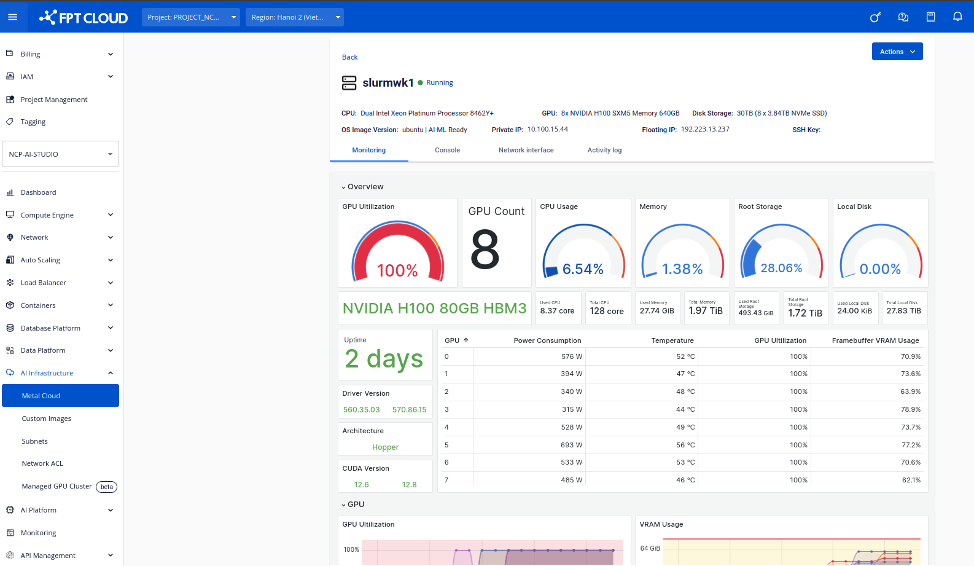

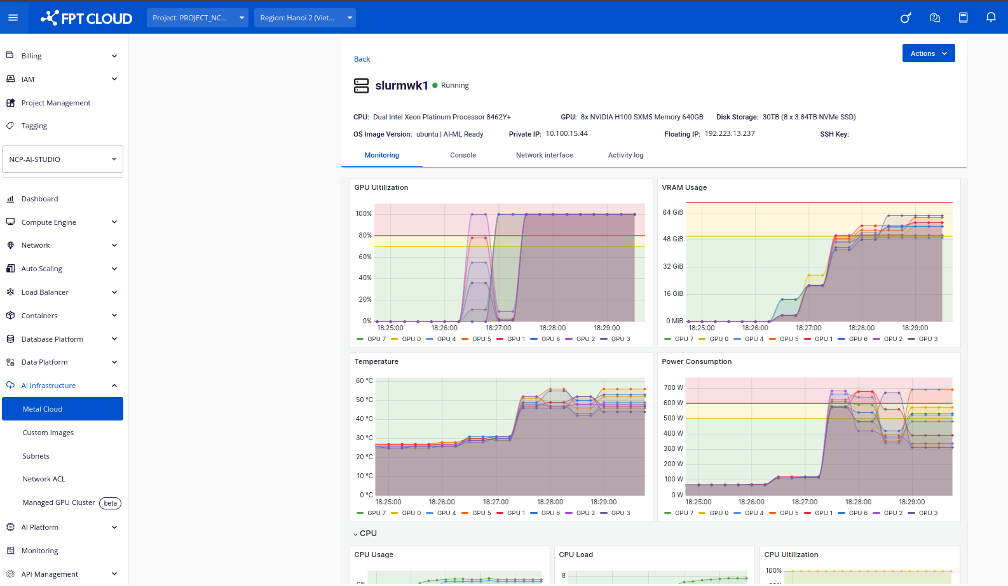

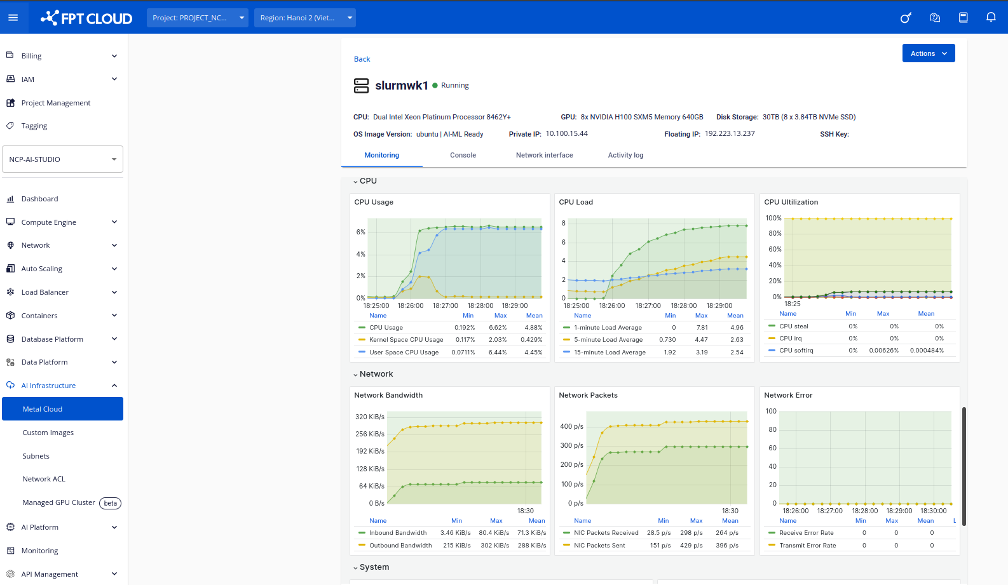

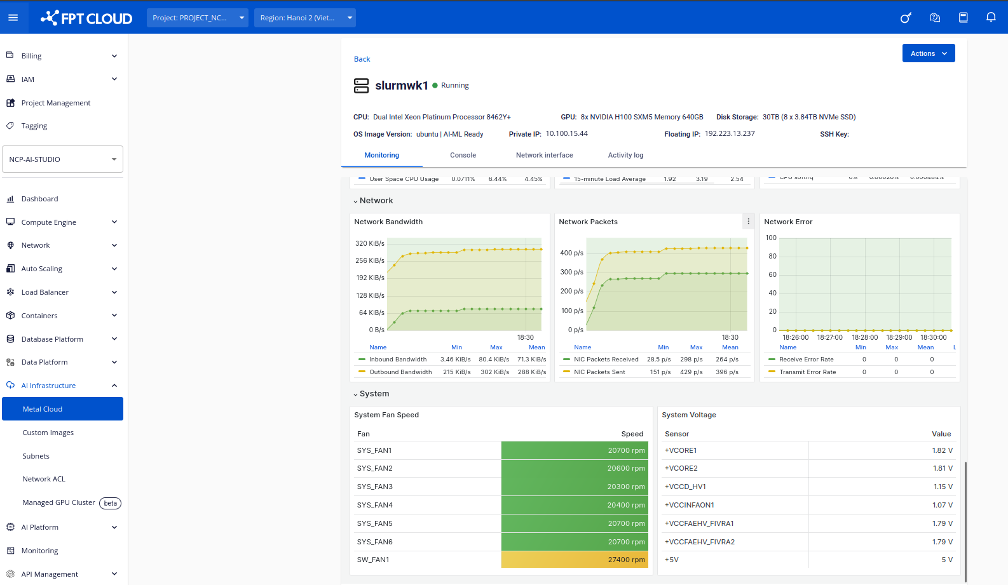

9. Monitoring CPU and GPU Usage During Training

When training large-scale models like LLaMA 3.1 8B on Metal Cloud, it is important to monitor system resources such as CPU, GPU, memory, and disk usage. Proper monitoring helps in:

- Detecting bottlenecks (e.g., underutilized GPUs, CPU overload).

- Optimizing resource allocation (e.g., adjusting batch sizes).

- Avoiding system crashes due to out-of-memory (OOM) errors.

Bare Metal provides a monitoring page where users can track real-time hardware usage, including:

- GPU Utilization – See how much each GPU is being used.

- VRAM Usage – Check memory consumption per GPU.

- CPU Load – Monitor processor usage across nodes.

- Disk & Network Stats – Identify I/O bottlenecks.

Users can access the monitoring page via their Metal Cloud dashboard to ensure efficient and stable training.
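Alongside the dashboard, nvidia-smi can report the same GPU metrics from a shell on each node. A sketch, assuming nvidia-smi is installed on the GPU nodes: the query in the usage comment uses standard nvidia-smi options, while the flag_idle_gpus filter and its 50% default threshold are hypothetical choices for spotting underutilized GPUs.

```bash
#!/usr/bin/env bash
# Hypothetical filter: read "index, utilization.gpu, memory.used, memory.total" CSV rows
# (as produced by the nvidia-smi query below) and print GPUs below a utilization threshold.
flag_idle_gpus() {
  local threshold=${1:-50}   # percent; illustrative default
  awk -F', *' -v t="$threshold" '$2 + 0 < t { printf "GPU %s at %s%% (%s / %s MiB)\n", $1, $2, $3, $4 }'
}

# Example on a GPU node during training:
#   nvidia-smi --query-gpu=index,utilization.gpu,memory.used,memory.total \
#              --format=csv,noheader,nounits | flag_idle_gpus 50
```

An empty result means every GPU on that node is above the threshold.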

Conclusion

This guide provides a structured approach to setting up distributed training for LLaMA-Factory on Metal Cloud. We covered:

- Environment setup

- Slurm job submission

- Distributed training with LLaMA-Factory and DeepSpeed

- Optimizations for large-scale models

Following these steps, you can fine-tune LLaMA models efficiently on Metal Cloud multi-node GPU clusters.

Metal Cloud is now available on FPT AI Factory for reservation. Find out more at: https://aifactory.fptcloud.com/

For more information and consultancy, please contact:

- Hotline: 1900 638 399

- Support: m.me/fptsmartcloud