LLaMA Factory: A Feature-Rich Toolkit for Accessible LLM Customization

As large language models (LLMs) and vision-language models (VLMs) become increasingly essential in modern AI applications, the ability to fine-tune these models on custom datasets has never been more important. However, for many developers, especially those without a deep background in machine learning, existing frameworks can be overwhelming, requiring heavy coding and complex configurations.

LLaMA Factory is an open-source toolkit designed to make LLM fine-tuning accessible to everyone. Whether you're a beginner, a non-technical professional, or an organization seeking an efficient model customization solution, LLaMA Factory simplifies the entire process with an intuitive web interface and support for dozens of fine-tuning strategies.

In this article, we’ll explore what makes LLaMA Factory stand out, who can benefit from it, and how it compares with other popular frameworks.

Who Should Use LLaMA Factory?

LLaMA Factory is ideal for:

- 🧑💻 Beginner developers experimenting with LLMs

- 📊 Data analysts and researchers without ML expertise

- 🧠 AI enthusiasts working on personal or community projects

- 🏢 Small teams or startups without ML engineering bandwidth

If you want to fine-tune powerful open-source models like LLaMA or Mistral on your own dataset without writing a line of code, this tool is built for you.

What Does LLaMA Factory Offer?

LLaMA Factory is an open-source project that provides a comprehensive set of tools and scripts for fine-tuning, serving, and benchmarking LLMs and VLMs. Through its built-in web UI, LlamaBoard, it lets you flexibly customize the fine-tuning of 100+ LLMs and VLMs without writing code.

The LLaMA-Factory repository makes it easy to get started with large models by providing:

- Scripts for data preprocessing and tokenization tasks

- Training pipelines for fine-tuning models

- Inference scripts for generating text with trained models

- Benchmarking tools to evaluate model performance

- Gradio web UI for interactive testing and training

LLaMA Factory is designed specifically for beginners and non-technical professionals who want to fine-tune open-source LLMs on their custom datasets without learning complex concepts of AI. Users simply select a model, upload their dataset, and adjust a few parameters to initiate the training process.

Once training is complete, the same web application can be used to test the model before exporting it to Hugging Face or saving it locally. This provides a fast and efficient way to fine-tune LLMs in a local environment.

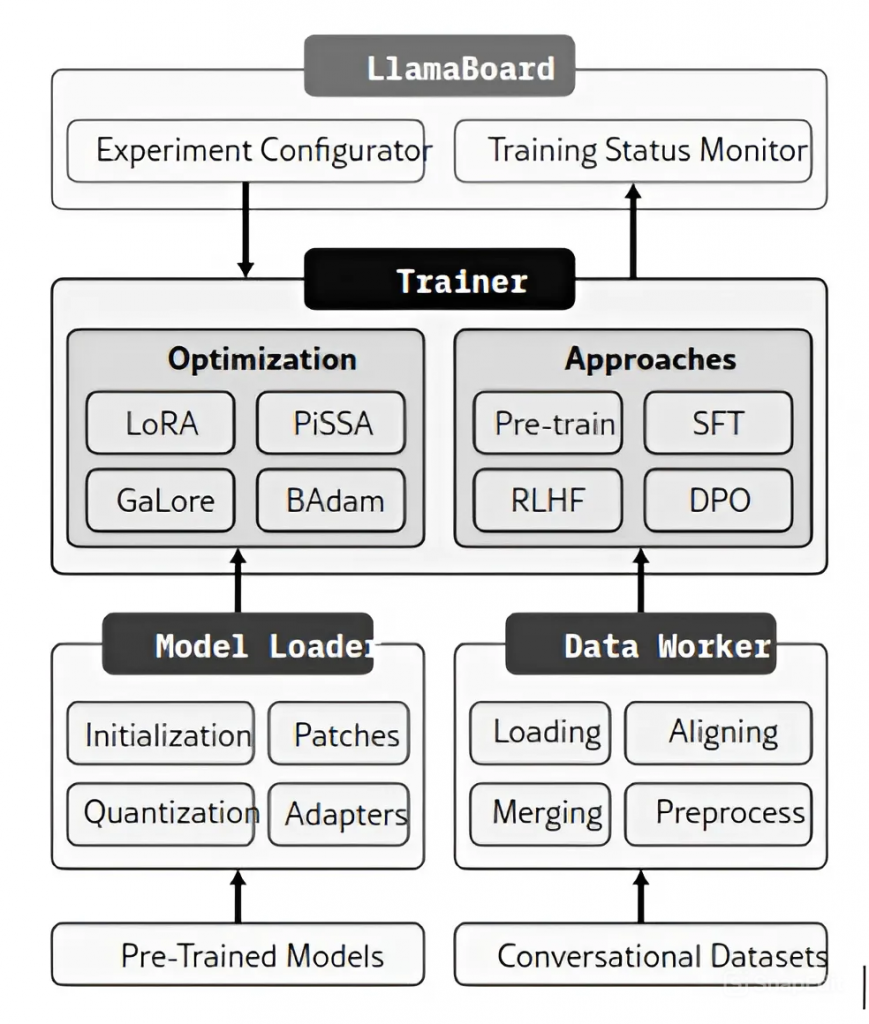

Figure: LLaMA Factory Architecture

Comparing Feature Support Across LLM Training Frameworks

Here's how LLaMA Factory stacks up against other popular LLM fine-tuning frameworks: FastChat, LitGPT, LMFlow, and Open-Instruct.

| | LLaMA Factory | FastChat | LitGPT | LMFlow | Open-Instruct |
|---|---|---|---|---|---|
| LoRA | ✓ | ✓ | ✓ | ✓ | ✓ |
| QLoRA | ✓ | ✓ | ✓ | ✓ | ✓ |
| DoRA | ✓ | | | | |
| LoRA+ | ✓ | | | | |
| PiSSA | ✓ | | | | |
| GaLore | ✓ | ✓ | ✓ | ✓ | |
| BAdam | ✓ | | | | |
| Flash attention | ✓ | ✓ | ✓ | ✓ | ✓ |
| S2 attention | ✓ | | | | |
| Unsloth | ✓ | ✓ | | | |
| DeepSpeed | ✓ | ✓ | ✓ | ✓ | ✓ |
| SFT | ✓ | ✓ | ✓ | ✓ | ✓ |
| RLHF | ✓ | ✓ | | | |
| DPO | ✓ | ✓ | | | |
| KTO | ✓ | | | | |
| ORPO | ✓ | | | | |

Table 1: Feature comparison between LLaMA Factory and other popular LLM fine-tuning frameworks.

Note: While most frameworks are built on PyTorch and have similar hardware requirements, LLaMA Factory differentiates itself through its ease of use, wide feature support, and strong community. It stands out with extensive support for multiple fine-tuning techniques, including LoRA, QLoRA, DoRA, PiSSA, and more, providing users with flexibility to optimize models based on their specific needs.

Fine-Tuning Techniques Supported

| | Freeze-tuning | GaLore | LoRA | DoRA | LoRA+ | PiSSA |
|---|---|---|---|---|---|---|
| Mixed precision | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| Checkpointing | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| Flash attention | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| S2 attention | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| Quantization | ✗ | ✗ | ✓ | ✓ | ✓ | ✓ |
| Unsloth | ✗ | ✗ | ✓ | ✓ | ✓ | ✓ |

Table 2: Compatibility between the fine-tuning techniques featured in LLaMA Factory.
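One way to read Table 2 is as a lookup from each feature to the tuning methods it can be combined with. The sketch below simply transcribes the table into a Python dictionary and checks a planned setup against it. The names `COMPATIBLE` and `incompatible_features` are made up for this illustration and are not part of LLaMA Factory.

```python
# Transcribed from Table 2: for each feature, the tuning methods it can be combined with.
ALL_METHODS = {"freeze", "galore", "lora", "dora", "lora+", "pissa"}
LORA_FAMILY = {"lora", "dora", "lora+", "pissa"}

COMPATIBLE = {
    "mixed precision": ALL_METHODS,
    "checkpointing": ALL_METHODS,
    "flash attention": ALL_METHODS,
    "s2 attention": ALL_METHODS,
    "quantization": LORA_FAMILY,
    "unsloth": LORA_FAMILY,
}


def incompatible_features(method, features):
    """Return the requested features that Table 2 marks as unsupported for a method."""
    method = method.lower()
    if method not in ALL_METHODS:
        raise ValueError(f"unknown tuning method: {method}")
    return [f for f in features if method not in COMPATIBLE[f.lower()]]


print(incompatible_features("GaLore", ["Flash attention", "Quantization"]))  # ['Quantization']
print(incompatible_features("LoRA", ["Quantization", "Unsloth"]))            # []
```

In other words, quantization and Unsloth are only available with the LoRA-style adapters, while the other four features work with every tuning method in the table.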

Quick Overview of Techniques

- Freeze-tuning: freezes the majority of parameters and fine-tunes only the remaining parameters in a small subset of decoder layers.

- GaLore (Gradient Low-Rank Projection): projects gradients into a lower-dimensional space, enabling full-parameter learning in a memory-efficient manner.

- LoRA (Low-Rank Adaptation): freezes all pre-trained weights and adds a pair of trainable low-rank matrices to each designated layer.

- QLoRA: combines LoRA with quantization to reduce memory usage.

- DoRA (Weight-Decomposed Low-Rank Adaptation): decomposes pre-trained weights into magnitude and direction components and updates the directional component for enhanced performance.

- LoRA+: addresses the sub-optimality of LoRA by using different learning rates for the two adapter matrices.

- PiSSA (Principal Singular Values and Singular Vectors Adaptation): initializes adapters with the principal components of the pre-trained weights for faster convergence.
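To make the low-rank idea behind LoRA concrete, here is a minimal pure-Python sketch. It is not LLaMA Factory's implementation. It only illustrates the standard formulation in which a frozen weight W is combined with a scaled product of two small trainable matrices, W + (alpha / r) · B · A. The toy layer sizes, the rank, and the alpha value are assumptions chosen for illustration.

```python
import random


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def lora_effective_weight(w, b, a, alpha, rank):
    """Return W + (alpha / rank) * B @ A without modifying the frozen W."""
    scale = alpha / rank
    delta = matmul(b, a)
    return [[w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)]
            for w_row, d_row in zip(w, delta)]


def trainable_params(d_out, d_in, rank):
    """Return (full fine-tuning parameter count, LoRA adapter parameter count)."""
    return d_out * d_in, rank * (d_out + d_in)


random.seed(0)
d_out, d_in, rank, alpha = 6, 4, 2, 4  # toy sizes, chosen only for illustration
w = [[random.gauss(0, 1) for _ in range(d_in)] for _ in range(d_out)]    # frozen base weight
a = [[random.gauss(0, 0.01) for _ in range(d_in)] for _ in range(rank)]  # trainable, rank x d_in
b = [[0.0] * rank for _ in range(d_out)]                                 # trainable, d_out x rank, zero init

# With B initialised to zero, the adapted layer starts out identical to the base layer.
assert lora_effective_weight(w, b, a, alpha, rank) == w

full, lora = trainable_params(4096, 4096, 8)
print(f"full: {full:,} params, LoRA (r=8): {lora:,} params")
```

For a single 4096 × 4096 projection at rank 8, the adapter trains 65,536 parameters instead of 16,777,216, which is where most of the memory savings of the LoRA-style methods come from.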

Quick Start to LLaMA-Factory

1. Installing Dependencies

Set up the workspace and environment by cloning the LLaMA Factory repository and installing its dependencies:

git clone --depth 1 https://github.com/hiyouga/LLaMA-Factory.git
cd LLaMA-Factory
conda create --name llama-factory python=3.11
conda activate llama-factory
pip install -e ".[torch,liger-kernel,metrics]"
pip install deepspeed==0.14.4

2. Preparing Dataset

LLaMA Factory supports multiple data formats for its training methods (e.g., SFT, DPO, and RLHF) and ships with sample datasets that illustrate the expected structure. All data is stored in the /data directory. To use a custom dataset, prepare the data file and register it in the dataset_info.json file, which is also located in the /data directory.

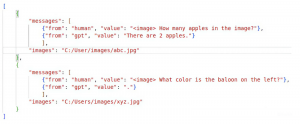

For example, I have a dataset where the image paths, prompt information, and model responses are stored in the file C:/User/annotations.json, and the dataset is structured as follows:

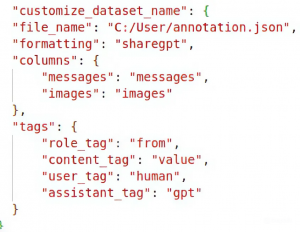

At this point, you can register the dataset by adding a corresponding entry to the dataset_info.json file.
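As a rough illustration, the sketch below registers such an entry from Python. The dataset name `my_vlm_dataset`, the `sharegpt` format, and the column and tag names are assumptions modelled on the multimodal demo entries that ship with the repository. They must match the keys actually used inside annotations.json, so check them against the data README of your installed version.

```python
import json
import tempfile
from pathlib import Path


def register_dataset(info_path, name, entry):
    """Add or replace one dataset entry in dataset_info.json, keeping the others."""
    info_path = Path(info_path)
    info = json.loads(info_path.read_text(encoding="utf-8")) if info_path.exists() else {}
    info[name] = entry
    info_path.write_text(json.dumps(info, indent=2, ensure_ascii=False), encoding="utf-8")
    return info


# Hypothetical entry: the format, column names, and tags are assumptions and
# have to mirror the structure of the annotations file.
my_entry = {
    "file_name": "C:/User/annotations.json",
    "formatting": "sharegpt",
    "columns": {"messages": "messages", "images": "images"},
    "tags": {
        "role_tag": "role",
        "content_tag": "content",
        "user_tag": "user",
        "assistant_tag": "assistant",
    },
}

# Demonstrated on a temporary file; in practice, point this at data/dataset_info.json.
with tempfile.TemporaryDirectory() as tmp:
    info_file = Path(tmp) / "dataset_info.json"
    updated = register_dataset(info_file, "my_vlm_dataset", my_entry)
    print(json.dumps(updated, indent=2))
```

Editing dataset_info.json by hand works just as well. The helper mainly guards against accidentally overwriting the sample entries already in the file.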

3. Finetuning

You can choose to fine-tune via LLaMA Factory's WebUI by running the following command in the terminal:

cd LLaMA-Factory
GRADIO_SHARE=1 llamafactory-cli webui

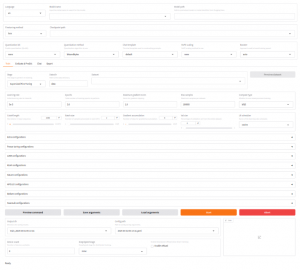

The web interface will appear as follows:

You can adjust the required training configurations, specify the path to the output directory, and click 'Start' to begin the training process.

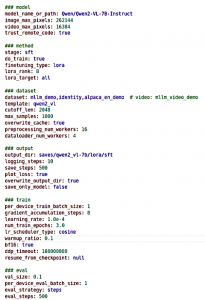

Alternatively, you can fine-tune from the command line by preparing a YAML configuration file (for example, training_config.yaml) that contains the required training settings. You can find examples of different training configurations in the /examples directory.
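A minimal sketch of such a file for LoRA-based SFT, generated from Python, might look like the following. The keys follow the LoRA SFT examples in the repository as far as we are aware, but every value here (the model ID, the dataset name `my_dataset`, the output path, and the hyperparameters) is an illustrative assumption rather than a recommended setting.

```python
from pathlib import Path


def to_yaml(config):
    """Render a flat dict of scalars as simple 'key: value' YAML lines."""
    lines = []
    for key, value in config.items():
        if isinstance(value, bool):
            value = "true" if value else "false"  # YAML booleans are lowercase
        lines.append(f"{key}: {value}")
    return "\n".join(lines) + "\n"


# All values below are placeholders for illustration.
train_config = {
    "model_name_or_path": "meta-llama/Meta-Llama-3-8B-Instruct",
    "stage": "sft",
    "do_train": True,
    "finetuning_type": "lora",
    "lora_rank": 8,
    "lora_target": "all",
    "dataset": "my_dataset",  # hypothetical name registered in dataset_info.json
    "template": "llama3",
    "cutoff_len": 2048,
    "output_dir": "saves/llama3-8b/lora/sft",
    "per_device_train_batch_size": 1,
    "gradient_accumulation_steps": 8,
    "learning_rate": 0.0001,
    "num_train_epochs": 3.0,
    "bf16": True,
}

if __name__ == "__main__":
    Path("training_config.yaml").write_text(to_yaml(train_config), encoding="utf-8")
    print(to_yaml(train_config))
```

The helper only handles flat key-value pairs, which is enough for a basic configuration like this one. For nested settings, a YAML library or a hand-written file is the safer choice.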

Then, run the following command to start the training process:

llamafactory-cli train training_config.yaml

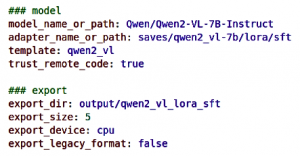

4. Merge LoRA

In the case of LoRA training, the adapter weights need to be merged with the original model to obtain the fine-tuned model. This can be done in the WebUI by selecting the 'Export' tab. For the command line, you also need to prepare a configuration file in YAML format (for example, merge_config.yaml).
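As a hedged sketch, a merge configuration could be written from Python as follows. The keys mirror the merge examples in the repository to the best of our knowledge, while the model ID, adapter path, and export directory are placeholders. The `REQUIRED_KEYS` check is this sketch's own sanity test and not something LLaMA Factory enforces in this form.

```python
from pathlib import Path

# Illustrative values only: replace the model, adapter path, and export directory.
MERGE_CONFIG = """\
### model
model_name_or_path: meta-llama/Meta-Llama-3-8B-Instruct
adapter_name_or_path: saves/llama3-8b/lora/sft
template: llama3

### export
export_dir: output/llama3_lora_sft
export_size: 5
export_device: cpu
export_legacy_format: false
"""

# Keys this sketch insists on before writing the file (an assumption of the sketch).
REQUIRED_KEYS = {"model_name_or_path", "adapter_name_or_path", "template", "export_dir"}


def config_keys(yaml_text):
    """Collect top-level keys from flat 'key: value' YAML, skipping comments."""
    keys = set()
    for line in yaml_text.splitlines():
        line = line.strip()
        if line and not line.startswith("#") and ":" in line:
            keys.add(line.split(":", 1)[0].strip())
    return keys


def write_merge_config(path, yaml_text=MERGE_CONFIG):
    """Write the merge config after checking that the expected keys are present."""
    missing = REQUIRED_KEYS - config_keys(yaml_text)
    if missing:
        raise ValueError(f"merge config is missing keys: {sorted(missing)}")
    Path(path).write_text(yaml_text, encoding="utf-8")
    return Path(path)


if __name__ == "__main__":
    print(write_merge_config("merge_config.yaml"))
```

The `adapter_name_or_path` entry should point at the output directory of the LoRA training run, and `export_dir` is where the merged model will be saved.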

Then run the following command to merge:

llamafactory-cli export merge_config.yaml

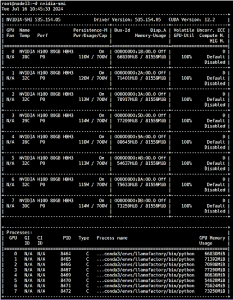

5. Resource monitoring

After setting up the environment successfully, you can check the running process using the 'nvidia-smi' command, for example while training an LLM with LLaMA Factory on an H100 node.
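If you prefer to log GPU usage from a script rather than watching the terminal, the sketch below wraps nvidia-smi's CSV query mode. The helper names are made up for this example, and the selected fields are just one reasonable choice. The script returns nothing on machines without an NVIDIA driver.

```python
import shutil
import subprocess

QUERY_FIELDS = ["index", "name", "utilization.gpu", "memory.used", "memory.total"]


def parse_gpu_csv(text):
    """Parse 'csv,noheader,nounits' output from nvidia-smi into a list of dicts."""
    gpus = []
    for line in text.strip().splitlines():
        values = [v.strip() for v in line.split(",")]
        if len(values) != len(QUERY_FIELDS):
            continue  # skip lines that do not match the requested fields
        gpus.append(dict(zip(QUERY_FIELDS, values)))
    return gpus


def query_gpus():
    """Return per-GPU stats, or an empty list when nvidia-smi is unavailable."""
    if shutil.which("nvidia-smi") is None:
        return []
    cmd = [
        "nvidia-smi",
        f"--query-gpu={','.join(QUERY_FIELDS)}",
        "--format=csv,noheader,nounits",
    ]
    result = subprocess.run(cmd, capture_output=True, text=True, check=False)
    return parse_gpu_csv(result.stdout) if result.returncode == 0 else []


for gpu in query_gpus():
    print(f"GPU {gpu['index']} ({gpu['name']}): "
          f"{gpu['utilization.gpu']}% util, "
          f"{gpu['memory.used']}/{gpu['memory.total']} MiB")
```

Calling `query_gpus()` periodically during training gives a simple view of whether the GPUs are actually being utilized and how close the run is to the memory limit.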

Conclusion

LLaMA Factory is a powerful and user-friendly framework designed to lower the barrier to entry for individuals interested in customizing large language models. It offers a comprehensive suite of state-of-the-art fine-tuning techniques, intuitive UI controls, and compatibility with popular deployment tools while eliminating the need for coding. Whether you're an ML novice or just want a faster way to experiment with LLMs, LLaMA Factory is definitely worth checking out.

Learn more about FPT AI Factory's services HERE.

For more information and consultancy about FPT AI Factory, please contact:

- Hotline: 1900 638 399

- Email: support@fptcloud.com

- Support: m.me/fptsmartcloud