Use Cases for Training Large Language Models (LLMs) with Slurm on Metal Cloud

I. Introduction

Large Language Models (LLMs) are pushing the boundaries of artificial intelligence, enabling human-like text generation and the understanding of complex concepts. However, training these models requires immense computational resources. This document explains how to use distributed training across multiple GPUs and nodes to train LLMs efficiently with Slurm on Metal Cloud.

1. Purpose

This document presents a proof of concept (PoC) for developing and training Large Language Models (LLMs) with open-source tools. The setup is designed to adapt easily to various frameworks that support distributed training and aims to streamline the debugging process.

2. Context: Why Training LLMs Requires a Multi-Node (Cluster) Setup

Large Language Models (LLMs) have significantly advanced artificial intelligence, particularly in the field of natural language processing. Recent models such as GPT-2, GPT-3, and LLaMA2 can understand and generate human-like text with impressive accuracy.

Training LLMs is a highly resource-intensive task that requires substantial hardware resources. Distributed training on GPU clusters, such as those built on NVIDIA H100 GPUs, has become essential for accelerating the training process and efficiently handling large datasets.

Although training LLMs on a single node is technically feasible, several limitations make this approach impractical:

- Extended Training Time: Training on a single node significantly increases the duration of each training cycle, making it inefficient for large-scale models.

- Hardware Limitations: Single-node systems often lack the memory and processing power necessary to handle extremely large models. For instance, models exceeding 70 billion parameters or datasets with over 37,000 samples may exceed the available GPU memory and storage capacity of a single machine.

- Scalability Issues: As model size and dataset complexity increase, single-node training struggles to efficiently utilize resources, leading to bottlenecks and suboptimal performance.
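As a rough illustration of the hardware limitation above, the memory needed for the model states alone can be estimated. This sketch assumes full-parameter training with mixed-precision Adam, for which a commonly cited rule of thumb is about 16 bytes per parameter (2 for half-precision weights, 2 for gradients, and 12 for the FP32 master weights and the two optimizer moments). Activations are ignored. The 72B parameter count and the 8 x 80 GB node match the setup described later in this document; the 16 bytes per parameter figure is an assumption, not a measurement from this setup.

```latex
\begin{align*}
M_{\text{states}} &\approx 72 \times 10^{9}\ \text{params} \times 16\ \tfrac{\text{B}}{\text{param}}
                   = 1.152 \times 10^{12}\ \text{B} \approx 1152\ \text{GB} \\
M_{\text{node}}   &= 8 \times 80\ \text{GB} = 640\ \text{GB} \\
M_{\text{states}} &> M_{\text{node}}
\end{align*}
```

Under these assumptions the model states do not fit in the GPU memory of one node, so they have to be sharded across nodes, offloaded to host memory, or reduced with a parameter-efficient method.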

These challenges are effectively addressed by utilizing a multi-node (cluster) training setup, which distributes computational workloads across multiple GPUs and accelerates training while ensuring scalability. This approach enables:

- Parallel Processing: Distributing model training across multiple nodes reduces processing time and optimizes resource utilization.

- Handling Large Models & Datasets: Multi-node setups can accommodate LLMs with billions of parameters by splitting the workload across multiple GPUs and nodes.

- Improved Fault Tolerance & Flexibility: Cluster computing provides redundancy and enables better handling of system failures, ensuring training stability.

By leveraging a multi-node Slurm cluster, organizations and researchers can efficiently train LLMs while overcoming the constraints of single-node training.

3. SLURM - The Backbone of High-Performance Computing for AI

As AI projects continue to grow in complexity and scale, the demand for high-performance computing (HPC) environments is increasing rapidly. This expansion requires efficient resource management—a challenge that SLURM (Simple Linux Utility for Resource Management) is designed to address effectively.

SLURM acts as the central nervous system of an HPC environment by enabling AI engineers to maximize computing cluster performance and tackle the most demanding AI workloads. It ensures:

- Optimized Task Distribution: Workloads are efficiently allocated across computing nodes to maintain performance balance.

- Intelligent Resource Management: Critical resources such as CPU cores, memory, and specialized hardware like GPUs are dynamically assigned to maximize efficiency.

- Scalability & Adaptability: SLURM reallocates resources as needed, ensuring smooth scalability and efficient workload execution.

By leveraging SLURM, AI researchers and engineers can harness the full power of distributed computing, enabling faster and more efficient training of Large Language Models (LLMs) and other complex AI applications.

4. Why Deploy SLURM on Kubernetes?

SLURM is a widely used job scheduler for High-Performance Computing (HPC), while Kubernetes (K8s) is the leading container orchestration platform for managing distributed workloads. Combining SLURM with Kubernetes offers several advantages:

- Enhanced Scalability & Dynamic Resource Allocation: Kubernetes enables auto-scaling of compute resources based on workload demand, dynamically provisioning or deallocating nodes as needed. Unlike traditional SLURM clusters, which are often static, running SLURM on K8s allows for on-demand scaling, optimizing resource utilization.

- Improved Containerized Workflows & Portability: AI/ML and HPC workloads increasingly rely on containerized environments (e.g., Docker, Singularity). Kubernetes provides native support for containers, making it easier to package and deploy SLURM workloads across multi-cloud and hybrid environments.

- Efficient Multi-Tenancy & Isolation: Kubernetes supports namespace-based isolation, enabling multiple teams to run SLURM jobs securely on shared infrastructure. Resource quotas and limits in K8s help ensure fair allocation of CPU, GPU, and memory among different workloads.

- Integration with Cloud-Native Ecosystem: Running SLURM on K8s allows integration with cloud-native tools like Prometheus (monitoring), Grafana (visualization), and Argo Workflows (pipeline automation). This enables a modern, observability-driven approach to HPC workload management.

- Cost Optimization for Cloud-Based HPC: Traditional SLURM clusters often require dedicated hardware, leading to underutilization when workloads are low. With Kubernetes, organizations can dynamically spin up and terminate cloud-based nodes, reducing unnecessary costs while ensuring peak performance during intensive computational workloads.

Deploying SLURM on Kubernetes combines the strengths of HPC job scheduling and cloud-native orchestration, providing scalability, flexibility, and cost efficiency. This approach is ideal for AI/ML training, large-scale simulations, and enterprise-level scientific computing.

II. Implementation

1. Specifications

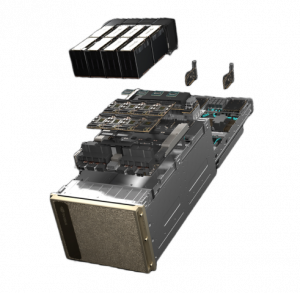

Each node in the setup is equipped with the following specifications:

- GPUs: 8 NVIDIA H100 GPUs, each with 80GB HBM3 memory and 700W power consumption.

- OS: Ubuntu 22.04 LTS

- Driver Version: 550.44.15+

- CUDA Version: 12.2.1+

- Docker Version: 26.1.2+

- NVIDIA Toolkit: 1.15.0-1+

- Built with Docker using NVIDIA toolkit
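Before scheduling any work, it can help to confirm that a node actually meets the minimum versions listed above. The following is a minimal sketch, assuming GNU `sort -V` is available and that `nvidia-smi` and `docker` are on the PATH of the node being checked. The function names `version_ge` and `check_node` are hypothetical helpers introduced only for this illustration.

```shell
#!/usr/bin/env bash

# Return success if version $1 is greater than or equal to version $2.
version_ge() {
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Compare the installed driver and Docker versions against the minimums above.
check_node() {
    local driver docker_v
    driver="$(nvidia-smi --query-gpu=driver_version --format=csv,noheader | head -n 1)"
    docker_v="$(docker version --format '{{.Server.Version}}')"
    version_ge "$driver" 550.44.15 || { echo "driver too old: ${driver}" >&2; return 1; }
    version_ge "$docker_v" 26.1.2 || { echo "docker too old: ${docker_v}" >&2; return 1; }
    echo "GPUs visible: $(nvidia-smi --list-gpus | wc -l)"
}

# Example (not run automatically):
#   check_node
```

Running `check_node` on each node before it joins the cluster catches version drift early, which is usually cheaper than debugging a failed multi-node job.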

2. Steps for Training Large Language Models (LLMs)

a. Upload Data & Model to High Performance Storage (HPS)

Objective:

The first step is to prepare and upload training data and models to High Performance Storage (HPS), ensuring easy access from computing nodes.

Steps:

- Training Library Reference: For training the model, we utilize the LLaMA-Factory library, which is available at the following repository:

- GitHub Repository: LLaMA-Factory

Additional training guidelines and example configurations can be found in the official documentation:

- Examples & Tutorials: LLaMA-Factory Examples

These resources provide detailed instructions on configuring and fine-tuning LLMs, ensuring an efficient training process.

- Storing Model and Data in HPS for Training

The model and dataset are stored in the High-Performance Storage (HPS) under the following paths:

- Model Storage Path: /mnt/data-hps/models/

- Dataset Storage Path: /mnt/data-hps/data/

For this experiment, we use the Qwen/Qwen2.5-72B-Instruct model from Hugging Face. The model is stored in:

/mnt/data-hps/models/Qwen2.5-72B-Instruct/

Regarding the dataset, we use an SFT dataset named stem_sft, which is stored as:

/mnt/data-hps/data/stem_sft.json
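The staging of the model and dataset into these paths could be scripted roughly as follows. This is a sketch, assuming the `huggingface-cli` tool from the `huggingface_hub` package is installed and that HPS is mounted at `/mnt/data-hps` on the machine running the script. The helper names `hps_model_dir`, `stage_model`, and `stage_dataset` are hypothetical.

```shell
#!/usr/bin/env bash
HPS_MODELS=/mnt/data-hps/models
HPS_DATA=/mnt/data-hps/data

# Map a Hugging Face repo id (org/name) to its directory on HPS.
hps_model_dir() {
    echo "${HPS_MODELS}/${1##*/}"
}

# Download a model snapshot from the Hugging Face Hub straight into HPS.
stage_model() {
    local repo_id="$1"
    huggingface-cli download "$repo_id" --local-dir "$(hps_model_dir "$repo_id")"
}

# Copy a local dataset file into the HPS data directory.
stage_dataset() {
    mkdir -p "$HPS_DATA" && cp "$1" "${HPS_DATA}/"
}

# Example (not run automatically):
#   stage_model Qwen/Qwen2.5-72B-Instruct
#   stage_dataset ./stem_sft.json
```

Downloading directly into HPS avoids a second copy of the weights, which matters for a model of this size.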

- Registering the Dataset with LLaMA Factory

To train the model using LLaMA Factory, we must register this dataset by defining its metadata in the dataset_info.json file. The file should be structured as follows:

    {
      "stem_sft": {
        "file_name": "stem_sft.json",
        "formatting": "sharegpt",
        "columns": {
          "messages": "messages"
        },
        "tags": {
          "role_tag": "role",
          "content_tag": "content",
          "user_tag": "user",
          "assistant_tag": "assistant",
          "system_tag": "system"
        }
      }
    }
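A quick sanity check of the registration can save a failed job later. The sketch below is a coarse, text-based check rather than a full JSON validation. It assumes that dataset_info.json sits in the same directory as the data file and that the data file is named after the dataset (`<name>.json`), as in the example above. The function name `check_dataset_registered` is hypothetical.

```shell
#!/usr/bin/env bash

# Coarse check that a dataset is registered and its data file exists.
# Usage: check_dataset_registered <dataset_dir> <dataset_name>
check_dataset_registered() {
    local dataset_dir="$1" name="$2"
    local info="${dataset_dir}/dataset_info.json"

    # The registry file and the data file it points to must both exist.
    [ -f "$info" ] || { echo "missing ${info}" >&2; return 1; }
    [ -f "${dataset_dir}/${name}.json" ] || { echo "missing ${dataset_dir}/${name}.json" >&2; return 1; }

    # The dataset name must appear as a JSON key, and the entry must
    # reference the data file by name.
    grep -q "\"${name}\"[[:space:]]*:" "$info" \
        || { echo "${name} is not registered in ${info}" >&2; return 1; }
    grep -q "\"file_name\"[[:space:]]*:[[:space:]]*\"${name}.json\"" "$info" \
        || { echo "file_name does not match ${name}.json" >&2; return 1; }
}

# Example (not run automatically):
#   check_dataset_registered /mnt/data-hps/data <dataset_name>
```

A JSON-aware tool would be stricter, but this catches the most common mistake, a dataset name in the training config that does not match any key in dataset_info.json.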

b. Set Up Slurm Cluster (Infrastructure & Configuration)

Objective:

Set up the Slurm cluster to manage resources and schedule tasks for model training.

Steps:

- Install and Configure Slurm Controller (slurmctld):

- Install Slurm Controller on a central server to manage compute nodes.

- Edit slurm.conf to define node resources (e.g., CPU, GPU) and configure job parameters.

- Set Up Slurm Daemon (slurmd) on Compute Nodes:

- Install and configure Slurm Daemon on each compute node.

- Ensure communication between compute nodes and Slurm Controller for job distribution.
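The node definitions that go into slurm.conf and gres.conf can be generated rather than typed by hand. The sketch below prints the relevant lines for a pool of identical GPU nodes. The node name prefix, CPU count, and memory size are hypothetical placeholders (this document only specifies 8 GPUs per node), and the helper names `render_gpu_nodes` and `render_gres` are introduced here for illustration. A managed or Kubernetes-based deployment may generate these files for you.

```shell
#!/usr/bin/env bash

# Print slurm.conf lines for a pool of identical GPU nodes.
# Usage: render_gpu_nodes <name_prefix> <node_count> <gpus_per_node> <cpus> <real_memory_mb>
render_gpu_nodes() {
    local prefix="$1" count="$2" gpus="$3" cpus="$4" mem_mb="$5"
    local range
    range="$(printf '%s[01-%02d]' "$prefix" "$count")"
    cat <<EOF
GresTypes=gpu
NodeName=${range} Gres=gpu:${gpus} CPUs=${cpus} RealMemory=${mem_mb} State=UNKNOWN
PartitionName=gpu Nodes=${range} Default=YES MaxTime=INFINITE State=UP
EOF
}

# Print the matching gres.conf line that maps GPUs to device files.
# Usage: render_gres <name_prefix> <node_count> <gpus_per_node>
render_gres() {
    printf 'NodeName=%s[01-%02d] Name=gpu File=/dev/nvidia[0-%d]\n' "$1" "$2" "$(($3 - 1))"
}

# Example (not run automatically; "hgx", 96 CPUs, and 1000000 MB are placeholders):
#   render_gpu_nodes hgx 32 8 96 1000000 >> slurm.conf
#   render_gres hgx 32 8 >> gres.conf
```

After the controller and daemons are restarted with the new configuration, `sinfo` and `scontrol show node` can be used to confirm that every node reports the expected GPU count.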

c. Create Training Configuration & Training Script (LLaMA Factory)

Objective:

Define the training parameters and write the training script using frameworks like LLaMA Factory.

Steps:

- Create Training Configuration File:

- Define hyperparameters like learning rate, batch size, number of epochs, etc., in a configuration file (e.g., config.json or train_config.yaml).

- Write the Training Script:

- Develop the training script (train.py) using frameworks such as PyTorch or TensorFlow. The script will include model definition, loss functions, optimizers, and training logic.

- Integrate LLaMA Factory:

- Use LLaMA Factory to streamline model configuration and training, optimizing the process for LLMs.
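As an illustration of the configuration step, the sketch below writes a LLaMA Factory style YAML file for the SFT run described in this document. The model path, dataset name, context length (2560), epoch count (5), and per-device batch size (4) come from this document. Everything else is an assumption made for the example: the fine-tuning type, the DeepSpeed config path, the learning rate and schedule, the logging and save intervals, and the output directory. The key names follow the style of the LLaMA Factory example configs and should be checked against the installed version. The function name `write_train_config` is hypothetical.

```shell
#!/usr/bin/env bash

# Write an SFT training config to the given path.
write_train_config() {
    local out="$1"
    cat > "$out" <<'EOF'
### model
model_name_or_path: /mnt/data-hps/models/Qwen2.5-72B-Instruct

### method (assumption: full fine-tuning with a DeepSpeed ZeRO-3 config)
stage: sft
do_train: true
finetuning_type: full
deepspeed: examples/deepspeed/ds_z3_config.json

### dataset
dataset: stem_sft
dataset_dir: /mnt/data-hps/data
template: qwen
cutoff_len: 2560

### output (hypothetical path and intervals)
output_dir: /mnt/data-hps/output/qwen2.5-72b-stem-sft
logging_steps: 10
save_steps: 500

### train (learning rate and schedule are placeholders)
per_device_train_batch_size: 4
gradient_accumulation_steps: 1
learning_rate: 1.0e-5
num_train_epochs: 5
lr_scheduler_type: cosine
warmup_ratio: 0.1
bf16: true
EOF
}

# Example (not run automatically):
#   write_train_config train_config.yaml
```

With a file like this in place, LLaMA Factory can typically be started with `llamafactory-cli train train_config.yaml`, so a hand-written train.py is only needed when the framework's built-in training loop is not sufficient.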

d. Create Slurm Job File (Resource Allocation & Script)

Objective:

Prepare the Slurm job file to specify resource requirements and job configuration for training.

Steps:

- Create the Slurm Job Script:

- Write a Slurm job file (train_llm.slurm) to define resource requirements (e.g., CPU, GPU, memory) and specify the commands to run the training script.

- Example train_llm.slurm file:

    #!/bin/bash
    #SBATCH --job-name=train_llm
    #SBATCH --nodes=1
    #SBATCH --gres=gpu:1
    #SBATCH --time=48:00:00
    #SBATCH --mem=64GB

    module load cuda/11.2
    python train.py --config config.json

- Define Resource Requirements: Specify the necessary resources (e.g., GPU, CPU, RAM) based on the model's training demands.
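The example job file above requests a single GPU on a single node. For the multi-node runs discussed in this document, a job file might look like the following sketch. It assumes 32 nodes with 8 GPUs each, that `torchrun` is available on the compute nodes, and that train.py is written to run under `torchrun`. The port number is a hypothetical free port, and the `world_size` and `launch` helpers are introduced only for this illustration.

```shell
#!/bin/bash
#SBATCH --job-name=train_llm
#SBATCH --nodes=32
#SBATCH --ntasks-per-node=1
#SBATCH --gres=gpu:8
#SBATCH --time=48:00:00
#SBATCH --output=slurm-%j.out

# One Slurm task per node; torchrun then starts one worker per GPU.
GPUS_PER_NODE=8
MASTER_PORT=29500   # hypothetical free port on the first node

# Total number of training processes across the allocation.
world_size() {
    echo $(( $1 * $2 ))
}

launch() {
    # The first host in the allocation acts as the rendezvous endpoint.
    local master_addr
    master_addr="$(scontrol show hostnames "$SLURM_JOB_NODELIST" | head -n 1)"
    echo "world size: $(world_size "$SLURM_NNODES" "$GPUS_PER_NODE")"

    srun torchrun \
        --nnodes="$SLURM_NNODES" \
        --nproc_per_node="$GPUS_PER_NODE" \
        --rdzv_id="$SLURM_JOB_ID" \
        --rdzv_backend=c10d \
        --rdzv_endpoint="${master_addr}:${MASTER_PORT}" \
        train.py --config config.json
}

# Only launch inside a Slurm allocation, so the file can be sourced safely.
if [ -n "${SLURM_JOB_ID:-}" ]; then
    launch
fi
```

Using one task per node and letting `torchrun` start the per-GPU workers keeps the Slurm side simple. The alternative, one Slurm task per GPU, is also common but requires the training script to read its rank from the Slurm environment.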

e. Submit the Slurm Job (Run the Script to Start the Job)

Objective:

Submit the job to the Slurm job scheduler and begin the training process.

Steps:

- Submit the Job: Use the sbatch command to send the job to Slurm for execution:

sbatch train_llm.slurm

- Check Job Status: Use the squeue command to monitor the status of the job and confirm it’s running as expected.
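When submission is scripted, the job ID can be captured directly instead of being copied from the terminal. The sketch below uses `sbatch --parsable`, which prints only the job ID (optionally followed by `;cluster`), and `squeue` to query the state. The helper names `parse_job_id`, `submit_job`, and `job_state` are hypothetical.

```shell
#!/usr/bin/env bash

# Extract the job ID from `sbatch --parsable` output ("jobid" or "jobid;cluster").
parse_job_id() {
    echo "${1%%;*}"
}

# Submit a job script and print its job ID.
submit_job() {
    local out
    out="$(sbatch --parsable "$1")" || return 1
    parse_job_id "$out"
}

# Print the current state of a job, for example PENDING or RUNNING.
# squeue only reports jobs that the scheduler still lists in the queue.
job_state() {
    squeue -j "$1" -h -o '%T'
}

# Example (not run automatically):
#   job_id="$(submit_job train_llm.slurm)"
#   job_state "$job_id"
```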

f. Monitor Metrics (GPU Usage, Logs, etc.)

Objective:

Monitor the performance of the job during training, especially resource usage like GPU and logs.

Steps:

- Track GPU Usage: Use the nvidia-smi command to check GPU utilization:

nvidia-smi

- Monitor Job Logs: View logs and metrics using:

- scontrol show job <job_id> for job details.

- tail -f slurm-<job_id>.out for real-time log monitoring.
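For longer runs, a machine-readable GPU snapshot is easier to track than the full `nvidia-smi` table. The sketch below uses the `--query-gpu` interface to print one CSV line per GPU and averages the utilization column with `awk`. The helper names `gpu_snapshot` and `avg_gpu_util` are hypothetical, and the 30 second interval in the example is an arbitrary choice.

```shell
#!/usr/bin/env bash

# Print one CSV line per GPU: index, utilization %, memory used MiB, memory total MiB.
gpu_snapshot() {
    nvidia-smi --query-gpu=index,utilization.gpu,memory.used,memory.total \
               --format=csv,noheader,nounits
}

# Read gpu_snapshot output on stdin and print the mean GPU utilization.
avg_gpu_util() {
    awk -F', *' '{ sum += $2; n++ } END { if (n) printf "%.1f\n", sum / n }'
}

# Example (not run automatically), sampling every 30 seconds on a compute node:
#   while sleep 30; do gpu_snapshot | avg_gpu_util; done
```

A sustained low average during training often points to a data loading or communication bottleneck rather than a lack of GPU capacity.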

g. Retrieve the Trained Model from the Output Path (HPS)

Objective:

After training is complete, retrieve the trained model from High Performance Storage (HPS).

Steps:

- Identify the Output Path: Check the Slurm job script or config file to locate the output path where the trained model is saved.

- Download the Trained Model: Use scp, rsync, or an API to fetch the model from HPS:

scp user@server:/path/to/output_model/model_checkpoint.pth .

- Verify the Model: After downloading, verify the model’s integrity and performance.
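One way to verify integrity after the transfer is to compare checksums. The sketch below assumes GNU coreutils (`sha256sum`) on both sides and a manifest file named SHA256SUMS that is created next to the checkpoint on HPS before downloading. The manifest name and the helpers `make_manifest` and `verify_manifest` are hypothetical conventions, not part of the setup described in this document.

```shell
#!/usr/bin/env bash

# Create a SHA256SUMS manifest covering every file in a directory (run on the HPS side).
make_manifest() {
    ( cd "$1" \
      && find . -type f ! -name SHA256SUMS -print0 \
         | sort -z \
         | xargs -0 sha256sum > SHA256SUMS )
}

# Verify the files in a directory against its manifest (run after downloading).
verify_manifest() {
    ( cd "$1" && sha256sum --quiet -c SHA256SUMS )
}

# Example (not run automatically):
#   make_manifest /path/to/output_model                      # on the server
#   rsync -avP user@server:/path/to/output_model/ ./output_model/
#   verify_manifest ./output_model                           # locally
```

Checksums only confirm that the files arrived intact. Model quality still needs to be checked separately, for example by running an evaluation set through the downloaded checkpoint.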

3. Some Execution Results

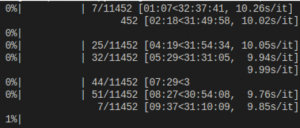

3.1 Pre-training stage

- Data size: 48.74 GB, context length: 4096, model size: 32B, epoch: 1

- 1 node:

  - bs/d = 1: 31 days 7:59:33 ~ 31.3 days

- 32 nodes:

  - bs/d = 1: 70h ~ 2.9 days

  - bs/d = 4: 31h ~ 1.3 days

  - bs/d = 8: OOM (Out of Memory)

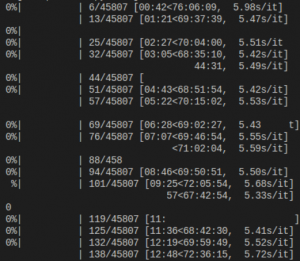

3.2 Post-training stage (SFT)

- Data size: 37.66 MB ~ 37,123 samples, context length: 2560, model size: 72B, epoch: 5

- 1 node:

  - bs/d = 4: 5h22m

- 32 nodes:

  - bs/d = 4: 22m
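The timings above can be turned into speedup and scaling efficiency figures. The sketch below compares runs with the same bs/d value only, which we assume denotes the per-device batch size. Speedup is the single-node time divided by the 32-node time, and efficiency is the speedup divided by the node count. The function name `scaling` is hypothetical.

```shell
#!/usr/bin/env bash

# Print "<speedup> <efficiency %>" for a run that took t1 on one node and tn on n nodes.
# t1 and tn must use the same unit.
scaling() {
    awk -v t1="$1" -v tn="$2" -v n="$3" \
        'BEGIN { s = t1 / tn; printf "%.2f %.1f\n", s, 100 * s / n }'
}

# Pre-training, bs/d = 1: 31 days 7:59:33 (about 751.99 h) vs 70 h on 32 nodes.
scaling 751.99 70 32
# SFT, bs/d = 4: 5h22m (322 min) vs 22 min on 32 nodes.
scaling 322 22 32
```

This prints `10.74 33.6` for pre-training and `14.64 45.7` for SFT, that is, roughly an 11x and a 15x speedup on 32 nodes. Scaling is sub-linear, which is expected once inter-node communication is involved, and the pre-training result at bs/d = 4 (31h) suggests that a larger per-device batch recovers part of the gap.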

III. Conclusion

Training Large Language Models (LLMs) is a computationally demanding process that requires efficient resource management and scalable computing infrastructure. By leveraging Slurm on Metal Cloud, organizations and researchers can take full advantage of distributed training, enabling faster model convergence, optimized GPU utilization, and seamless workload orchestration.

Throughout this document, we have explored the key steps in training LLMs, from uploading models and datasets to the High-Performance Storage (HPS) to configuring Slurm clusters, submitting jobs, monitoring GPU usage, and retrieving the trained models. The integration of Kubernetes with Slurm further enhances scalability, flexibility, and cost efficiency, making it an ideal solution for handling large-scale AI workloads.

By implementing this approach, AI engineers can overcome the limitations of single-node training, efficiently manage multi-node clusters, and accelerate the development of state-of-the-art language models. As AI continues to evolve, leveraging Slurm on cloud-based HPC platforms will play a crucial role in advancing large-scale deep learning and natural language processing.

For more information and consultancy about FPT AI Factory, please contact:

- Hotline: 1900 638 399

- Email: support@fptcloud.com

- Support: m.me/fptsmartcloud