FPT Cloud knowledge-sharing blog

AI Factories Are Reshaping Data Infrastructure for an Intelligent Future

15:36 08/08/2025

From startups to global giants, the AI reasoning era is redefining how we build, think, and operate. Hyperscalers and innovation leaders are scaling AI factories globally, and soon every enterprise will depend on one to stay ahead.

The Rise of AI Factories: Manufacturing Intelligence for an AI-Native Era

The world is entering the age of AI Natives — a new generation of individuals, organizations, and economies born into environments where artificial intelligence is not just an enhancement, but a default operating layer.

Teenagers now grow up talking to AI assistants instead of typing search queries. Companies like Amazon rely on AI to manage logistics at machine speed. Tesla collects terabytes of real-world data every day to refine its autonomous driving models. Even governments are adopting AI copilots to streamline citizen services and policymaking.

In this new era, AI is embedded into products, decisions, and every customer interaction. For AI-Native entities, intelligence must be continuous, generative, and scalable. Meeting the demands of this shift requires a new kind of infrastructure: the AI Factory, a next-generation data center designed not merely to store information but to produce intelligence at scale.

From Data Centers to Intelligence Manufacturing Hubs

Traditional data centers were built for general-purpose computing, capable of processing a wide range of workloads with relative flexibility. However, in today’s AI-driven economy, speed, scale, and specialization matter more than ever. Businesses and governments can no longer afford to wait months for fragmented AI initiatives to yield actionable insights. Instead, they require industrial-grade systems capable of managing the full AI lifecycle, from data ingestion to model training, fine-tuning, and high-volume inference in real-time.

AI Factories are purpose-built to meet this demand. They transform raw data into actionable intelligence with speed, continuity, and cost efficiency. Intelligence is no longer a byproduct. It is a product.

The key performance metric is AI token throughput, which measures how effectively an AI Factory produces reasoning and predictions to power decisions, enable automation, and unlock value.
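As a rough illustration of the metric, token throughput can be read as output tokens produced per second of serving time. The sketch below assumes a hypothetical `InferenceBatch` record and made-up numbers; it is not how any particular AI Factory measures throughput.

```python
from dataclasses import dataclass


@dataclass
class InferenceBatch:
    """Hypothetical record of one served batch (not a real API)."""
    tokens_generated: int
    wall_seconds: float


def token_throughput(batches: list[InferenceBatch]) -> float:
    """Return aggregate output tokens per second.

    Assumes batches ran one after another, so wall times can be summed.
    """
    total_tokens = sum(b.tokens_generated for b in batches)
    total_seconds = sum(b.wall_seconds for b in batches)
    if total_seconds <= 0:
        raise ValueError("total wall time must be positive")
    return total_tokens / total_seconds


# Illustrative numbers only.
batches = [InferenceBatch(12_000, 2.0), InferenceBatch(18_000, 3.0)]
throughput = token_throughput(batches)
print(f"{throughput:.0f} tokens/s")
```

A production system would typically track this per model and per time window, alongside latency, rather than as a single aggregate.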

AI Factories: Building the Backbone of the AI Economy

Around the world, governments and enterprises are accelerating efforts to build AI factories as strategic drivers of economic growth, innovation, and efficiency.

In Europe, the European High-Performance Computing Joint Undertaking has unveiled plans to develop seven AI factories in partnership with 17 EU member states, marking a significant step toward establishing AI infrastructure at scale.

This movement is part of a broader global wave, as countries and corporations invest heavily in AI factories to transform industries and power national competitiveness:

India: Yotta Data Services, in collaboration with NVIDIA, has introduced the Shakti Cloud Platform, democratizing access to advanced GPU computing. By combining NVIDIA AI Enterprise software with open-source tools, Yotta offers a streamlined platform for AI development and deployment.

Japan: Top cloud providers such as GMO Internet, Highreso, KDDI, Rutilea, and SAKURA Internet are building NVIDIA-powered AI infrastructure to revolutionize sectors ranging from robotics and automotive to healthcare and telecommunications.

Norway: Telenor has launched an AI factory leveraging NVIDIA technologies to drive AI adoption across the Nordic region, with a strong emphasis on workforce upskilling and sustainable development.

Together, these initiatives highlight a pivotal shift: AI factories are no longer optional; they are emerging as foundational infrastructure for the digital economy, much like telecommunications and energy grids once were.

Inside an AI Factory: Where Intelligence Is Manufactured

At the core of every AI factory lies a set of vital ingredients: foundation models, trustworthy customer data, and a suite of powerful AI tools. These components come together in a purpose-built environment where models are fine-tuned, prototyped, and optimized for real-world deployment.

As these models enter production, they initiate a continuous learning cycle, drawing insights from new data, refining performance through feedback loops, and evolving with every iteration. This closed-loop system, often called a data flywheel, enables organizations to unlock ever-smarter AI, fueling enterprise growth through adaptability, precision, and scale.

Source: NVIDIA via blog.nvidia.com

FPT AI Factory: Vietnam’s Pioneer, Japan’s Trusted Partner

Amid this global shift, FPT AI Factory stands at the forefront of the region’s AI transformation. It is the first of its kind in Vietnam and a trusted infrastructure partner for enterprise customers in Japan. Developed in strategic collaboration with NVIDIA, FPT AI Factory is purpose-built to accelerate the AI-native evolution of businesses and governments alike.

FPT AI Factory provides an end-to-end infrastructure stack for the entire AI product lifecycle, integrating thousands of NVIDIA H100/H200 GPUs, the latest NVIDIA AI Enterprise software, and FPT’s AI ecosystem and deployment expertise. This combination empowers businesses to accelerate the development and deployment of advanced AI solutions, streamline resource and process management, and optimize total cost of ownership while ensuring speed, scalability, and sustainability.

FPT AI Factory enables enterprises to accelerate every stage of their AI journey through four integrated components:

FPT AI Infrastructure: Built on NVIDIA H100/H200 GPUs, this infrastructure supports compute-intensive AI workloads with high performance and energy efficiency — ideal for training LLMs, multimodal models, and more.

FPT AI Studio: A complete environment for experimentation, fine-tuning, and rapid prototyping that helps teams accelerate development and reduce costs.

FPT AI Inference: A scalable, cost-efficient serving platform optimized for low latency and high throughput, suited for production-grade applications with demanding SLAs.

FPT AI Agents: A GenAI-powered platform for creating intelligent, multilingual, multi-tasking AI agents that integrate seamlessly with enterprise workflows.

Source: FPT Smart Cloud

Additionally, FPT AI Factory is integrated with over 20 ready-to-use generative AI products, enabling rapid AI adoption and immediate impact across customer experience, operational excellence, workforce transformation, and cost optimization.

Powering the Intelligent Enterprise Future

AI is no longer just a tool for innovation; it’s becoming the foundation for enterprise transformation and national competitiveness. Around the world, AI Factories are emerging as strategic infrastructure that empowers organizations to develop, deploy, and scale intelligent systems at speed. More than technical assets, they are catalysts for the next wave of productivity and long-term economic resilience.

FPT AI Factory marks Vietnam’s entry into this global movement. Designed to support enterprises across on-premises, cloud, and hybrid environments, it offers a full-stack platform that simplifies the entire AI lifecycle. With strategic investments spanning Vietnam and Japan, FPT is helping shape a future where intelligence is not an add-on, but a core layer of every business, every industry, and every nation ready to lead in the AI era.

A Deep Dive into the Global Artificial Intelligence Trends, Challenges, and Future Prospects

16:37 31/07/2025

Artificial Intelligence (AI) is becoming a core engine for countries and businesses to accelerate transformation and leap ahead in the smart era. With long-term vision, governments around the world are actively advancing AI through strategic policies, infrastructure investments, and innovation ecosystems to accelerate both business growth and national competitiveness.

Across the global AI race, countries are leveraging distinct national strengths to shape their trajectories. The United States leads with a market-driven model, fueled by Big Tech and a robust culture of private-sector innovation. China, by contrast, advances through a top-down national strategy, positioning AI as a core pillar of digital sovereignty and economic competitiveness. India is rapidly establishing itself as a digital powerhouse, leveraging a deep pool of tech talent and an increasingly dynamic innovation landscape. As a representative of Southeast Asia’s digital ascent, Indonesia demonstrates growing momentum in AI adoption and digital transformation. These four countries have been selected as representative markets to analyze AI trends from various perspectives.

I. The USA: Strategic Investment and AI Policy Leadership

1. Emerging Trends in the United States

Venture capital investment in AI surged to $55.6 billion in Q2/2025, marking the highest level in two years. This represents a 47% increase compared to the $37.8 billion raised in Q1, largely fueled by growing interest in AI startups, according to Reuters. While funding had previously declined from a peak of $97.5 billion in Q4/2021 to a low of $35.4 billion in Q2/2024 due to high interest rates, AI is now reversing that trend by becoming a top destination for new capital.

2. Government Initiatives and Policy Support

As one of the world’s leading technology hubs, the United States is aggressively driving AI development through a comprehensive national strategy—spanning infrastructure, policy, and talent. A key policy shift came under the Trump administration in early 2025, with the Executive Order titled “Removing Barriers to American Leadership in Artificial Intelligence,” focusing on reducing regulatory burdens and accelerating private-sector innovation to strengthen U.S. dominance in the AI race.

Altogether, the U.S. is building a robust AI ecosystem—backed by strong private capital, clear public policies, and effective public-private innovation models—laying a solid foundation to retain its leadership position in the AI era.

II. China: Centralised Planning and Technological Self-Reliance

1. Emerging Trends in China

China is investing heavily in domestic AI chips, supercomputers, big data platforms, and autonomous robotics to reduce reliance on Western technologies. Tech giants such as Baidu, Alibaba, Tencent, and Huawei are leading the charge, working alongside startups to commercialize AI across sectors like transportation, healthcare, finance, and defense.

Aligned with its strategic ambition to lead the global AI race, China is channeling close to $100 billion into AI development by 2025—over half of which is driven by state-led initiatives. This investment is anchored in the “Next Generation AI Development Plan,” reinforcing its long-term vision to position AI as a pillar of national competitiveness by 2030.

2. Government Initiatives and Policy Support

China is shaping a distinct AI development path through strong state coordination and institutional leadership. Recent national efforts focus on building a resilient AI supply chain, securing strategic technologies such as advanced chips, large language models, and sovereign data infrastructure.

China also takes a pioneering role in global AI governance, having registered over 1,400 algorithms and introduced a suite of regulatory frameworks for generative AI, algorithmic accountability, and fair data usage.

Rather than following existing models, China is actively exporting its regulatory approach and technical standards — a signal of its ambition to influence not just the pace but also the rules of global AI development.

III. India's Dominance in the AI Landscape: A Global Powerhouse for Innovation and Startups

In a world shifting toward smarter systems and automated processes, India has become one of the most prominent players in the digital revolution, backed by a vast talent pool and a thriving technology sector. With over 600,000 AI professionals and 700 million internet users, India contributes 16% of the global AI talent pool, second only to the United States.

1. Emerging Trends in India

India is witnessing several significant trends in AI development, and the rise of AI-driven startups is particularly notable. According to Statista (2025), the Indian AI market is currently valued at $7 billion to $10 billion and is projected to reach $31.94 billion by 2031, reflecting a CAGR of 26.37%. At that rate, the market would roughly quadruple in six years. The growth is largely driven by India’s robust talent base and the rise of AI-driven startups that are shaping this dynamic ecosystem.
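The compound-growth arithmetic behind these figures can be checked with a short sketch. The six-year horizon (2025 to 2031) is an assumption made here for illustration, and the function names are hypothetical.

```python
def project_value(base: float, cagr: float, years: int) -> float:
    """Compound a starting value at a constant annual growth rate."""
    return base * (1 + cagr) ** years


def implied_base(target: float, cagr: float, years: int) -> float:
    """Back out the starting value implied by a target and a CAGR."""
    return target / (1 + cagr) ** years


CAGR = 0.2637        # from the cited projection
TARGET_2031 = 31.94  # USD billions, from the cited projection
YEARS = 6            # assumed horizon: 2025 to 2031

growth_multiple = (1 + CAGR) ** YEARS
base_2025 = implied_base(TARGET_2031, CAGR, YEARS)
print(f"growth multiple over {YEARS} years: {growth_multiple:.2f}x")
print(f"implied 2025 base: ${base_2025:.2f}B")
```

Under these assumptions the multiple comes out slightly above 4, and the implied starting value falls inside the quoted $7 billion to $10 billion range.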

In recent years, nearly 3,000 AI startups have been launched, contributing significantly to sectors like healthcare diagnostics, agricultural automation, fintech, and language processing. These startups are leveraging AI to create innovative solutions, such as personalized healthcare diagnostics and precision farming techniques. With a rapidly growing AI-driven startup ecosystem, India is on its way to becoming the third-largest startup ecosystem globally.

Furthermore, AI chatbots, virtual assistants, and automated customer service solutions are becoming a growing trend for businesses of all sizes, as they offer high efficiency while significantly reducing operational costs. This trend is expected to continue, as companies increasingly aim to enhance customer experiences and also optimize their operational processes.

2. Government Initiatives and Policy Support

With an ambitious plan to position India as a global AI leader in sectors such as healthcare, agriculture, and education, the government launched the IndiaAI initiative in 2024. This program aims to build a robust AI ecosystem by enhancing critical infrastructure, such as high-performance computing resources, to support advanced AI research and development. It also focuses on fostering innovation through funding for AI startups and establishing AI labs that serve as innovation hubs. Moreover, the initiative seeks to equip the future workforce with the necessary AI skills by integrating specialized training programs and educational resources, ensuring that India develops a highly skilled pool of AI professionals.

Additionally, the Indian government is fostering collaborations with global technology companies such as Google, Microsoft, and IBM. These collaborations help India access the latest AI technologies and implement them in local contexts, such as in agriculture, urban planning, and disaster management.

IV. The Rise of AI in Indonesia

1. Emerging Trends in Indonesia

Indonesia's AI ecosystem has gained substantial traction in recent years. The country still lacks a comprehensive regulatory framework and clear guidelines for the use and mandatory training of AI across sectors, which makes investment in AI all the more important in shaping its future technological landscape. In 2024, the country saw significant foreign investment in its AI sector, with two major partnerships highlighting Indonesia’s rising role in global technological innovation. NVIDIA partnered with PT Indosat to invest $200 million in an AI factory and skills development program in Surakarta, aiming to build local expertise in AI technologies and provide the infrastructure needed to foster future innovations.

Following this, Microsoft made a landmark commitment to invest $1.7 billion in building cloud and AI infrastructure across Indonesia. As part of this investment, Microsoft plans to train 840,000 professionals, enhancing the country’s AI talent pool and empowering the workforce with crucial skills. These investments not only play a pivotal role in boosting Indonesia’s technological growth and enhancing the digital infrastructure, but also demonstrate a growing international confidence in Indonesia’s AI potential.

2. Government Initiatives and Policy Support

In August 2020, the government launched Indonesia's Golden 2045 Vision, a pivotal initiative aimed at transforming Indonesia from a resource-based economy to an innovation-driven one. This ambitious strategy outlines a comprehensive roadmap for AI development across various sectors, focusing on five key policy pillars: Ethics & Policy, Infrastructure & Data, Talent Development, R&D & Industrial Innovation, and Sectoral Implementation.

Furthermore, the Indonesian government is actively advancing AI through numerous projects, partnering with large enterprises to implement cutting-edge solutions. Several government-led initiatives are being rolled out across sectors to enhance AI technology and infrastructure. To support this vision, the government is engaging local enterprises to execute and deploy these AI projects, including research and development efforts to enhance large language models (LLMs). As part of this strategic push, the government is also promoting the development of Interactive Generative AI, which presents ample opportunities for businesses to lead innovation and growth in the AI sector.

V. Trends and Government Policy Support in Other Countries

Across the globe, nations are increasingly focused on advancing AI technology, each taking unique approaches depending on their specific needs and goals. For example, Japan’s AI Strategy is centered on integrating AI into society to address its aging population and related demographic issues, while Canada is dedicated to cultivating AI research excellence, particularly through initiatives like the Pan-Canadian Artificial Intelligence Strategy.

On the other hand, countries such as Russia and South Korea view AI as a critical tool for enhancing national security and boosting economic power, channeling investments into defense technologies, robotics, and autonomous systems. Meanwhile, in the Middle East, countries like the United Arab Emirates and Saudi Arabia are leveraging AI to accelerate economic diversification, foster innovation, and implement smart city solutions as part of their broader modernization efforts.

VI. Navigating the Risks of AI

While Artificial Intelligence offers immense potential for economic advancement and societal benefit, it also introduces a series of complex challenges and risks. Chief among these are concerns related to data privacy, algorithmic bias, and the potential for mass surveillance. As AI continues to automate various tasks, there is a growing concern regarding the displacement of workers, especially in sectors reliant on manual and repetitive tasks. Furthermore, the widespread use of AI in data processing raises critical concerns about privacy and security, as personal information is vulnerable to potential breaches, misuse, or unauthorized surveillance.

In addition, the advancement of deepfake technology presents significant risks to the credibility of information, as AI-generated content can be utilized to create misleading or entirely fabricated media.

VII. The Promising Horizons of AI in the Future

Artificial Intelligence is fast evolving from a disruptive tool into a foundational driver of global progress. In the coming decade, AI is poised to revolutionize the very foundations of how businesses operate, unleashing a wave of innovation, agility, and intelligence. According to a 2024 report from PwC, AI could contribute up to $15.7 trillion to the global economy by 2030, primarily through increased productivity, automation of routine tasks, and the creation of new markets and services.

Moreover, far from being a job destroyer, AI is also proving to be a job enhancer. The World Economic Forum's “Future of Jobs” report (2025) predicts that while AI will automate certain tasks, it will also generate 78 million net new roles globally, especially in data science, AI governance, creative sectors, and digital infrastructure. Notably, industries with high AI exposure have reported faster wage growth and higher demand for skilled professionals.

The AI Native Revolution: A New Business Paradigm

14:04 28/07/2025

The year 2025 marks a strategic transition in which AI is no longer just a tool but an integral part of daily life. Welcome to the age of AI Natives, a generation redefining how we learn, work, and make decisions.

AI Native: A Strategic Shift for Businesses

What is AI Native? If “Digital Native” refers to individuals born into the digital era, “AI Native” describes those who grow up and thrive in a world where AI is not a futuristic concept but a fundamental part of everyday life. For AI Natives, AI is woven into the way they learn, create, socialise, work, and even understand themselves.

AI Native is becoming a strategic keyword in every discussion about the future of business operations. Unlike Digital Natives, who adapted to digital transformation, AI Natives (regardless of age) work, create, and make decisions in environments where AI is seamlessly integrated. They don’t just use AI as a tool; they collaborate with AI as if it were a true colleague. They are the workforce, and the customers, of the future, whose behaviors, habits, and expectations are shaped by smart, personalised, and consistent AI experiences.

This shift therefore isn’t just about demographics; it is a strategic change to how a business operates. Industries such as finance, banking, and insurance, where big data and processing speed are critical, can no longer rely on fragmented, manual processes. AI Native employees now expect agility, while AI Native customers demand instant and hyper-personalised experiences. To keep pace, businesses must go beyond implementing AI in isolated applications. They need a holistic AI strategy that transforms infrastructure, human capabilities, and operational models.

Image: FPT AI Agents marks the new “digital employee” era

FPT AI Factory Powering the AI Native Future

With a future-driven vision, FPT has laid the strategic foundation for advancing core technologies in Artificial Intelligence, Big Data, and Automation through FPT AI Factory – Vietnam’s leading center for AI training and operations.

FPT AI Factory offers a comprehensive stack for end-to-end AI development – including high-performance computing infrastructure, robust tools for model training and fine-tuning, a flexible deployment platform, and a suite of pre-integrated AI services – enabling enterprises to optimize the entire AI development and operations pipeline in terms of speed, reliability, and cost-efficiency.

Its multi-layered AI ecosystem consists of four key pillars:

FPT AI Infrastructure: A high-performance infrastructure powered by NVIDIA H100/H200 GPUs, built to handle large-scale AI workloads.

FPT AI Studio: A platform providing the tools needed to build next-generation AI models, from LLMs to multimodal AI, enabling fast training, fine-tuning, and prototyping to accelerate development cycles and reduce costs.

FPT AI Inference: A scalable, cost-optimized inference platform designed to balance throughput, stability, and latency – suitable for a wide range of production-grade AI applications, including time-sensitive and high-volume workloads.

FPT AI Agents: A GenAI-powered platform for building AI Agents capable of multi-language, multi-tasking, and human-like interaction. It enables businesses to create adaptive, easily integrated AI communication interfaces.

Additionally, FPT AI Factory provides access to over 20 ready-to-deploy generative AI solutions tailored for practical business uses.

With this comprehensive AI ecosystem and long-term vision, FPT empowers businesses to evolve – from digital transformation to intelligent operations, from manual processes to collaborative AI – toward a truly AI-native future.

What Are AI Agents? Examples, How they work, How to use them.

14:07 22/07/2025

AI Agents are artificial intelligence systems that can interact with the environment and make decisions to achieve goals in the real world without any human guidance or intervention. This technology is shaping technology trends, with notable milestones such as the launch of Project Astra at Google I/O 2024 and the emergence of GPT-4o.

Large corporations are pouring billions of dollars into AI Agents to take the lead in the AI era. In this article, FPT.Cloud clarifies how AI Agents are helping businesses improve processes, enhance customer experience, and optimize operations.

1. What are AI Agents (Intelligent Agents)?

AI Agents are artificial intelligence systems that can interact with the environment and make decisions in the real world without any human guidance or intervention.

AI Agents can gather information from their surroundings, design their own workflows, use available tools, coordinate between different systems, and even work with other Agents to achieve goals without requiring user supervision or continuous new instructions.

With the development of Generative AI, natural language processing, foundation models, and Large Language Models (LLMs), AI Agents can now process multiple types of information at once, such as text, voice, video, audio, and code. Advanced AI agents can learn and update their behavior over time, continuously experimenting with new solutions to problems until achieving optimal results. Notably, they can detect their own errors and find ways to correct them as they progress.

AI Agents can exist in the physical world (robots, autonomous drones, or self-driving cars) or operate within computers and software to complete digital tasks. The aspects, components, and interfaces of each AI agent can vary depending on its specific purpose. Encouragingly, even people without deep technical backgrounds can now build and use AI Agents through user-friendly platforms.

2. What are the key features of an AI Agent platform?

Key features of an AI Agent platform include:

Autonomy: AI Agents can operate independently, make decisions, and take actions without continuous human supervision. For example, self-driving cars can adjust speed, change lanes, stop, or adjust routes based on real-time sensor data about road conditions and obstacles, without driver intervention.

Reasoning Ability: AI agents use logic and analyze available information to draw conclusions and solve problems. They can identify patterns in data, evaluate evidence, and make decisions based on the current context, similar to human thinking processes.

Continuous Learning: AI Agents continuously improve their performance over time by learning from data and adapting to changes in the environment. For instance, customer support chatbots can analyze millions of conversations to gain deeper understanding of common issues and improve the quality of proposed solutions.

Environmental Observation: AI agents continuously collect and process information from their surroundings through techniques like computer vision, natural language processing, and sensor data analysis. This ability helps them understand the current context and make appropriate decisions.

Action Capability: AI agents can perform specific actions to achieve goals. These actions can be physical (like a robot moving objects) or digital (like sending emails, updating data, or triggering automated processes).

Strategic Planning: AI agents can develop detailed plans to achieve goals, including identifying necessary steps, evaluating alternatives, and selecting optimal solutions. This ability requires predicting future outcomes and considering potential obstacles.

Proactivity and Reactivity: AI agents proactively anticipate and prepare for future changes while reacting quickly to unexpected events. For example, the Nest thermostat learns the homeowner’s heating habits and proactively adjusts the temperature before the user returns home, while quickly responding to unusual temperature fluctuations.

Collaboration Ability: AI agents can work effectively with humans and other agents to achieve common goals. This collaboration requires clear communication, coordinated actions, and understanding the roles and objectives of other participants in the system.

Self-Improvement: Advanced AI agents can self-evaluate and improve their operational performance. They analyze the results of previous actions, adjust strategies based on feedback, and continuously enhance their capabilities through machine learning techniques and optimization.

Key Features of AI Agents

3. Differences between Agentic AI Chatbots and AI Chatbots

Below is a comparison table highlighting the distinctions between Agentic AI chatbots and AI Chatbots:

| Criteria | Agentic AI Chatbots | Traditional AI Chatbots |
| --- | --- | --- |
| Autonomy | Operate independently, perform complex tasks without continuous intervention | Require continuous guidance from users, only respond when prompted |
| Memory | Maintain long-term memory between sessions, remember user interactions and preferences | Limited or no memory storage capability, each session typically starts from scratch |
| Tool Integration | Use function calls to connect with APIs, databases, and external applications | Operate in closed environments with no ability to access external tools or data sources |
| Task Processing | Break down complex tasks into subtasks, execute them sequentially to achieve goals | Only process simple, individual requests without ability to decompose complex problems |
| Knowledge Sources | Combine existing knowledge with new information from external sources (RAG) | Rely solely on pre-trained data, unable to update with new information |
| Learning Capability | Continuously learn from interactions, improving accuracy and relevance over time | Do not learn or improve from user interactions, responses always follow fixed patterns |
| Operation Mode | Can perform multiple processing rounds for a single request, creating multi-step workflows | Operate on a single-turn basis (receive-process-respond), without multi-step capabilities |
| Planning Ability | Strategically plan and self-adjust when encountering new information or obstacles | No long-term planning capability or strategy adjustment |
| Personalization | Provide personalized experiences based on user history, preferences, and context | Deliver generalized responses, identical for all users |
| Response Process | Analyze intent, access relevant information, create plan, execute actions, and evaluate results | Recognize patterns, search for appropriate responses in existing database, reply |
| Error Handling | Recognize errors, self-correct, and find alternative solutions when problems arise | Often fail to recognize errors or lack ability to recover when encountering off-script situations |
| User Interaction | Proactively ask clarifying questions, suggest options, and track progress | Passive, only directly respond to what users explicitly ask |
| Workflow | Use threads to store all information, connect with tools, execute function calls when needed | Simple processing according to predefined scripts, no workflow extension capability |
| Practical Applications | Complex customer support, data analysis, process automation, personal assistance | Primarily for FAQs, basic customer support, simple conversations |
| Intent Detection | Accurately identify users’ underlying intents, even when not explicitly stated | Only react to specific keywords or patterns, often missing true intentions |
| System Integration | Easily integrate with multiple systems and applications through APIs | Limited integration capabilities, often requiring custom solutions |
| Development Requirements | Can be developed on no-code platforms, without requiring in-depth programming knowledge | Typically require programming knowledge to build and maintain |

Agentic AI chatbots mark a significant evolution in conversational AI, powered by LLMs but extending well beyond them. Operating on thread-based architecture, they store complete conversation histories, files, and function call results. These advanced chatbots activate via various triggers (scheduled events, database changes, or manual inputs) to analyze requests, interpret intentions, and execute actions autonomously.

Five key innovations drive this technology:

RAG integration for context-aware responses with higher accuracy

Function calling to interact with external systems

Advanced memory systems for continuous learning and adaptation

Tool evaluation to assess resources and fill information gaps

Subtask generation to break down complex goals independently

Unlike traditional chatbots’ single-turn model (receive-process-respond), agentic chatbots process multiple turns per prompt, queue actions strategically, and dynamically select appropriate tools based on user intent. They can search connected knowledge bases, call external APIs, or generate responses from core training when external tools aren’t needed. Critically, no-code platforms have democratized their development, accelerating adoption across industries by enabling businesses of all sizes to implement sophisticated AI without significant technical investment.
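To make the thread-based, multi-round behaviour described above more concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not how any particular platform implements it: the `Thread` class, the two tools, and the keyword-based `plan_actions` function (which stands in for the LLM's tool selection) are all hypothetical.

```python
import re
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Thread:
    """Stores the full history of one conversation, including function call results."""
    events: list[dict] = field(default_factory=list)

    def add(self, kind: str, content: str) -> None:
        self.events.append({"kind": kind, "content": content})


# Hypothetical tools; a real agent would query a knowledge base or an external API.
def search_knowledge_base(query: str) -> str:
    return f"KB article about {query}"


def check_order_status(order_id: str) -> str:
    return f"Order {order_id} is out for delivery"


TOOLS: dict[str, Callable[[str], str]] = {
    "search_knowledge_base": search_knowledge_base,
    "check_order_status": check_order_status,
}


def plan_actions(prompt: str) -> list[tuple[str, str]]:
    """Stand-in for the LLM: turn a prompt into a queue of (tool, argument) steps."""
    queue: list[tuple[str, str]] = []
    order = re.search(r"order\s+(\w+)", prompt, re.IGNORECASE)
    if order:
        queue.append(("check_order_status", order.group(1)))
    if "refund" in prompt.lower():
        queue.append(("search_knowledge_base", "refund policy"))
    return queue


def run_agent(prompt: str, thread: Thread) -> str:
    thread.add("user", prompt)
    results = []
    # One prompt can trigger several processing rounds, one per queued action.
    for tool_name, argument in plan_actions(prompt):
        output = TOOLS[tool_name](argument)
        thread.add("function_call", f"{tool_name}({argument!r}) -> {output}")
        results.append(output)
    # With no tool selected, the agent falls back to the model's own knowledge (stubbed).
    reply = "; ".join(results) if results else "Answered without tools."
    thread.add("assistant", reply)
    return reply
```

Calling `run_agent("Where is order A123 and what is your refund policy?", Thread())` queues two tool calls for a single prompt and records each result in the thread, whereas a prompt that matches no tool is answered directly.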

Differences between Agentic AI chatbots and AI Chatbots

4. Key Components of AI Agents

AI Agents are composed of multiple components working together as a unified system, similar to how the human body functions with senses, muscles, and brain. Each component in AI Agent Architecture plays a specific role in helping the agent sense, think, and interact with the surrounding world.

Key components of AI Agents

4.1. Sensors

Sensors help AI Agents collect information (percepts) from the surrounding environment to understand the context and current situation. In physical robots, sensors might be cameras for “seeing,” microphones for “hearing,” or thermal sensors for “feeling” temperature. For software agents running on computers, sensors might be web search functions to gather online information, or file reading tools to process data from PDF documents, CSV files, or other formats.

4.2. Actuators

If sensors are how agents receive information, actuators are how they affect the world. Actuators are components that allow agents to perform specific actions after making decisions. In physical robots, actuators might be wheels for movement, mechanical arms for lifting objects, or speakers for producing sound. For software agents, actuators might be the ability to create new files, send emails, control other applications, or modify data in systems.

4.3. Brain

Processors, Control Systems, and Decision-Making Mechanisms form the “brain” of the AI Agents, where information is processed and decisions are made. Processors analyze raw data from sensors and convert it into meaningful information. Control systems coordinate the agent’s activities, ensuring all parts work harmoniously. Decision-making mechanisms are the most important part, where the agent “thinks” about processed information, evaluates different action options, and selects the most optimal action based on goals and existing knowledge.

4.4. Learning and Knowledge Base Systems

These are the memory and learning capabilities of AI Agents, allowing them to improve performance over time. Knowledge base systems store information the agent already knows: data about the world, rules of action, and experiences from previous interactions. This might be a database of locations, events, or problems the agent has encountered along with corresponding solutions.

Learning systems allow the agent to learn from experience, recognize patterns, and improve decision-making abilities. An agent with learning capabilities will continuously update its knowledge base, helping it better cope with new situations or changes in the environment.

The complexity level of these components depends on the tasks the AI Agent performs. A smart thermostat might only need simple temperature sensors, a basic control system, and actuators to turn heating systems on/off. In contrast, a self-driving car needs to be equipped with all components at high complexity levels: diverse sensors to observe roads and other vehicles, powerful processors to handle large amounts of real-time data, sophisticated decision-making systems for safe navigation, precise actuators to control the vehicle, and continuous learning systems to improve driving capabilities through each experience.
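The smart thermostat mentioned above is small enough to sketch in full. The Python below is a hypothetical illustration of how the four components map onto code: the class names, the 0.5 °C tolerance band, and the use of a plain list as the "knowledge base" are assumptions made for the example.

```python
from dataclasses import dataclass, field


@dataclass
class Heater:
    """Actuator: the only way this agent changes its environment."""
    is_on: bool = False

    def switch(self, on: bool) -> None:
        self.is_on = on


@dataclass
class ThermostatAgent:
    target_c: float
    tolerance_c: float = 0.5  # assumed dead band around the target
    heater: Heater = field(default_factory=Heater)
    history: list[float] = field(default_factory=list)  # minimal knowledge base

    def sense(self, reading_c: float) -> float:
        """Sensor: record a temperature percept."""
        self.history.append(reading_c)
        return reading_c

    def decide(self, reading_c: float) -> bool:
        """Brain: decide whether the heater should be on."""
        if reading_c < self.target_c - self.tolerance_c:
            return True
        if reading_c > self.target_c + self.tolerance_c:
            return False
        return self.heater.is_on  # inside the band: keep the current state

    def step(self, reading_c: float) -> bool:
        """One sense -> decide -> act cycle."""
        decision = self.decide(self.sense(reading_c))
        self.heater.switch(decision)
        return decision
```

A self-driving car follows the same sense, decide, act cycle, but each of the three methods would be replaced by a far more complex subsystem, plus a learning component that updates the knowledge base.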

AI Knowledge Management Agents

5. How do AI Agents Work?

When a user submits a goal as a prompt, the AI Agent begins goal analysis: it passes the prompt to its core AI model (typically a Large Language Model) and starts planning actions. The Agent breaks complex goals down into specific tasks and subtasks, with clear priorities and dependencies. For simple tasks, the Agent may skip the planning stage and refine its response directly through an iterative process.

During implementation, thanks to Sensors, AI agents collect information (transaction data, customer interaction history) from various sources (including external datasets, web searches, APIs, and even other agents). During this collection process, the AI Agent continuously updates its knowledge base, self-adjusts, and corrects errors if necessary.

The Processors of AI Agents use algorithms and machine learning models, including deep neural networks, to analyze this information and determine the necessary actions.

Throughout this process, the agent’s Memory continuously stores information (such as history of decisions made or rules learned). Additionally, AI Agents also use feedback from users, feedback from other Agents, and Human-in-the-loop (HITL) to self-compare, adjust, and improve performance over time, avoiding repetition of the same errors.

Finally, through Actuators, AI Agents perform actions based on their decisions. For robots, actuators might be parts that help them move or manipulate objects. For software agents, this might be sending information or executing commands on systems.

Technically, an AI agent system consists of four main components, simulating the way humans operate

To illustrate this process, imagine a user planning their vacation. They ask an AI Agent to predict which week of the coming year will have the best weather for surfing in Greece. Since the large language model that underpins the agent is not specialized in weather forecasting, the agent must access an external database that contains daily weather reports in Greece over the past several years.

Even with historical data, the agent cannot yet determine the optimal weather conditions for surfing. Therefore, it must communicate with a surf agent to learn that ideal surfing conditions include high tides, sunny weather, and low or no rainfall.

With the newly gathered information, the agent combines and analyzes the data to identify relevant weather patterns. Based on this, it predicts which week of the coming year in Greece is most likely to have high tides, sunny weather, and low rainfall. The final result is then presented to the user.
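The final step of this example, combining historical weather data with the criteria learned from the surf agent, can be sketched in a few lines of Python. Everything here is hypothetical: the `DailyReport` fields, the scoring weights, and the sample data are assumptions chosen only to show the shape of the computation.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class DailyReport:
    week: int           # week number within the year
    tide_m: float       # high-tide height in metres
    sunny: bool
    rainfall_mm: float


def week_score(days: list[DailyReport]) -> float:
    """Higher is better: reward high tides and sunshine, penalise rainfall."""
    return (
        mean(d.tide_m for d in days)
        + mean(1.0 if d.sunny else 0.0 for d in days)
        - 0.1 * mean(d.rainfall_mm for d in days)  # assumed weight for rain
    )


def best_week(reports: list[DailyReport]) -> int:
    """Group historical reports by week and return the best-scoring week."""
    by_week: dict[int, list[DailyReport]] = {}
    for report in reports:
        by_week.setdefault(report.week, []).append(report)
    return max(by_week, key=lambda week: week_score(by_week[week]))


# Made-up sample standing in for several years of daily reports.
history = [
    DailyReport(week=27, tide_m=0.4, sunny=True, rainfall_mm=0.0),
    DailyReport(week=27, tide_m=0.5, sunny=True, rainfall_mm=1.0),
    DailyReport(week=45, tide_m=0.6, sunny=False, rainfall_mm=12.0),
    DailyReport(week=45, tide_m=0.5, sunny=False, rainfall_mm=8.0),
]
recommended = best_week(history)
```

In a real agent the reports would come from the external weather database and the scoring criteria from the surf agent; the LLM's role is to decide that these two sources are needed and to explain the result to the user.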

According to BCG analysis, AI agents are strongly penetrating many business processes, with a compound annual growth rate of up to 45% over the next 5 years

6. Common Types of AI Agents

There are 5 primary types of AI Agents: Simple Reflex Agents, Model-Based Reflex Agents, Goal-Based AI Agents, Utility-Based Agents, and Learning Agents. Each is suited to specific tasks and applications:

Simple Reflex Agents: Simple Reflex Agents operate on the “condition-action” principle and respond to their environment using simple pre-programmed rules, such as a thermostat that turns on the heating system at exactly 8pm every night. The agent retains no memory, does not consult other agents when it is missing information, and cannot react appropriately to unexpected situations.

Model-Based Reflex Agents: Model-Based Reflex Agents use their cognitive abilities and memory to create an internal model of the world around them. By storing information in memory, these agents can operate effectively in changing environments but are still constrained by pre-programmed rules. For example, a robot vacuum cleaner can sense obstacles when cleaning a room and adjust its path to avoid collisions. It also remembers areas it has cleaned to avoid unnecessary repetition.

Goal-Based AI Agents: Goal-Based Agents are driven by one or more specific goals. They look for appropriate courses of action to achieve the goal and plan ahead before executing them. For example, when a navigation system suggests the fastest route to your destination, it analyzes different paths to find the most optimal one. If the system detects a faster route, it updates and suggests an alternative route.

Utility-Based Agents: Utility-Based Agents evaluate the outcomes of decisions in situations with multiple viable paths. They employ utility functions to measure the usefulness that each action might bring. Evaluation criteria typically include progress toward goals, time requirements, or implementation complexity. This evaluation system helps identify the ideal choice: Is the best option the cheapest? The fastest? The most efficient? For example, a navigation system considers factors such as fuel economy, reduced travel time, and toll costs to select and recommend the most favorable route for the user.

Learning Agents: Learning Agents learn from what they perceive through their sensors and use feedback from the environment or users to improve performance over time. New experiences are automatically added to the Learning Agent’s initial knowledge base, helping the agent operate effectively in unfamiliar environments. For example, e-commerce websites use Learning Agents to track user activity and preferences, then recommend suitable products and services. The learning cycle repeats each time new recommendations are made, and user activities are continuously stored for learning purposes, helping Agents improve the accuracy of their suggestions over time.
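The gap between the simplest and the more deliberate agent types is easy to see in code. The Python sketch below contrasts the 8pm thermostat rule with a utility-based route chooser; the `Route` fields, the default weights, and the sample routes are hypothetical values picked for illustration.

```python
from dataclasses import dataclass


def reflex_heating(hour: int) -> bool:
    """Simple reflex agent: one fixed condition-action rule, no memory."""
    return hour == 20  # heating switches on at 8pm, regardless of anything else


@dataclass(frozen=True)
class Route:
    name: str
    minutes: float
    fuel_litres: float
    toll_cost: float


def utility(route: Route, w_time: float = 1.0, w_fuel: float = 2.0,
            w_toll: float = 1.5) -> float:
    """Utility function: negative weighted cost, so a higher value is better."""
    return -(w_time * route.minutes
             + w_fuel * route.fuel_litres
             + w_toll * route.toll_cost)


def choose_route(routes: list[Route], **weights: float) -> Route:
    """Utility-based agent: score every viable option and pick the best one."""
    return max(routes, key=lambda route: utility(route, **weights))


routes = [
    Route("highway", minutes=30, fuel_litres=4.0, toll_cost=10.0),
    Route("back roads", minutes=45, fuel_litres=3.0, toll_cost=0.0),
]
balanced = choose_route(routes)               # default weights
in_a_hurry = choose_route(routes, w_time=2.0)  # time matters twice as much
```

With the default weights the toll-free back roads win; doubling the weight on travel time flips the choice to the highway. That sensitivity to weights is what separates a utility-based agent from a goal-based one, which would only ask whether a route reaches the destination.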

Popular Types of AI Agents

7. What are the outstanding benefits of using AI Agents?

AI Agents for businesses deliver a consistent experience to customers across multiple channels, with the following 4 outstanding benefits:

Improve productivity: AI Agents help automate repetitive and time-intensive tasks, freeing up human resources from manual work so that businesses can focus on more strategic, creative and high-value initiatives, fostering innovation. For more complex issues, AI Agents can intelligently escalate cases to human agents. This seamless collaboration ensures smooth operations, even during periods of high demand.

Reduce costs: By optimizing processes and minimizing human errors, AI Agents help businesses cut operating costs. Complex tasks are handled efficiently by AI Agents without the need for constant human intervention.

Make informed decisions: AI Agents use machine learning (ML) technologies to help managers collect and analyze data (product demand or market trends) in real time, making faster and more accurate decisions.

Improve customer experience: AI agents significantly enhance customer satisfaction and loyalty by offering round-the-clock support and personalized interactions. Their prompt and precise responses effectively address customer needs, ensuring a smooth and engaging service experience. For example, Lenovo leveraged AI agents to streamline product configuration and customer service, integrating them into key systems like inventory tracking. By building a knowledge database from purchase data, product details, and customer profiles, the AI agents helped Lenovo cut setup time from 12 minutes to 2 minutes, boosting sales productivity and customer experience. This led to a 12% improvement in order delivery KPIs (within 17 days) and generated $5.88 million in one year, according to Gartner.

Benefits of implementing AI Agents in Business

8. Is ChatGPT an AI Agent?

ChatGPT is not an AI Agent. It is a large language model (LLM) designed to generate human-like responses based on received input, with some components similar to AI Agents:

Simple sensors that receive text input

Actuators that generate text, images, or audio

Control system based on transformer architecture

Knowledge base system from pre-training data and fine-tuning.

However, these elements are not sufficient to make ChatGPT a genuine Agent. The most important difference between AI Agents and ChatGPT is autonomy. ChatGPT cannot set its own goals, make plans, or take independent actions. When you ask ChatGPT to write an email, it can create content but cannot send the email itself or evaluate whether sending an email is the best action in a specific situation.

Additionally, ChatGPT cannot directly interact with external systems or adjust its behavior based on real-time feedback. Updates like plugins, extended frameworks, APIs, and prompt engineering can improve ChatGPT’s functionality, but still don’t create a complete Agent. ChatGPT also lacks the ability to maintain long-term memory between sessions. It doesn’t “remember” you or previous conversations unless specifically programmed to do so in certain applications.

ChatGPT lacks core features to be considered an AI Agent

9. Practical Applications of AI Agents

Imagine a future workplace where every employee, manager, and leader not only works together, but is also equipped with a team of AI teammates to support them in every task and at every moment of the workday. With these AI teammates, we will become 10x more productive, achieve better results, create higher quality products, and of course, become 10x more creative.

You may be wondering, “When will this future come?” The answer from FPT is: The future is now. Here are four stories that demonstrate how AI is already impacting businesses.

9.1. Revolutionizing Insurance Claims Processing

Imagine you go to the hospital for a health check-up, buy medicine, and file an insurance claim. Typically, the insurance company’s document processing will take at least 20 minutes. With integrated AI Agents, insurers can process all documents through rapid assessment tools, risk assessment tools, and fraud detection tools, returning results in just 2 minutes.

This represents an incredible leap in productivity, improving the customer experience and creating new competitive value for the business.

AI Agents in Finance – Accounting

9.2. Transforming the Customer Contact Center

The second story focuses on customer service. Several FPT.AI customers have deployed AI systems for inbound and outbound communications. These systems provide human-like customer support, handling requests, resolving issues, and providing excellent service.

For some customers, AI Agents are now handling 70% of customer requests, completing 95% of received tasks, and achieving a customer satisfaction rating of 4.5/5. Currently, FPT’s customer service AI Agents manage 200 million user interactions per month.

How AI Agents Improve Customer Service

Advantages of applying AI Agents in customer service

9.3. Empowering pharmacists with AI Mentor

At Long Chau, the largest pharmacy chain in Vietnam, more than 14,000 pharmacists work every day to advise customers. To ensure they stay updated with knowledge and work effectively, FPT.AI has developed an AI Mentor that interacts with more than 16,000 pharmacists across 2,000 pharmacies every day.

This AI Mentor identifies strengths and weaknesses, provides insights, and personalizes conversations to help them improve. The results are:

Pharmacists’ competencies improved by 15%.

Productivity increased by 30%.

Within the first nine months of the year, the pharmacy chain recorded a revenue growth of 62%, reaching VND 18.006 trillion, accounting for 62% of FRT’s total revenue and completing 85% of its 2024 plan. More importantly, we pride ourselves on helping pharmacists become the best versions of themselves while continuously improving.

FPT AI Mentor won the “Outstanding Artificial Intelligence Solution” award at AI Awards 2024

9.4. From a cost center to a profit center

FPT.AI’s AI Innovation Lab works with customers to identify opportunities, deploy pilots, and scale solutions. For example, one of our clients transformed their customer service center from a cost center to a profit center.

Using AI, they detected when customers were happy and immediately suggested appropriate products or services to upsell credit cards, cross-sell overdrafts, activate new customers to sign up, and reactivate existing customers. This approach helped the customer service center contribute about 6% of total revenue.

The four stories above are just a small part of the countless ways AI can transform businesses. AI, as a new competitive factor, is opening up a blue ocean of innovation. Every company and organization will need to reinvent their operations and build a strong foundation to compete in the future, leveraging the advances of AI.

Applications of AI Agents in practice

10. Challenges in Deploying AI Agents

AI Agents are still in their early stages of development and face many major challenges. According to Kanjun Qiu, CEO and founder of AI research startup Imbue, the development of AI Agents today can be compared to the race to develop self-driving cars 10 years ago. Although AI Agents can perform many tasks, they are still not reliable enough and cannot operate completely autonomously.

One of the biggest problems that AI Agents face is limited logical reasoning. According to Qiu, although AI programming tools can generate code, they often write incorrect code or cannot test their own code. This requires constant human intervention to perfect the process.

Dr. Fan also commented that we have not yet achieved an AI Agent that can fully automate repetitive daily tasks. Such systems can still “go crazy” and do not always follow the user’s request exactly.

Challenges and Considerations When Using AI Agents

Another major limitation is the context window, which bounds how much data an AI model can read, understand, and process at once. Dr. Fan explains that models like ChatGPT can write code but have difficulty processing long and complex code, while humans can easily follow hundreds of lines of code.

Companies like Google have had to improve the ability to handle context in their AI models, such as with the Gemini model, to improve performance and accuracy.

For “physical” AI Agents such as robots or virtual characters in games, training them to perform human-like tasks is also a challenge. Currently, training data for these systems is very limited and research is just beginning to explore how to apply generative AI to automation.

11. Continue writing the future with AI Agents with FPT.AI

In the digital economy, competition between companies and countries is no longer based solely on core resources, technology and expertise. Organizations, from now on, will need to compete with a new important factor: AI Companions or AI Agents.

It is expected that by the end of 2025, there will be about 100,000 AI Agents accompanying businesses in customer care, operations and production. Each AI Agent will undertake a number of tasks such as programming, training, customer care… Thanks to that, employees are more empowered, businesses increase operational productivity, improve customer experience, and make more accurate decisions based on data analysis.

The Future of AI Agents

FPT AI Agents is a platform that allows businesses to develop, build and operate AI Agents simply, conveniently and quickly. Its main advantages include:

Easy to operate using natural language.

Flexible integration with enterprise knowledge sources.

AI models are optimized for each task and language.

Currently, FPT AI Agents supports 4 languages: English, Vietnamese, Japanese and Indonesian. In particular, AI Agents have the ability to self-learn and improve over time.

FPT AI Agents is FPT Smart Cloud’s trump card in the AI era

AI Agents are all operated on FPT AI Factory – an ecosystem established with the mission of empowering every organization and individual to build their own AI solutions, using their data, supplementing their knowledge and adapting to their culture. This differentiation fosters a completely new competitive edge among enterprises and extends to building AI sovereignty among nations.

FPT AI Agents Deployment Process

With more than 80 cloud services and 20 AI products, FPT AI Factory helps accelerate AI applications by 9 times thanks to the use of the latest generation GPUs, such as H100 and H200, while saving up to 45% in costs. These factories are fully compatible with the NVIDIA AI Enterprise platform and architectural blueprints, ensuring seamless integration and operation.

12. FAQs about AI Agents

12.1. What’s the difference between LLMs and AI Agents?

An LLM (Large Language Model) is an AI model trained on a vast amount of data to recognize and generate natural language. It functions as a “language brain,” predicting each next word in a sentence. However, traditional LLMs are limited to their initial training data, lack the ability to interact with the outside world, and cannot update themselves with new information after training.

An AI Agent, on the other hand, is a much more complete system that typically uses an LLM as its core intelligence foundation but is supplemented with sensors (gathering information), actuators (performing actions), knowledge bases (storing knowledge), and control systems (making decisions). This structure allows AI Agents to not only understand language but also interact with the surrounding environment.

The decisive difference between LLMs and Agents lies in the AI Agent’s “tool calling” capability. Through this mechanism, an Agent can retrieve updated information from external sources, optimize workflows, automatically break down and solve subtasks, store interactions in long-term memory, and plan for future actions. These capabilities help AI Agents provide more personalized experiences and comprehensive responses, while expanding their practical application across many fields.

Difference among LLM, RAG and AI Agent

12.2. Are Reasoning models (like OpenAI o3 and DeepSeek R1) AI agents?

No. Reasoning models like o3 and R1 are LLMs trained to reason through solutions to complex problems. They do this by breaking them down into multiple steps using chain of thought. These LLMs cannot naturally interact with other systems or extend their reasoning beyond architectural limitations.

12.3. How do AI Agents integrate with existing systems and workflows?

The most common ways to integrate AI agents are:

Connect it to a RAG platform, providing native connections between LLMs and knowledge bases. This allows the agent to use representations of your documents and business data as context for future responses, increasing output accuracy.

Through APIs to external services. When you configure function calls in the AI agent platform, the model interacts with API endpoints in the same way a traditional program would, creating all the headers and body of the call.
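As a rough illustration of the API route, the Python sketch below turns a model's function call into an HTTP request with headers and a JSON body. The tool definition, the `api.example.com` endpoint, and the token are all hypothetical, and the request is only constructed, not sent.

```python
import json
from urllib.request import Request

# Hypothetical tool registered on the agent platform.
WEATHER_TOOL = {
    "name": "get_weather",
    "method": "POST",
    "url": "https://api.example.com/v1/weather",
}


def build_request(tool: dict, arguments: dict, api_token: str) -> Request:
    """Assemble the headers and body of the call, as a traditional program would."""
    return Request(
        url=tool["url"],
        data=json.dumps(arguments).encode("utf-8"),
        method=tool["method"],
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_token}",
        },
    )


# What the model emits for a function call: a tool name plus JSON arguments.
model_output = '{"tool": "get_weather", "arguments": {"city": "Athens"}}'
call = json.loads(model_output)
if call["tool"] != WEATHER_TOOL["name"]:
    raise ValueError(f"unknown tool: {call['tool']}")

request = build_request(WEATHER_TOOL, call["arguments"], api_token="demo-token")
```

Passing `request` to `urllib.request.urlopen` would send it; the response body is then fed back to the model as the function call result.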

AI Agents workflow

12.4. How does Human-in-the-loop fit into the AI Agent workflow?

Human-in-the-loop frameworks enhance supervision of AI agent systems. Simply put, the agent’s actions pause at predetermined points in the workflow. A notification is sent to the user, who must review decisions, information, and scheduled tasks. Based on this information, the user will approve or change how the AI agent will continue the task.
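A minimal Python sketch of such a checkpoint is shown below. The `Step` type and the reviewer callback are hypothetical; in a real deployment the reviewer would be a notification and approval screen rather than a function.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Step:
    description: str
    needs_approval: bool = False  # marks a predetermined pause point


# The reviewer returns the step (possibly edited) to continue, or None to stop.
Reviewer = Callable[[Step], Optional[Step]]


def run_workflow(steps: list[Step], reviewer: Reviewer) -> list[str]:
    log: list[str] = []
    for step in steps:
        if step.needs_approval:
            reviewed = reviewer(step)  # the workflow pauses here for a human
            if reviewed is None:
                log.append(f"halted before: {step.description}")
                break
            step = reviewed  # continue with the approved or changed step
        log.append(f"done: {step.description}")
    return log


steps = [
    Step("draft refund email"),
    Step("send refund of $500", needs_approval=True),
    Step("close ticket"),
]
```

A reviewer that returns an edited step (for example, lowering the refund amount) lets the workflow continue with the change, while a reviewer that returns `None` stops the agent before the sensitive action runs.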

12.5. Will AI agents take our jobs?

This technology will certainly replace jobs and bring changes to the market, although there is no clear vision of when and how this might happen. Workers may be replaced by AI agents in many industries. At the same time, many positions for AI development and maintenance may be created, along with human-in-the-loop positions, to ensure that human decisions control AI actions rather than the other way around.

12.6. Do AI agents exacerbate bias and discrimination?

An AI model is only as unbiased as the data it was trained on—so yes, they are biased. Addressing these issues involves changing machine learning processes and creating datasets that represent the full spectrum of the world and human experiences.

AI Agents with Human-in-the-loop

12.7. Who is responsible when an AI Agent makes a mistake?

This remains a difficult problem in ethics and law: it is still unclear who should be blamed for accidents and unintended consequences. The developers? Hardware/software owners? Operators? As new laws are created and industry safeguards are put in place, we will be able to understand what roles AI agents can and cannot assume.

In short, with the ability to be autonomous, operate independently, make decisions based on data and real-world environments, AI Agents are a powerful automation solution that helps businesses optimize processes. The AI Agents market is forecast to reach a size of 30 billion USD by 2033 and maintain a growth rate of about 31% per year.

The explosive potential of this technology in the next decade is huge. Contact FPT.AI now to take advantage of the enormous power of AI colleagues, accelerate innovation, enhance customer experience and scale more efficiently than ever!

Integrating FPT AI Marketplace API Key into Cursor IDE for Accelerated Code Generation

11:38 18/07/2025

In the AI era, leveraging large language models (LLMs) to enhance programming productivity is becoming increasingly common. Instead of relying on expensive international services, developers in Vietnam now have access to FPT AI Marketplace — a domestic AI inference platform offering competitive pricing, high stability, and superior data locality.

This article provides a step-by-step guide to integrating FPT AI Marketplace’s model API into Cursor IDE, enabling you to utilize powerful code generation models directly within your development environment.

1. Create an FPT AI Marketplace Account

Visit https://marketplace.fptcloud.com/ and register for an account.

Special Offer: New users will receive $1 in free credits to experience AI Inference services on the platform!

2. Browse the List of Available Models

After logging in, you can view the available models on FPT AI Marketplace.

Figure 1: List of available models on FPT AI Marketplace

For optimal code generation results, it is recommended to select models such as Qwen-32B Coder, LLaMA-8B, or DeepSeek.

3. Generate an API Key

Please log in and navigate to https://marketplace.fptcloud.com/en/my-account#my-api-key

Click “Create new API Key”, select the desired models, enter a name for your API key, and then click “Create”.

Figure 2: API Key creation interface

Verify the information and retrieve your newly generated API Key.

Figure 3: API Key successfully created

4. Configure Cursor IDE with FPT AI Marketplace API

Steps to configure:

1. Open Cursor IDE → go to Cursor Settings → select Models.

2. Add Model:

a. Click Add model

b. Add the model (e.g., qwen_coder, deepseek_r1).

3. Enter API Key:

a. In the OpenAI API Key field, paste the API key you generated from FPT AI Marketplace.

4. Configure FPT AI URL:

a. Enable Override OpenAI Base URL

b. Enter the following URL: https://mkp-api.fptcloud.com

Figure 4: Configuring API Key and Base URL in Cursor IDE

5. Confirmation:

a. Click the Verify button.

b. If Verified Successfully appears, you are now ready to start using the model!
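If verification fails, it can help to check the key outside Cursor. The Python sketch below builds an OpenAI-style chat request against the base URL from step 4. Note the assumptions: the `/v1/chat/completions` path is inferred from the fact that Cursor treats this as an OpenAI base URL and is not confirmed by this guide, the `FPT_API_KEY` environment variable name is made up for the example, and `qwen_coder` is simply the sample model name used above.

```python
import json
import os
from urllib.request import Request

BASE_URL = "https://mkp-api.fptcloud.com"  # from step 4 above
CHAT_PATH = "/v1/chat/completions"         # assumption: OpenAI-compatible path


def build_chat_request(api_key: str, model: str, prompt: str) -> Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return Request(
        url=BASE_URL + CHAT_PATH,
        data=json.dumps(payload).encode("utf-8"),
        method="POST",
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
    )


request = build_chat_request(
    api_key=os.environ.get("FPT_API_KEY", "sk-placeholder"),  # hypothetical variable name
    model="qwen_coder",
    prompt="Write a Python function that reverses a string.",
)
```

Sending the request with `urllib.request.urlopen(request)` should return a JSON response if the key, model name, and path are valid; check the Marketplace documentation for the exact endpoint before relying on it.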

5. Using Code Generation Models in Cursor

You can now:

Use the AI Assistant directly within the IDE to generate code.

Ask the AI to refactor, optimize, or explain your existing code.

Select the model you wish to use.

Figure 5: Using the Llama-3.3-70B-Instruct model from FPT AI Marketplace to refactor code

6. Monitor Token Usage

To manage your usage and costs:

Go to My Usage on FPT AI Marketplace.

View the number of requests, input/output tokens, and total usage.

This allows you to see how many tokens you have used, helping you better control and manage your costs.

Conclusion

With just a few simple steps, you can harness the full power of the FPT AI Marketplace. You’ll be able to leverage advanced AI models at a cost-effective rate, accelerate your workflow with fast code generation, intelligent code reviews, performance optimization, and automated debugging. At the same time, you can easily monitor and manage your usage with clarity and transparency.

FPT AI Factory – $200 Million Bold Bet on Sovereign AI

15:36 16/07/2025

From infrastructure, core products to local talent, FPT AI Factory is laying the first bricks toward Vietnam’s long-term ambition of achieving artificial intelligence (AI) sovereignty.

In April 2024, a simple phrase lit up under the white lights of FPT’s main auditorium in Hanoi: “AI Factory – Make-in-Vietnam”, next to the Nvidia logo. The message was subtle, yet it carried the pride and great aspirations of Vietnamese engineers.

In an era where data is the “new oil” and algorithms dictate competitive power, a Vietnamese corporation investing in AI with infrastructure located in Vietnam, developed by Vietnamese engineers, and focused on meeting the needs of Vietnamese users is no longer just a company’s internal strategy. It is a concrete indication that Vietnam is stepping up to assert its position on the global AI map, not just as a consumer of imported technology, but as a creator of its own.

Decision No. 1131/QĐ-TTg is a strategic push that places AI among the 11 national key technologies. For FPT, it’s a much-needed boost for a dream that has been nurtured for years: mastering core technologies. FPT AI Factory - Vietnam’s first AI factory - is turning that vision into reality: a place where data, algorithms, talent, and ambition come together, gradually transforming Vietnamese intelligence from an idea into strategic national products.

With AI Factory, FPT did not take the safe pathway, but chose AI as a “strategic bet” - investing directly in core capabilities, building compute infrastructure, and developing an open ecosystem not just to serve itself, but to empower the entire business community. From 5-person startups to enterprises with thousands of employees, anyone can access the platform and create their own AI solutions.

With the $200 million investment plan announced in April 2024, FPT is not building another traditional data center, but a factory of intelligence - one that doesn’t manufacture hardware or commercial software, but generates the true assets of the future digital economy: computing power, language models, and intelligent agents (AI Agents).

FPT’s “Build Your Own AI” strategy is more than just a tech slogan or a product roadmap. It is a strategic move to narrow the gap with global digital powerhouses. Rather than replicating generic AI models, FPT is building a platform that empowers local organizations to develop AI tailored to their language, user behavior, and local business systems.

This is not just a technological leap, but a strategic choice with multiple layers of meaning. It is all about making AI accessible - not only for engineers, but for those who “may not live by algorithms but live with systems,” from logistics managers to customer service operators.

FPT AI Factory is beyond a tech initiative. It embodies a concept that is gaining global relevance: sovereign AI, where computing power is no longer dependent on external forces, data is not silently exported, and creative potential is no longer trapped in pre-packaged, imported “black boxes” with limited adaptability.

AI Factory - The Infrastructure for the Era of Mass AI

In 2023, an engineer named Pieter Levels built an AI startup from a coffee shop in Bali. This one-man model then generated an annual revenue of $1 million. Another anecdote that caught widespread attention tells of a programmer who used GPT-4 to create an entire interactive adventure game within a few hours… while waiting for his wife to give birth in the hospital.

The launch of ChatGPT moved AI from linear growth into an era of exponential breakthroughs. In just five years, AI has evolved from a specialized concept into a foundational tool: ChatGPT writes emails, Midjourney creates visuals that even professional artists admire, and Copilot has become a companion for developers who half-jokingly call it “organized laziness.” AI-embedded applications have steadily made their way into enterprise software, collaborative platforms, and creative tools, enabling people to make faster, more accurate decisions and complete their work more efficiently.

The presence of AI does not come with a bang; it spreads quietly through millions of small clicks each day. It happens whenever someone edits a document, schedules a meeting, or analyzes a customer report, all with AI silently running in the background.

What once required a team of engineers now fits neatly inside a browser. This shift is more than a sign of a tech trend - it’s a signal that AI has become part of the digital infrastructure, weaving its way into every corner of daily life.

In this context, no-code/low-code AI platforms, which allow non-programmers to build intelligent solutions, are reshaping the full picture of digital transformation. According to Mordor Intelligence, this market is expected to reach $8.89 billion by 2030, with a compound annual growth rate (CAGR) of 17.03%.

At the same time, the concept of AI Agents - autonomous agents capable of independently executing tasks within an organization - is gaining just as much traction, with a projected market value of $52.62 billion by 2030 (according to MarketsandMarkets).

The rise of these platforms means that AI is no longer a resource reserved for researchers or Big Tech; it is now a tool that can be deployed at any scale. With just a few clicks, a non-expert can create a customer service chatbot, a data classification system, or even a personal digital assistant - tasks that once required an entire team of engineers.

Yet this democratization brings new demands for infrastructure. Large language models like DeepSeek-R1, with 671 billion parameters, would require immense computational resources to run effectively if their architecture were not optimized. By using a Mixture of Experts (MoE) architecture, which activates only a portion of the model during each inference, DeepSeek-R1 has decreased computing costs by up to 94% while maintaining high accuracy.
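The 94% figure is consistent with simple parameter arithmetic. The sketch below is a rough illustration, not a benchmark. It assumes that compute per token scales with the number of activated parameters, and it uses the publicly reported figure of about 37 billion activated parameters per token for DeepSeek-R1, a number that is not stated in this article. The function names are illustrative.

```python
def active_fraction(total_params_b: float, active_params_b: float) -> float:
    """Share of a Mixture-of-Experts model's parameters used for one token."""
    if total_params_b <= 0 or not 0 <= active_params_b <= total_params_b:
        raise ValueError("expected 0 <= active_params_b <= total_params_b, total > 0")
    return active_params_b / total_params_b


def compute_reduction(total_params_b: float, active_params_b: float) -> float:
    """Approximate per-token compute saved versus activating every parameter.

    Assumption: compute per token is proportional to activated parameters.
    """
    return 1.0 - active_fraction(total_params_b, active_params_b)


TOTAL_PARAMS_B = 671.0   # total parameters (billions), as quoted in the article
ACTIVE_PARAMS_B = 37.0   # ASSUMPTION: activated parameters per token (billions)

if __name__ == "__main__":
    print(f"active share: {active_fraction(TOTAL_PARAMS_B, ACTIVE_PARAMS_B):.1%}")
    print(f"compute saved: {compute_reduction(TOTAL_PARAMS_B, ACTIVE_PARAMS_B):.1%}")
```

Under these assumptions only about one parameter in eighteen is exercised per token, which is where a saving in the region of 94% comes from. Real-world savings also depend on routing overhead, memory bandwidth, and batch size.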

This is where the AI Factory model becomes essential: a production line for AI capabilities, where every step, from data collection, training, and fine-tuning to deployment and operation, is optimized like an industrial process.

The key differentiator is not the hardware but the guiding philosophy: developing localized AI models that understand not only the language but also the behaviors, customs, and organizational dynamics of each market.

In Vietnam, those models can comprehend Vietnamese, including unique syntax and contextual nuances, to effectively support public administration, customer service, or local financial operations. But this approach extends beyond the border of the S-shaped country. In Japan, FPT is partnering with Sumitomo and SBI Holdings to build a second AI factory, aiming to support and collaborate with local players in developing “sovereign AI” capabilities, customized to the social fabric and strict standards of the Japanese market.

That means each time FPT enters a new country or market, it does not apply a one-size-fits-all model. Instead, the corporation localizes everything from data to product design. With a presence in over 30 countries and territories, FPT has accumulated a wealth of local insights, allowing its AI solutions to truly fit the needs of local users, in line with the “Build Your Own AI” strategy.

Globally, this shift is already well underway. For instance, the Japanese government has invested $740 million to build a domestic AI factory in collaboration with Nvidia, striving to ensure infrastructure independence (Nvidia Blog). Meanwhile, the European Union plans to fund 20 large-scale AI factories between 2025 and 2026 (Digital Strategy EU). The telecom giant SoftBank has also committed over $960 million to developing its own domestic AI infrastructure.

Amid this global momentum, Vietnam needs an approach suited to its own position. We may not be able to compete in terms of scale with the U.S. or China, but we can differentiate ourselves by building infrastructure that is lean, agile, and attuned to local users.

FPT has made the first bold move by investing in core capabilities, developing platforms for Vietnamese businesses, and contributing to a future where AI is not an option, but a prerequisite for economic growth and national competitiveness.

From Strategic Vision to a Sovereign AI Ecosystem

Beyond demonstrating its internal capabilities, FPT’s partnership with Nvidia indicates a forward-looking commitment to a direction that many countries have yet to fully pursue.

Departing from the conventional path, FPT AI Factory is designed to produce AI capabilities, from data collection and model training to inference and deployment.

In just one year, this approach has been brought to life through high-performance GPU infrastructure, no-code/low-code platforms, and an open ecosystem that enables businesses to access AI as a service - no need for hardware investment, no dedicated technical team - yet still capable of delivering intelligent tools that meet real-world needs.

This is not AI for show, but a deployable capability at scale, aligned with the “make-in-Vietnam” spirit, spanning from computing power to language models and localized solutions.

An HR specialist can build a chatbot to answer questions about company policies, a logistics manager can train a model to analyze inventory risks, and a customer service representative can use AI to categorize and respond to emails. They do not need to know how to write a loss function or select an optimizer, the kinds of technical barriers that have long kept most people from accessing AI. All they need are practical problems and real data.

One of the defining features of FPT’s AI platform is its deep adaptability to local contexts, including language, user behavior, and operational structures. Models are trained on formal datasets specific to each target market, enabling them to accurately understand linguistic nuances, workflows, and industry characteristics. Instead of requiring businesses to overhaul their core systems, AI services can be flexibly integrated with existing infrastructure, from CRM and ERP to internal platforms, allowing AI to operate as a natural extension of an organization’s current technology ecosystem.

This strategy helps safeguard data, shorten deployment time, and maintain on-site technological control - core elements for building sovereign AI capabilities that transcend the boundaries of a single nation. With an open architecture and a localization-first mindset, this model can be quickly replicated across international markets with similar needs.

The capabilities of FPT AI Factory have also been recognized through global benchmarks. On the TOP500 list of the world’s most powerful supercomputers (LINPACK benchmark), FPT’s system in Vietnam currently ranks 38th and its system in Japan 36th - a solid foundation for entering performance-intensive markets.

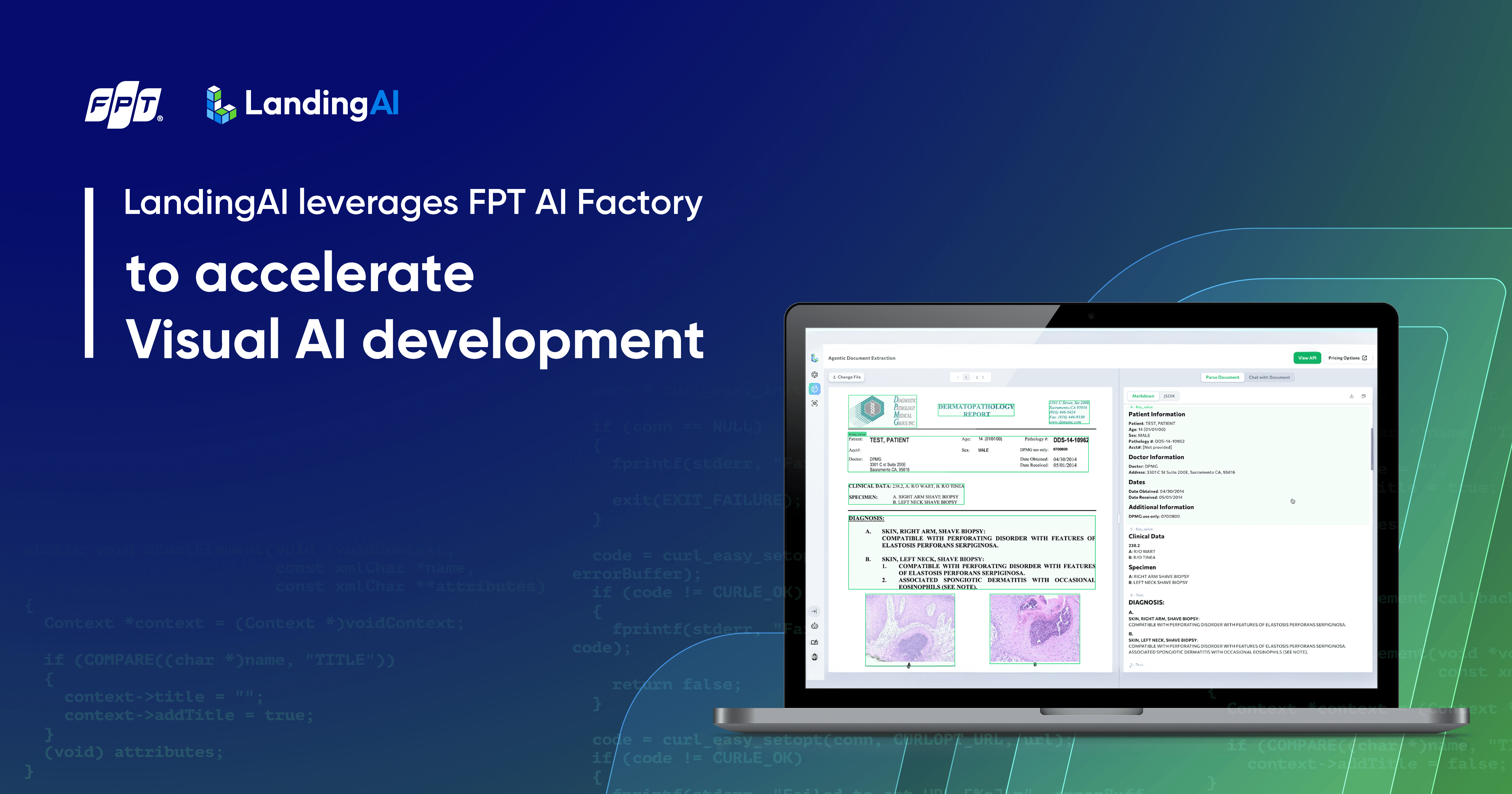

The stature of an AI factory is also reflected in its ability to attract global partners. A standout example is LandingAI - a company founded by Andrew Ng, well known for its enterprise Visual AI platform.

As it expanded to new markets, LandingAI chose to deploy its image inference tasks on Metal Cloud from FPT AI Factory. As a result, it significantly lowered costs and reduced model deployment times from several weeks to just a few days - a critical advantage for businesses pursuing rapid growth.

A single year may not seem long in the tech industry, but in the world of AI, where change happens exponentially, it is enough time to chart a path forward. While much of the world is still finding its footing, FPT made an early bet on practical AI infrastructure, not just to keep pace with global trends, but to deliver real value quickly, certainly, and strategically: building AI in a way that fits local markets and opens the door to autonomy in what is widely seen as the “power game” of the 21st century.

“Build Your Own AI” Strategy – FPT Opens Infrastructure, Enterprises Take Control of the Digital Future

FPT’s announcement to build 5 AI factories globally by 2030 is not a publicity stunt, but a firm commitment to go the distance in the technology race: investing in robust AI infrastructure while simultaneously developing a ready, capable AI workforce as a core competency.

On the “hard” aspect, FPT currently operates one of the region’s most powerful AI computing systems, partners with global leaders like Nvidia, and continues to expand its capabilities in both Vietnam and Japan. But as Dr. Truong Gia Binh, FPT Chairman, shared, “The world is facing a serious talent shortage, and that is where FPT holds an advantage.” Therefore, the “soft” component of this strategy focuses on education and widespread AI literacy, preparing students, engineers, and operational experts across industries with the skills to use and integrate AI at scale.

FPT perceives this as a journey that fuses the computational power of machines with the thinking and adaptability of humans to craft breakthrough solutions.

“As AI becomes more accessible and democratized, the greater the demand will be for AI factories. We will not stop at just two factories because many global corporations have already approached us to collaborate,” said Mr. Trương Gia Bình.

Start with infrastructure and data. While the world races to train models with trillions of parameters, even a 32-billion-parameter model requires around 400 billion tokens to train. For comparison, a human processes only about 50–100 million language tokens in a lifetime. Vietnam’s digital data remains fragmented and lacks transparent sharing mechanisms, which presents a significant challenge for building localized AI capabilities.
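The scale gap in these figures can be made concrete with a few lines of arithmetic. The sketch below uses only the numbers quoted in this article (32 billion parameters, about 400 billion training tokens, 50–100 million language tokens per human lifetime). The function names are illustrative, and the results are rough ratios rather than measurements.

```python
MODEL_PARAMS = 32e9              # parameters in the example model
TRAINING_TOKENS = 400e9          # tokens needed to train it, per the article
HUMAN_LIFETIME_TOKENS = (50e6, 100e6)  # low and high lifetime estimates


def tokens_per_parameter(tokens: float, params: float) -> float:
    """Training tokens consumed per model parameter."""
    return tokens / params


def lifetimes_equivalent(tokens: float, lifetime_tokens: float) -> float:
    """How many human lifetimes of language exposure a corpus represents."""
    return tokens / lifetime_tokens


if __name__ == "__main__":
    ratio = tokens_per_parameter(TRAINING_TOKENS, MODEL_PARAMS)
    low, high = HUMAN_LIFETIME_TOKENS
    print(f"tokens per parameter: {ratio}")
    print(f"lifetimes (high estimate of human exposure): "
          f"{lifetimes_equivalent(TRAINING_TOKENS, high):,.0f}")
    print(f"lifetimes (low estimate of human exposure): "
          f"{lifetimes_equivalent(TRAINING_TOKENS, low):,.0f}")
```

On these figures, the training corpus corresponds to several thousand human lifetimes of language exposure. That is why fragmented or inaccessible national data becomes a bottleneck long before compute does.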

The solution stems from the unique data Vietnam already possesses: healthcare, education, and insurance. Once these sectors are fully digitized, we can not only build models that reflect local realities but also proactively improve public health and optimize public services.

Data sovereignty, then, is not just a slogan. It is a national strategic imperative. It requires robust digital infrastructure, transparent data-sharing mechanisms, and a long-term commitment to building AI platforms that serve the interests of the Vietnamese people.

According to Mr. Le Hong Viet, CEO of FPT Smart Cloud, FPT’s decision to invest directly in Vietnam is about more than building high-performance computing infrastructure. It is driven by the need to address a key bottleneck in the domestic market and to move AI out of experimental labs and into practical use for every business.

“AI Factory was created to make AI accessible to everyone, from large enterprises to small startups and research institutions across Vietnam,” said Mr. Viet.

For FPT, he added, AI is not just a tool for boosting internal productivity or delivering value to clients. It is a strategic arena for establishing Vietnam’s position on the global technology map. The AI-first mindset has been embedded in FPT’s DNA from the beginning and is now serving as the guiding principle for its next stage of growth. Infrastructure is only the starting point: the real challenge revolves around technology transfer. This is why FPT is pursuing a parallel investment strategy: bringing the most advanced Nvidia chips to Vietnam, developing tools that help businesses fine-tune their own models at low cost, and training a workforce for both operation and deep research.

Mr. Viet further stated that fine-tuning an AI model used to cost tens of thousands of dollars, but now, with FPT’s services, businesses can bring that cost down to a few hundred dollars or even adopt a “pay-as-you-go” model, paying only for what they use. This grants access to cutting-edge technologies for small businesses, universities, and research institutions - organizations that are often left behind in the global AI surge.

At the same time, FPT is not hiding its ambition to expand the AI Factory model internationally, starting with Japan, one of the world’s most digitally advanced economies, yet one that still lacks specialized AI infrastructure to match. Following Japan, FPT aims to accelerate expansion into markets such as Malaysia, South Korea, and Europe.

“We are entering the Japanese market to stay ahead of the wave of AI adoption. FPT will operate as a truly Japanese company to promote the development of sovereign AI in Japan,” Mr. Viet said.

With the involvement of partners like Sumitomo and SBI Holdings, the AI Factory in Japan will not be a replica of the Vietnamese model, but a fully localized entity, from organizational structure to integrated ecosystem.

At the strategic level, Mr. Le Hong Viet put it plainly: “The strategy of promoting sovereign AI will enhance Vietnam’s national competitiveness by amplifying productivity and accelerating automation.”