(Others) Tasks

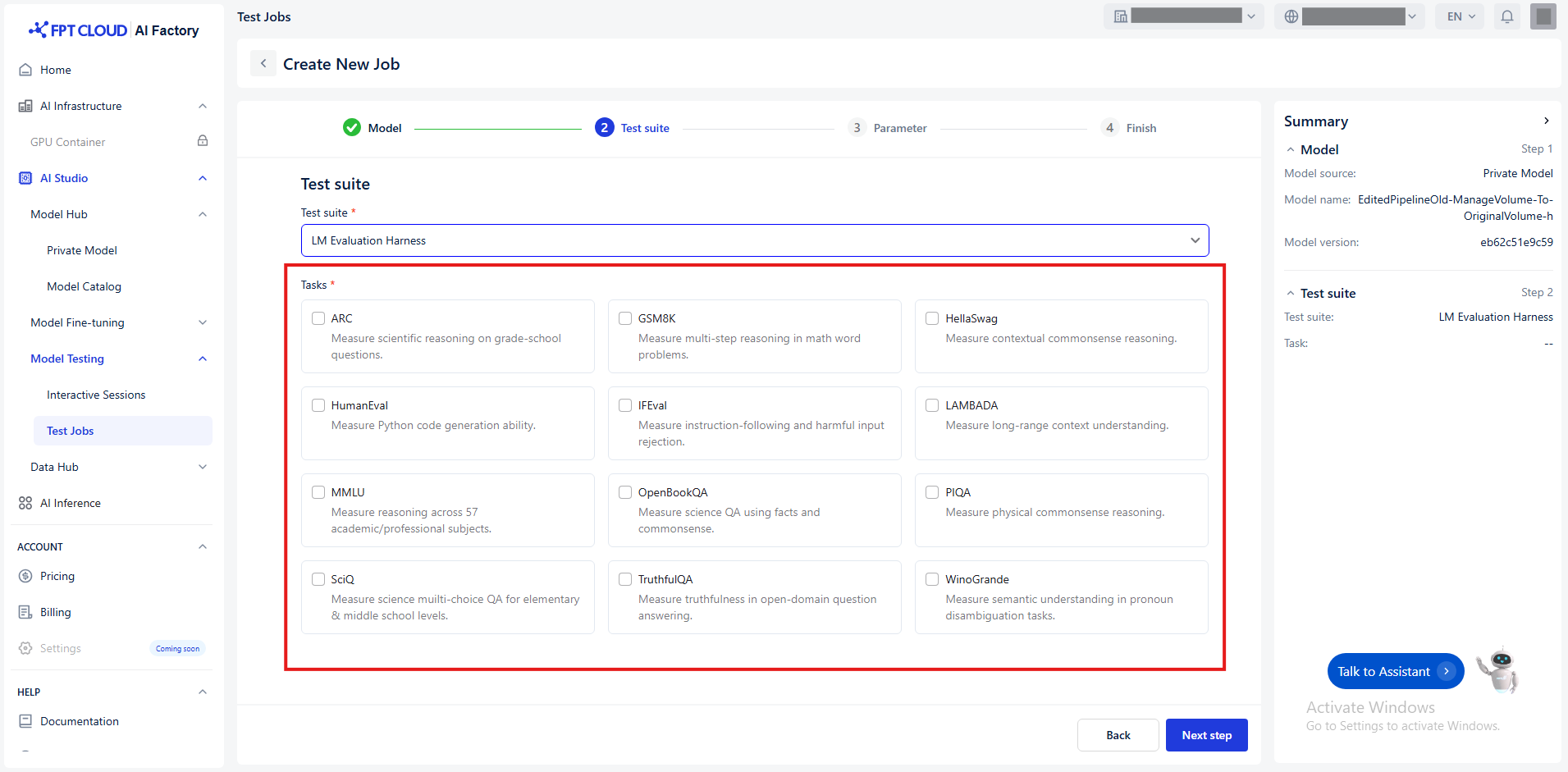

The available tasks depend on the selected test suite:

| Test suite | Tasks | Description |
|---|---|---|
| Nejumi Leaderboard 3 | Jaster | Measure the model’s ability to understand and process the Japanese language. |
| Nejumi Leaderboard 3 | JBBQ | Measure social bias in Japanese question answering by LLMs. |
| Nejumi Leaderboard 3 | JtruthfulQA | Measure the truthfulness of model answers to Japanese questions. |
| LM Evaluation Harness | ARC | Measure scientific reasoning on grade-school questions. |
| LM Evaluation Harness | GSM8K | Measure multi-step reasoning in math word problems. |
| LM Evaluation Harness | HellaSwag | Measure contextual commonsense reasoning. |
| LM Evaluation Harness | HumanEval | Measure Python code generation ability. |
| LM Evaluation Harness | IFEval | Measure instruction-following and harmful input rejection. |
| LM Evaluation Harness | LAMBADA | Measure long-range context understanding. |
| LM Evaluation Harness | MMLU | Measure reasoning across 57 academic/professional subjects. |
| LM Evaluation Harness | OpenBookQA | Measure science QA using facts and commonsense. |
| LM Evaluation Harness | PIQA | Measure physical commonsense reasoning. |
| LM Evaluation Harness | SciQ | Measure science multiple-choice QA for elementary & middle school levels. |
| LM Evaluation Harness | TruthfulQA | Measure truthfulness in open-domain question answering. |
| LM Evaluation Harness | Winogrande | Measure semantic understanding in pronoun disambiguation tasks. |
| VLM Evaluation Kit | ChartQA | Measure chart-based data interpretation and question answering skills. |
| VLM Evaluation Kit | DocVQA | Measure question answering performance on document images. |
| VLM Evaluation Kit | InfoVQA | Measure question answering based on information embedded in images. |
| VLM Evaluation Kit | MTVQA | Measure multilingual visual-text question answering performance. |
| VLM Evaluation Kit | OCRBench | Measure optical character recognition accuracy across varied datasets. |
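
If you need to refer to this list from a script, for example to check that a task name belongs to the chosen test suite before submitting a job, the table can be mirrored as a plain lookup. The following is only a sketch: `TASKS_BY_SUITE`, `tasks_for`, and `suite_of` are hypothetical names introduced for illustration, not part of any FPT Cloud API, and the strings are copied from the table as written.

```python
# Hypothetical lookup mirroring the task table; not an official API.
TASKS_BY_SUITE = {
    "Nejumi Leaderboard 3": ["Jaster", "JBBQ", "JtruthfulQA"],
    "LM Evaluation Harness": [
        "ARC", "GSM8K", "HellaSwag", "HumanEval", "IFEval", "LAMBADA",
        "MMLU", "OpenBookQA", "PIQA", "SciQ", "TruthfulQA", "Winogrande",
    ],
    "VLM Evaluation Kit": ["ChartQA", "DocVQA", "InfoVQA", "MTVQA", "OCRBench"],
}


def tasks_for(suite):
    """Return a copy of the task list for a suite; raise ValueError if unknown."""
    if suite not in TASKS_BY_SUITE:
        raise ValueError(f"Unknown test suite: {suite!r}")
    return list(TASKS_BY_SUITE[suite])


def suite_of(task):
    """Return the suite that offers the given task; raise ValueError if none does."""
    for suite, tasks in TASKS_BY_SUITE.items():
        if task in tasks:
            return suite
    raise ValueError(f"Unknown task: {task!r}")
```

Calling `tasks_for("VLM Evaluation Kit")` returns the five vision-language tasks, and `suite_of("GSM8K")` returns `"LM Evaluation Harness"`. Note that the lookup is case-sensitive, so `TruthfulQA` and `JtruthfulQA` stay distinct.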

© 2025 FPT Cloud. All Rights Reserved.