Horizontal Pod Autoscaler (HPA) automatically adjusts the number of Pod replicas for a workload resource (such as a Deployment or StatefulSet) to match the application's resource demand. When the load on an application running on Kubernetes increases, HPA deploys more Pods to meet it. When the load decreases and the number of Pods is above the configured minimum, HPA scales the workload back down by reducing the number of Pods. HPA for GPU uses custom metrics from DCGM (NVIDIA Data Center GPU Manager) to monitor and scale Pods based on the application's GPU utilization.
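The scaling decision described above can be sketched in a few lines of Python. This is a simplified illustration of the replica formula documented for HPA (desired = ceil(current replicas × current metric / target metric)), not the controller's actual implementation. The function name `desired_replicas` and its parameters are hypothetical, the 10% tolerance is assumed to be the default, and details such as Pod readiness, missing metrics, and stabilization windows are ignored.

```python
import math


def desired_replicas(current_replicas, current_value, target_value,
                     min_replicas=1, max_replicas=3, tolerance=0.1):
    """Simplified sketch of the HPA replica calculation (hypothetical helper)."""
    ratio = current_value / target_value
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: keep the current replica count.
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    # Never go below minReplicas or above maxReplicas.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # Two Pods averaging 0.95 GPU engine activity against a 0.8 target.
    print(desired_replicas(2, 0.95, 0.8))
    # Three Pods averaging 0.2 against the same target.
    print(desired_replicas(3, 0.2, 0.8))
```

With a target of 0.8, two Pods averaging 0.95 scale up to three, while three Pods averaging 0.2 scale down to the minimum of one.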

Example HPA manifest for a GPU workload:

    apiVersion: autoscaling/v2
    kind: HorizontalPodAutoscaler
    metadata:
      name: my-gpu-app
    spec:
      maxReplicas: 3 # Update this accordingly
      minReplicas: 1
      scaleTargetRef:
        apiVersion: apps/v1
        kind: Deployment
        name: my-gpu-app # Name of the Deployment to autoscale
      metrics:
        - type: Pods # Scale Pods based on GPU utilization
          pods:
            metric:
              name: DCGM_FI_PROF_GR_ENGINE_ACTIVE # Add the DCGM metric here accordingly
            target:
              type: AverageValue
              averageValue: 0.8 # Set the threshold value as per the requirement

More details can be found at NVIDIA’s DCGM Metrics docs.

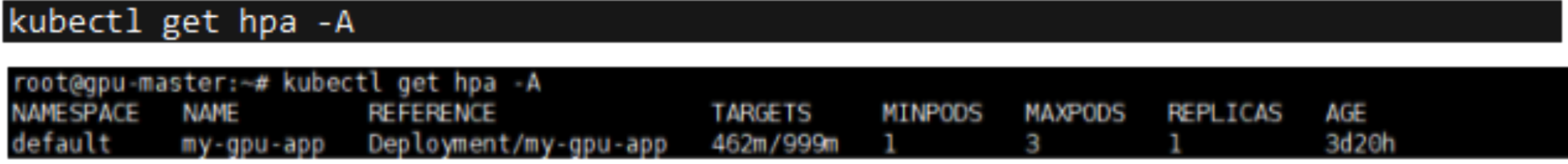

You can view the HPA by running this command:

    kubectl get hpa -A
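When reading the output, keep in mind that Kubernetes expresses metric values as quantities, so a fractional threshold such as 0.8 may be displayed with a milli suffix, for example `800m`. The sketch below shows the conversion. `parse_quantity` is a hypothetical helper that handles only plain numbers and the `m` suffix, not the full Kubernetes quantity grammar.

```python
def parse_quantity(q: str) -> float:
    """Convert a plain or milli-suffixed quantity string to a float.

    Hypothetical helper: other Kubernetes suffixes (k, M, Ki, Mi, ...)
    are intentionally not handled here.
    """
    q = q.strip()
    if q.endswith("m"):
        # "800m" means 800 thousandths, i.e. 0.8
        return int(q[:-1]) / 1000
    return float(q)


if __name__ == "__main__":
    print(parse_quantity("800m"))
    print(parse_quantity("2"))
```

A displayed target of `800m` therefore corresponds to the `averageValue: 0.8` set in the manifest.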